# Best Practices

Source: https://alexcode.ai/docs/chat/best-practices

Guidelines for effective code assistance and documentation usage in Alex Sidebar

## Writing Effective Prompts

* Be specific about your goals

* State what you want to achieve

* Provide clear success criteria

* Share relevant code snippets

* Include error messages

* Mention project requirements

## Using Think First Mode

Think First Mode is most useful for:

* Complex architectural decisions

* Bug investigation

* Performance optimization

* Security considerations

It is usually unnecessary for:

* Quick syntax questions

* Simple code completions

* Documentation lookups

* Basic refactoring

### Tips for Think First Mode

* Allow extra time for the dual-model processing

* Provide detailed context for better analysis

* Use it for mission-critical code changes

* Consider disabling for rapid prototyping phases

### Examples

❌ **Ineffective**: `My code isn't working. Can you help?`

✅ **Effective**: `I'm getting a 'Thread 1: Fatal error: Unexpectedly found nil' when trying to unwrap an optional UIImage in my custom UICollectionViewCell. Here's my cellForItemAt implementation...`

✅ **Feature Request**: `I need to implement a custom tab bar in SwiftUI that shows a circular progress indicator around the selected tab icon. The progress should be animated. Here's my current TabView implementation...`

## Managing Context

* Use @ Files to add specific files

* Include related dependencies

* Share configuration files

* Use @ Codebase for framework-level questions

* Reference specific components

* Share relevant modules

### Examples

❌ **Limited**: `How do I update this delegate method?`

✅ **Complete**: `I need to update this UITableViewDelegate method to handle custom swipe actions. Here's my current implementation (@Files TableViewController.swift) and the custom SwipeActionView (@Files Views/SwipeActionView.swift) I want to integrate.`

✅ **Framework**: `I'm building a custom networking layer (@Codebase Networking/*). Can you help me implement proper retry logic with exponential backoff?`
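As an illustration only, a request like the last one might lead to code along the lines of the sketch below. It is not actual Alex output: `backoffDelay`, `retry`, and their parameters and default values are hypothetical names chosen for this example, and jitter is omitted for brevity.

```swift
import Foundation

/// Delay before retry number `attempt` (0-based): base * 2^attempt, capped at `maxDelay`.
func backoffDelay(attempt: Int, base: TimeInterval = 0.5, maxDelay: TimeInterval = 30) -> TimeInterval {
    let delay = base * pow(2.0, Double(attempt))
    return min(delay, maxDelay)
}

/// Runs `operation`, making at most `maxAttempts` attempts in total.
/// After each failure it waits for an exponentially growing delay before trying again.
func retry<T>(
    maxAttempts: Int = 4,
    base: TimeInterval = 0.5,
    operation: () async throws -> T
) async throws -> T {
    precondition(maxAttempts > 0, "maxAttempts must be at least 1")
    var failures = 0
    while true {
        do {
            return try await operation()
        } catch {
            failures += 1
            // Give up and surface the last error once the attempt budget is spent.
            if failures >= maxAttempts { throw error }
            let delay = backoffDelay(attempt: failures - 1, base: base)
            try await Task.sleep(nanoseconds: UInt64(delay * 1_000_000_000))
        }
    }
}
```

A caller would wrap a throwing async call, for example `try await retry { try await fetchProfile() }`, where `fetchProfile` stands in for your own networking function.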

## Using Documentation

* Use @ Apple Docs for framework reference

* Reference specific APIs

* Include version information

* Use @ Apple Docs (Individual) for specific methods

* Reference specific classes

* Include parameter details

### Examples

❌ **Vague**: `How do I use Core Data?`

✅ **Specific**: `I need help implementing NSFetchedResultsController (@Apple Docs NSFetchedResultsController) with multiple sections based on dates. Here's my current Core Data model (@Files Model.xcdatamodeld)...`

✅ **API Reference**: `Can you explain how to use URLSession's (@Apple Docs URLSession) background download tasks with proper delegate handling (@Apple Docs URLSessionDownloadDelegate)?`

## Platform-Specific Best Practices

* Use Swift-specific terminology

* Reference Swift documentation

* Include Swift version

* Reference UI frameworks

* Include view hierarchy

* Share layout constraints

### Examples

❌ **Ambiguous**: `How do I create a button?`

✅ **SwiftUI**: `I'm using SwiftUI (iOS 16+) and need to create a custom button with a gradient background, dynamic shadow, and haptic feedback. Here's my current Button implementation...`

✅ **UIKit Integration**: `I need to embed this SwiftUI view (@Files CustomView.swift) into my existing UIKit navigation stack. Here's my current UIHostingController setup...`

## Common Scenarios

* Share error messages

* Include stack traces

* Reference relevant code

* Explain current structure

* Describe desired outcome

* Share relevant files

### Examples

❌ **Unclear**: `The app crashes sometimes.`

✅ **Detailed**: `The app crashes when switching between tabs while a network request is in progress. Here's the crash log and relevant networking code (@Files NetworkManager.swift). The issue started after implementing async/await...`

✅ **Refactoring**: `I want to refactor this massive view controller (@Files ProfileViewController.swift) into smaller components using MVVM. Here's my planned architecture diagram...`

### Examples

❌ **Too Much**: `Sharing entire project files for a simple UI fix`

✅ **Just Right**: `Continuing from our previous chat about the networking layer (see chat history), I need to add request caching. Here's the specific RequestCaching protocol I want to implement...`

✅ **Breaking Down**: `I need to migrate this UIKit project to SwiftUI. Let's break it down: 1. First, let's handle the navigation structure 2. Then, convert each view controller individually 3. Finally, implement the data flow with @StateObject and ObservableObject`

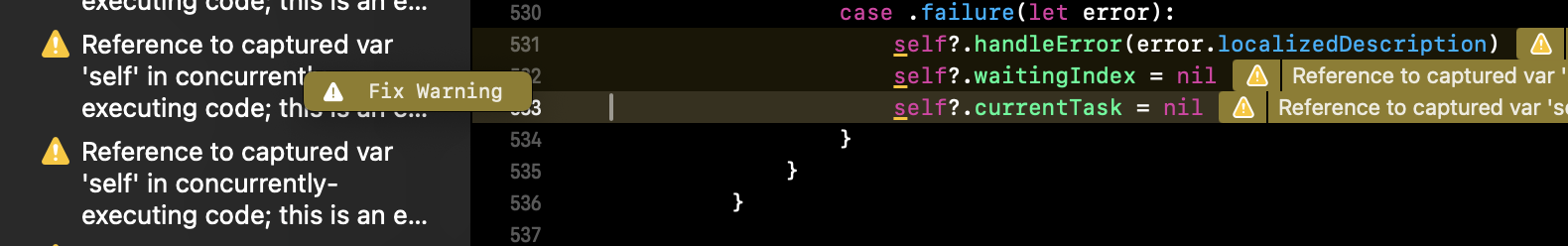

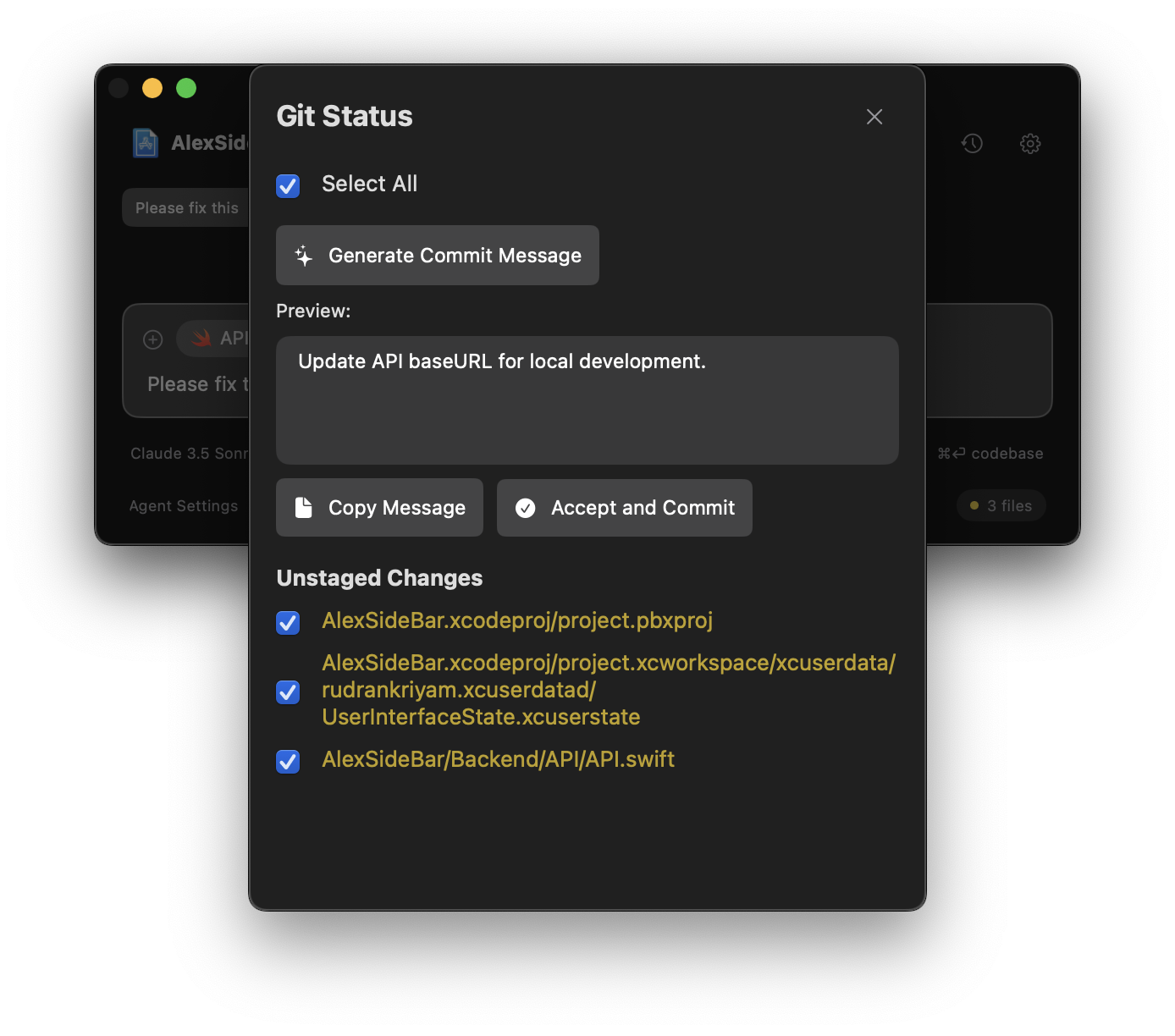

## Working with Build Errors

* Use for automatic error resolution

* Alex handles the entire build-fix cycle by using Xcode's build system

* No manual intervention needed

* Continues until build succeeds
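Conceptually, the cycle described above can be pictured as a loop that builds, fixes one error, and rebuilds. The sketch below is a simplified model, not Alex's implementation: `BuildResult`, `buildAndFix`, and the `maxIterations` safety cap are assumptions made for illustration, and the build and fix steps are stand-in closures.

```swift
/// Outcome of one build attempt (hypothetical type for this sketch).
enum BuildResult: Equatable {
    case success
    case failure(errors: [String])
}

/// Repeats build -> fix until the build succeeds or `maxIterations` builds have run.
/// Returns the number of builds it took, or nil if the loop gave up.
func buildAndFix(
    maxIterations: Int = 10,
    build: () -> BuildResult,
    fix: (String) -> Void
) -> Int? {
    precondition(maxIterations >= 1, "maxIterations must be at least 1")
    for iteration in 1...maxIterations {
        switch build() {
        case .success:
            return iteration
        case .failure(let errors):
            // Address errors one at a time, then rebuild on the next pass.
            guard let first = errors.first else { return nil }
            fix(first)
        }
    }
    return nil
}

// Usage with a fake project that starts with two pending errors.
var pending = ["Missing import Foundation", "Type mismatch in User.swift"]
let builds = buildAndFix(
    build: { pending.isEmpty ? .success : .failure(errors: pending) },
    fix: { error in pending.removeAll { $0 == error } }
)
print(builds ?? -1) // prints 3: two failing builds, then one that succeeds
```

The cap is a design choice for the sketch: a loop with no limit could run indefinitely if a fix never resolves its error.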

### When to Use Automatic Build & Fix

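Automatic Build & Fix works well for compilation problems such as:

* Multiple compilation errors

* Missing imports or protocols

* Type mismatches

* Initialization errors

* Access control issues

It is less suited to problems that need your own judgment:

* Complex architectural decisions

* Business logic errors

* Performance optimizations

* Custom framework integration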

### Examples

✅ **Automatic Fix**: `Click "Build & Fix Errors" when you see multiple red errors in Xcode. Alex will handle missing imports, protocol conformance, and type issues automatically one by one until the build succeeds.`

### Build Error Best Practices

1. **Let Alex Work**: Do not interrupt the build-fix loop unless necessary

2. **Review Changes**: Always review the final working code

3. **Save Progress**: Use checkpoints after successful builds

## Community Resources

Need more help? Join the [Discord community](https://discord.gg/T5zxfReEnd) for support and tips from other developers.

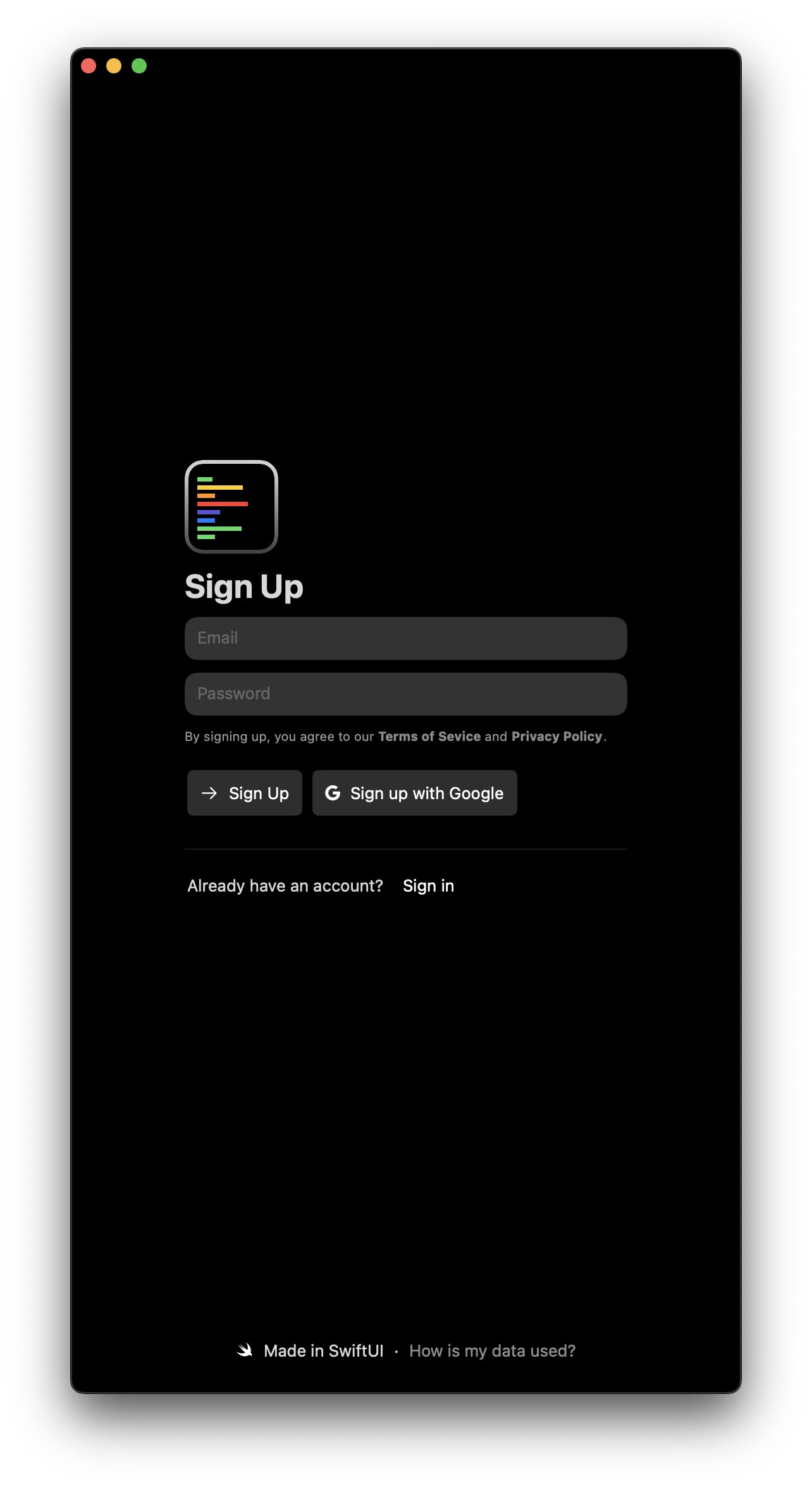

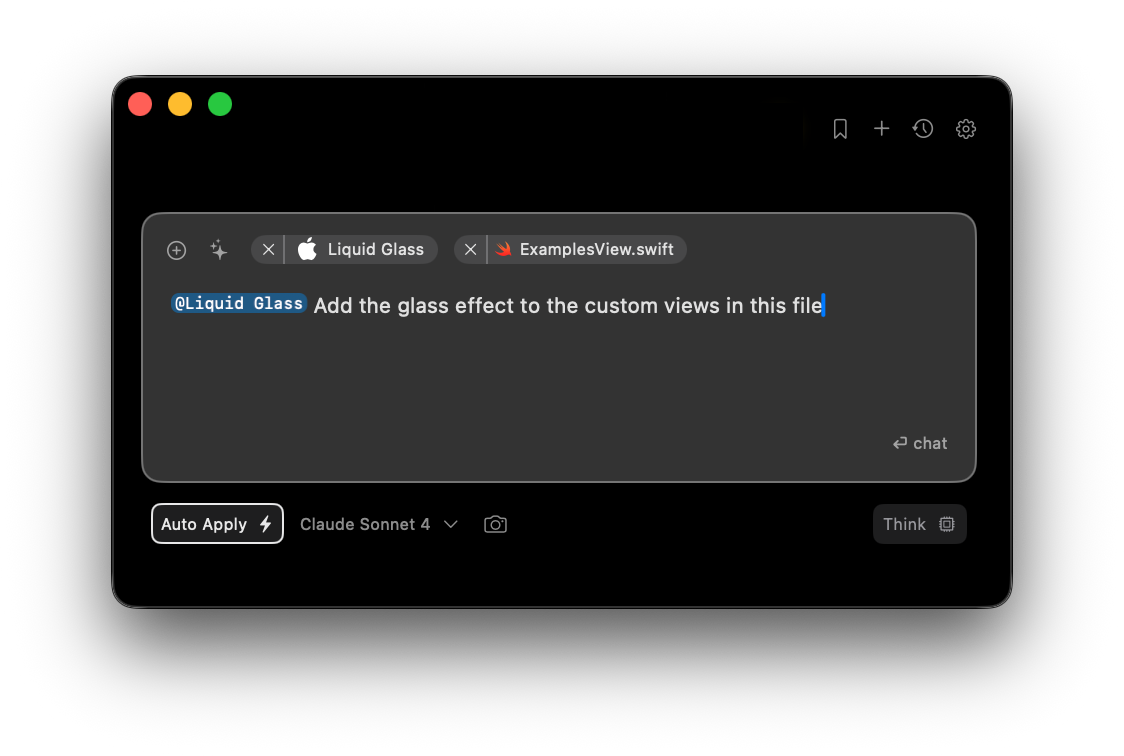

# Agents

Source: https://alexcode.ai/docs/chat/context/agents

AI agents specialized for development tasks

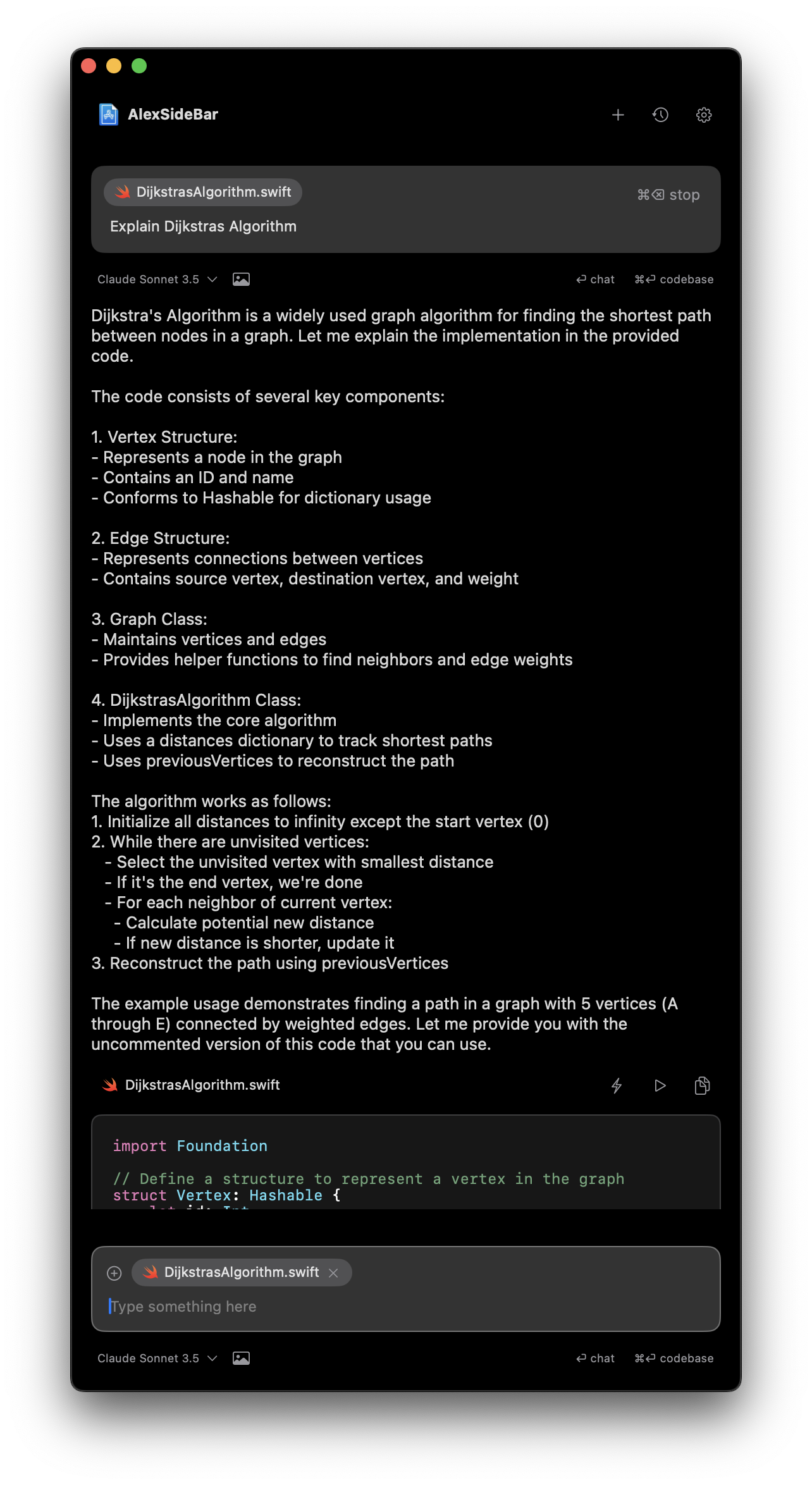

Alex Sidebar's agents are specialized AI assistants, each trained for specific development tasks. Alex automatically selects the right agent for your workflow and handles your development tasks autonomously.

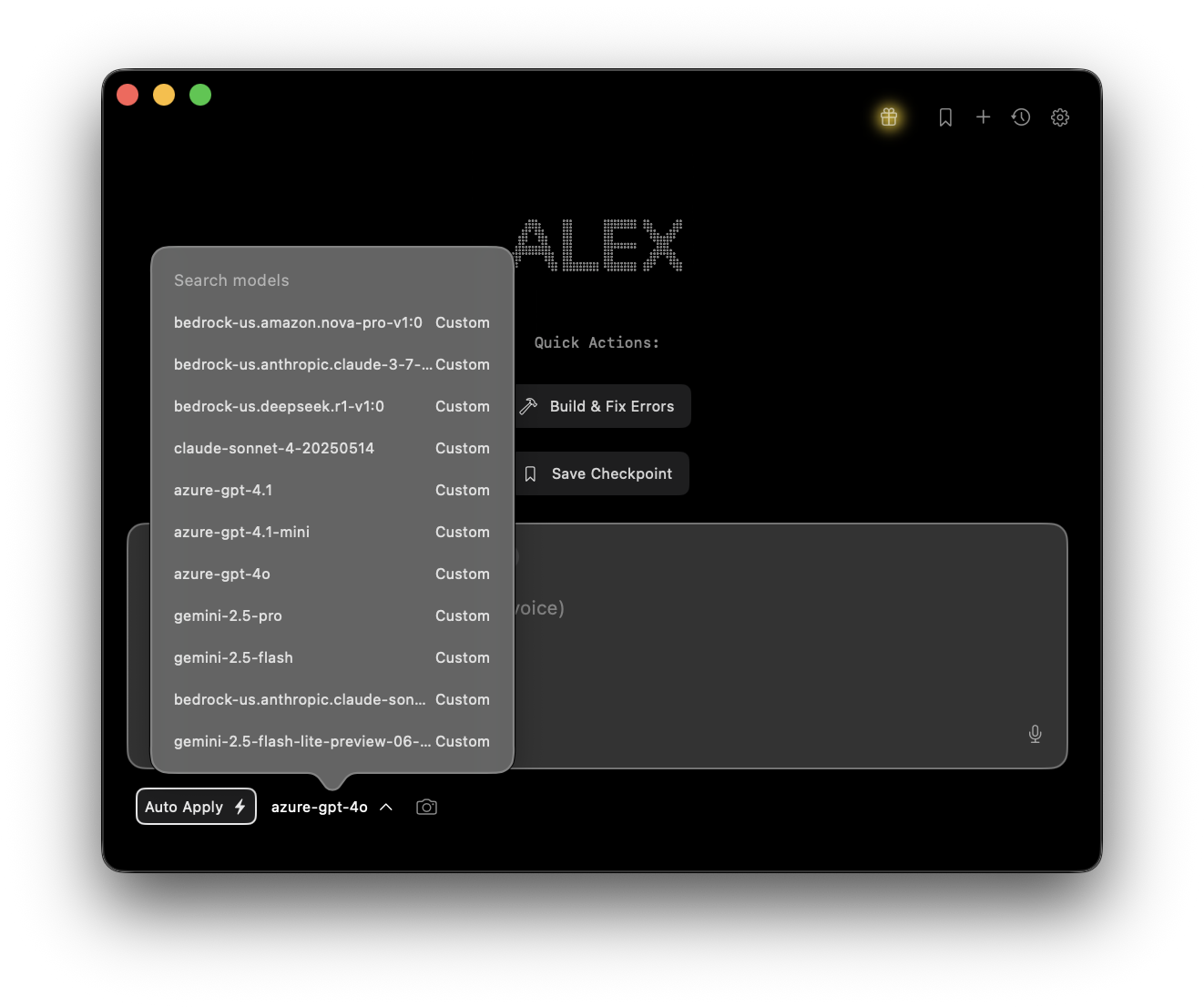

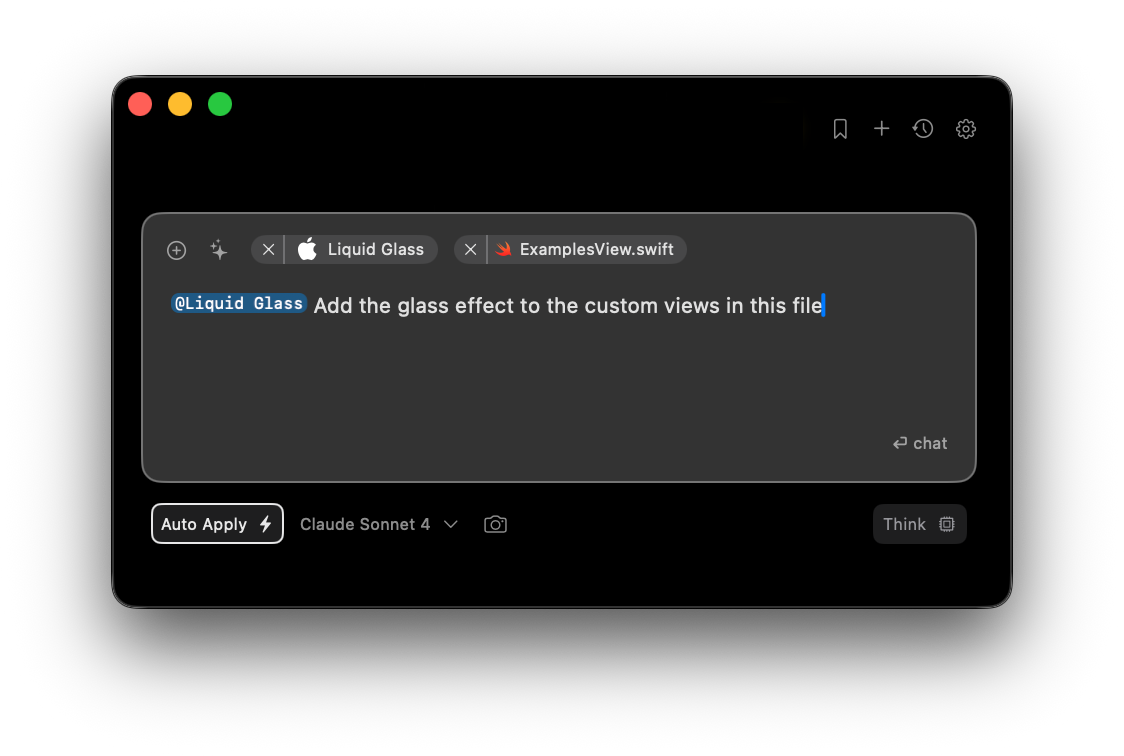

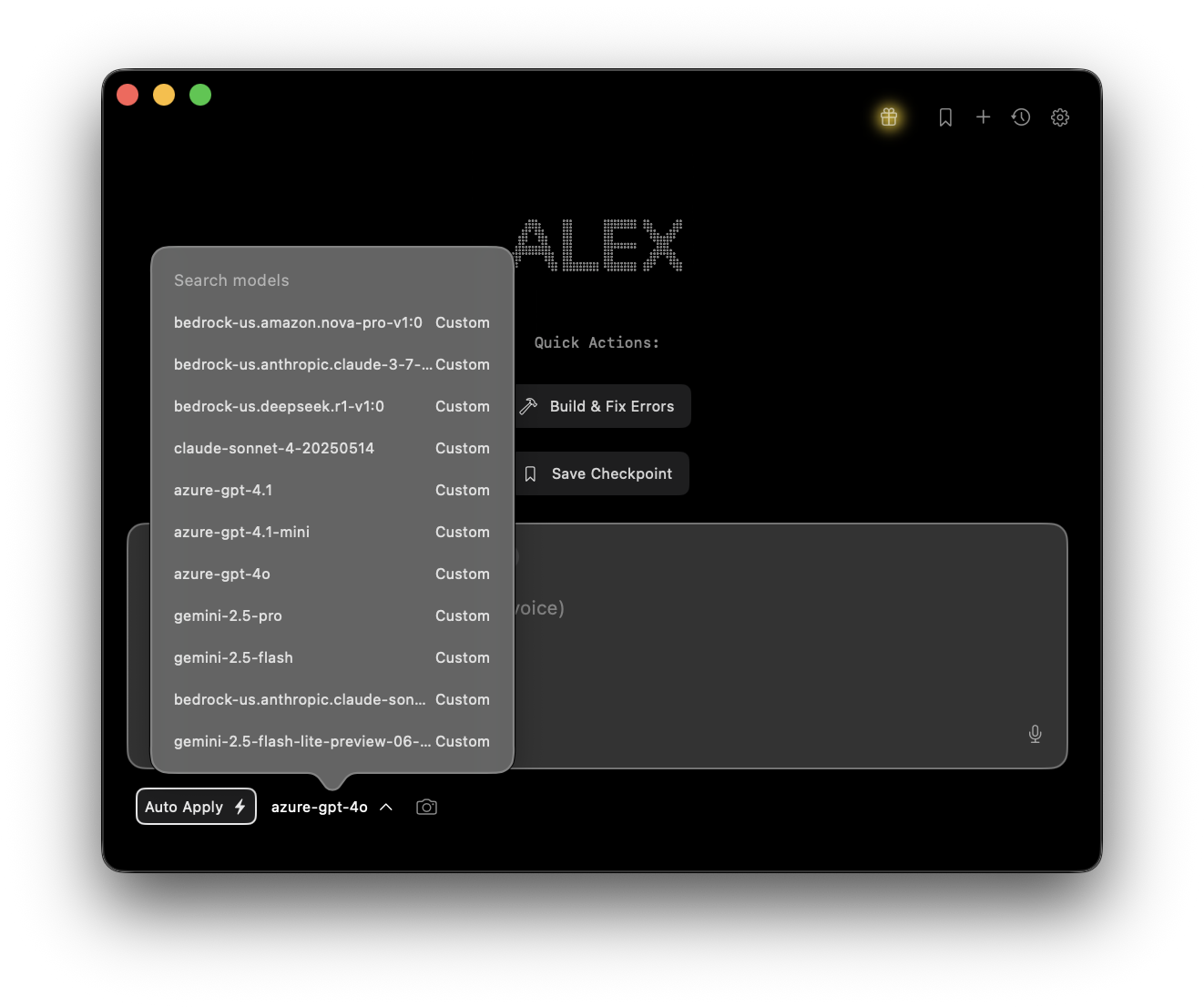

Agent mode is now always on! Claude Sonnet 4 is the recommended model for its superior code generation capabilities, but you can now use other supported models including:

* Claude 3.5 Sonnet

* Gemini 2.5 Pro

* Gemini 2.5 Flash

* OpenAI o3

* OpenAI o4 Mini

* OpenAI GPT 4.1

* DeepSeek R1

* DeepSeek V3 (03.24)

Choose your preferred model from the model selector while maintaining all agent capabilities.

## Quick Actions

One-click automatic build, error detection, and fix application. Alex continuously rebuilds until your project compiles successfully.

Let agents handle repetitive tasks while you focus on core development work.

Switch between different AI models while maintaining agent capabilities and context.

## Understanding Agents

Agents work best by:

* Learning your project structure and patterns

* Maintaining contextual understanding across sessions

* **Automatically building and running your app after changes**

* **Detecting and fixing compilation errors in a continuous loop**

* **Taking screenshots for verification and debugging**

You can make agents more effective over time by providing more notes about your project and coding preferences. Learn more about project notes [here](/chat/context/memory).

## Getting Started

Press **Command + Shift + A** to toggle auto-apply for code changes. When enabled, code suggestions will be automatically applied to your files.

Use voice or text to describe your project:

```text
"This is an iOS app using SwiftUI and MVVM architecture.
The main features include user authentication and data persistence."
```

The agent will:

* Analyze project structure

* Study coding patterns

* Build contextual understanding

* Use regex search to find relevant code patterns
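To give a sense of what a regex search over source code involves, here is a minimal sketch using Foundation's `NSRegularExpression`. The helper `lines(matching:in:)`, the sample source, and the pattern are hypothetical; how the agent actually runs its searches is not documented here.

```swift
import Foundation

/// Returns the 1-based line numbers in `source` whose text matches `pattern`.
func lines(matching pattern: String, in source: String) throws -> [Int] {
    let regex = try NSRegularExpression(pattern: pattern)
    var result: [Int] = []
    for (index, line) in source.components(separatedBy: "\n").enumerated() {
        let range = NSRange(line.startIndex..., in: line)
        if regex.firstMatch(in: line, options: [], range: range) != nil {
            result.append(index + 1)
        }
    }
    return result
}

// Sample source text to search (treated as plain text, not compiled).
let sample = """
import SwiftUI

final class LoginViewModel: ObservableObject {
    @Published var email = ""
}

struct LoginView: View {
    @StateObject private var model = LoginViewModel()
}
"""

// Find class declarations that conform to ObservableObject.
let hits = (try? lines(matching: #"class\s+\w+\s*:\s*.*ObservableObject"#, in: sample)) ?? []
print(hits) // prints [3]
```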

## Automatic Build & Run

Alex Sidebar's agent automatically builds and runs your project after making changes, creating a seamless development experience.

While the agent handles most errors automatically, always review the final changes to ensure they align with your project's requirements.

## Best Practices

* Provide specific requirements and context for best results

* Break complex tasks into smaller, manageable steps

* Always review and test agent-suggested modifications

* Keep project notes updated for better agent performance

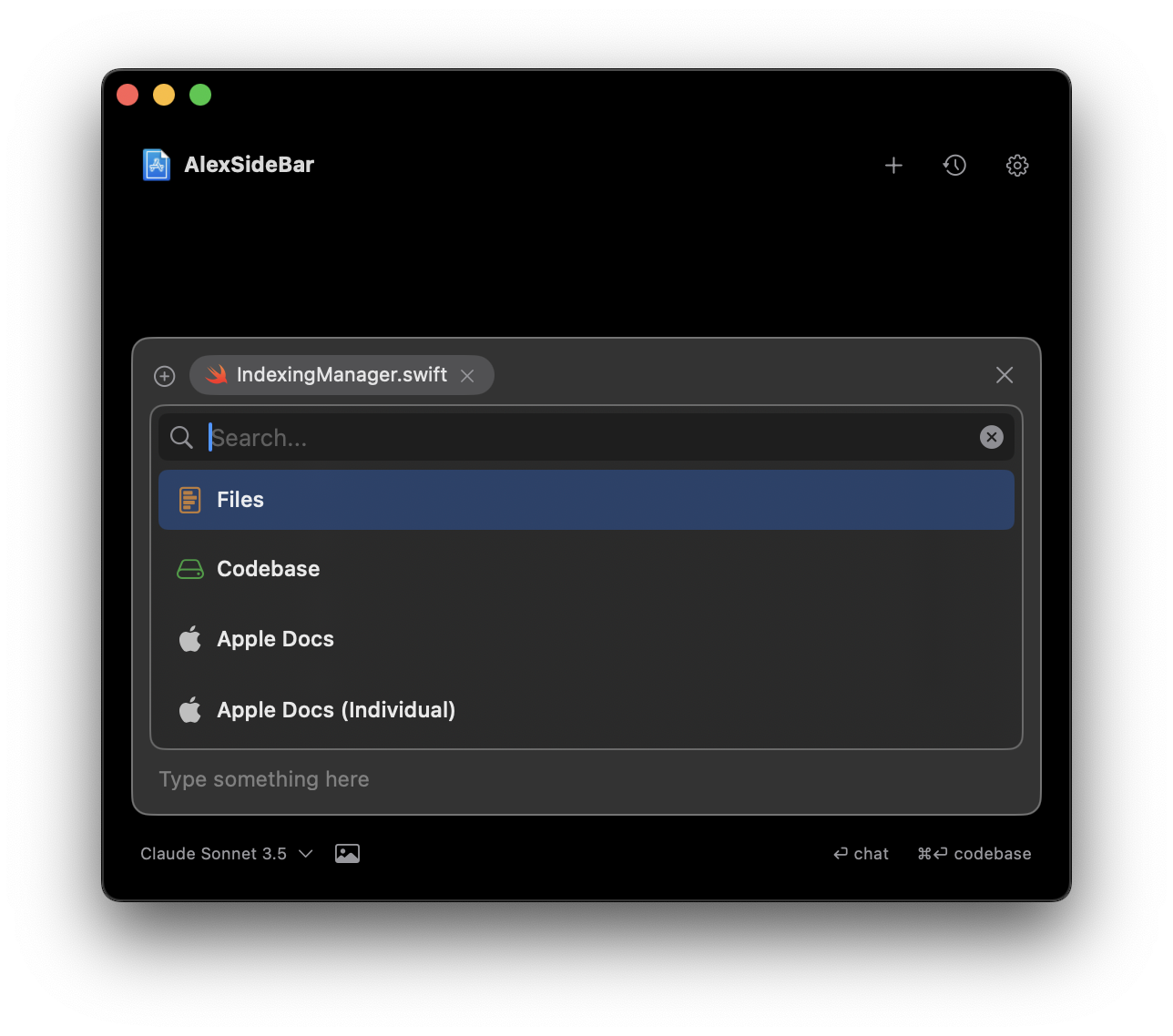

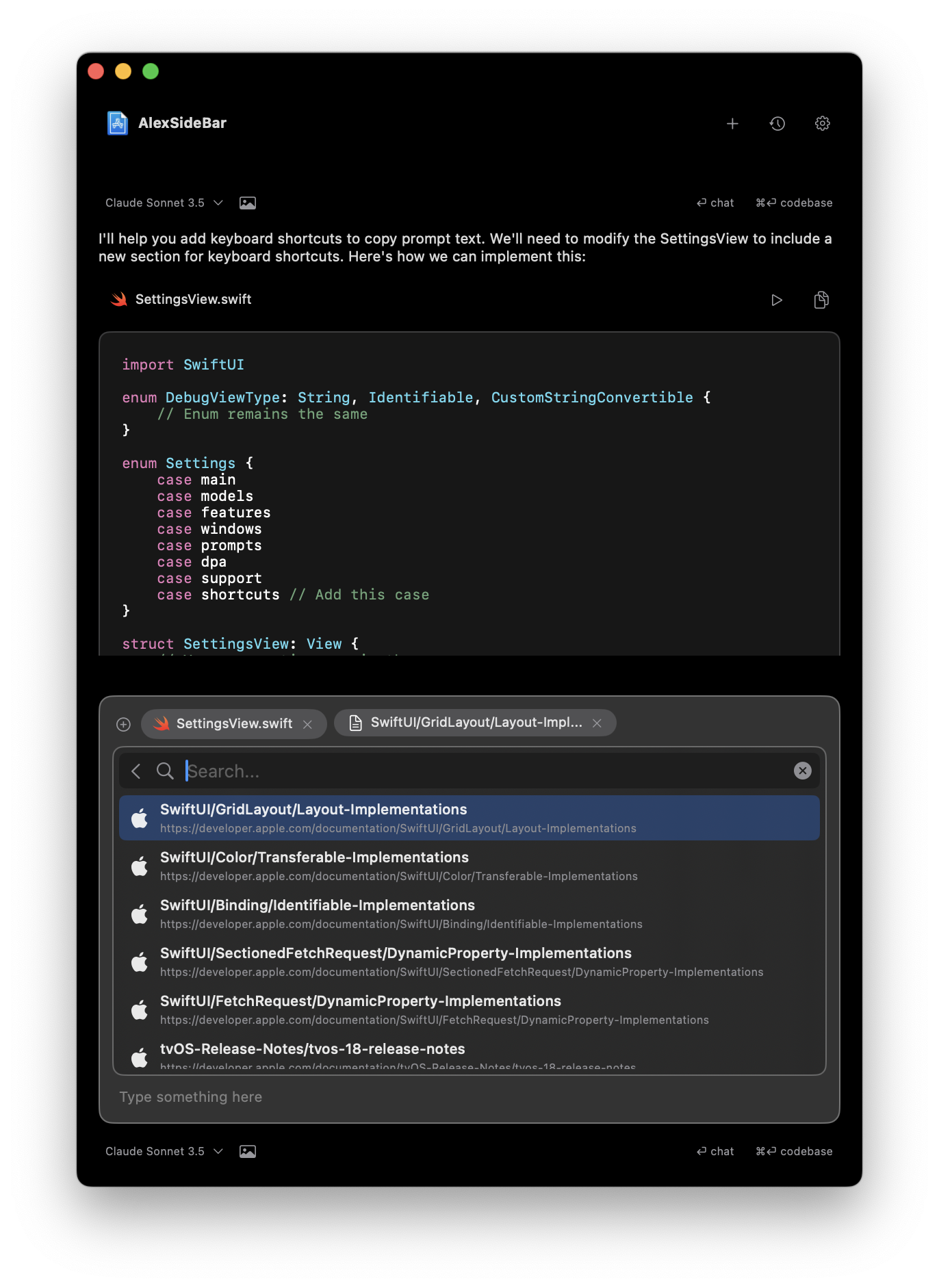

# Commands

Source: https://alexcode.ai/docs/chat/context/commands

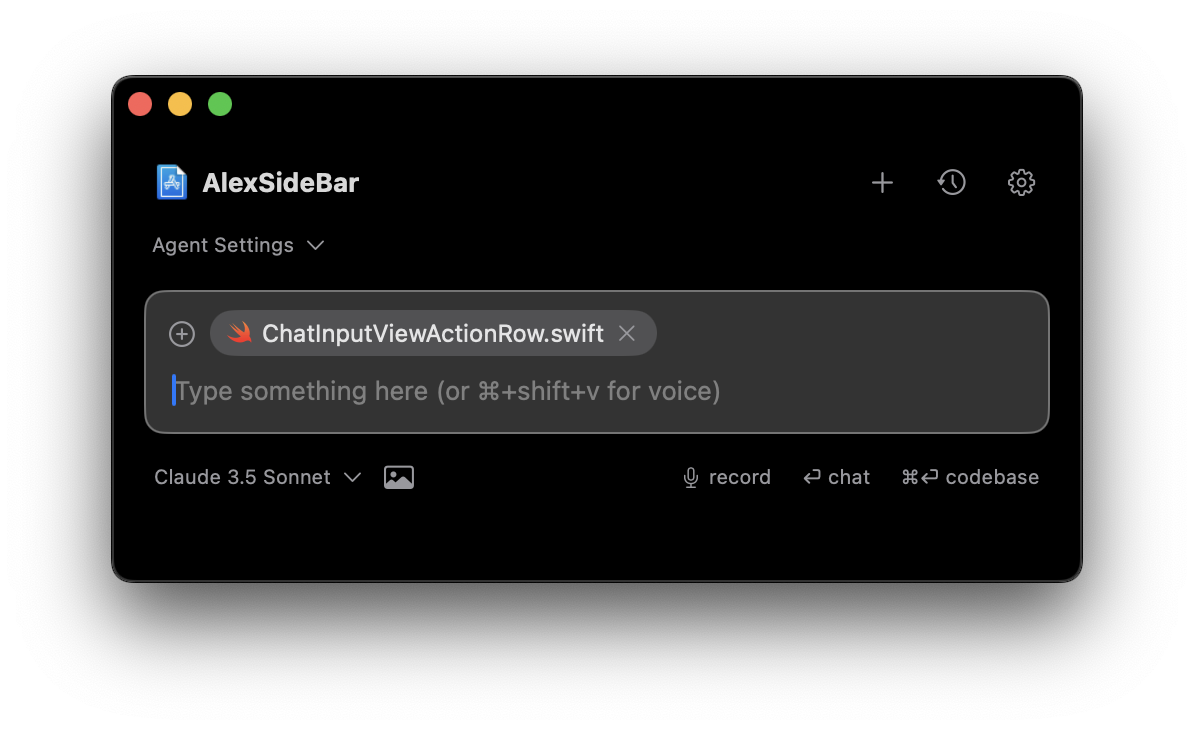

Available commands and shortcuts in Alex Sidebar's chat interface

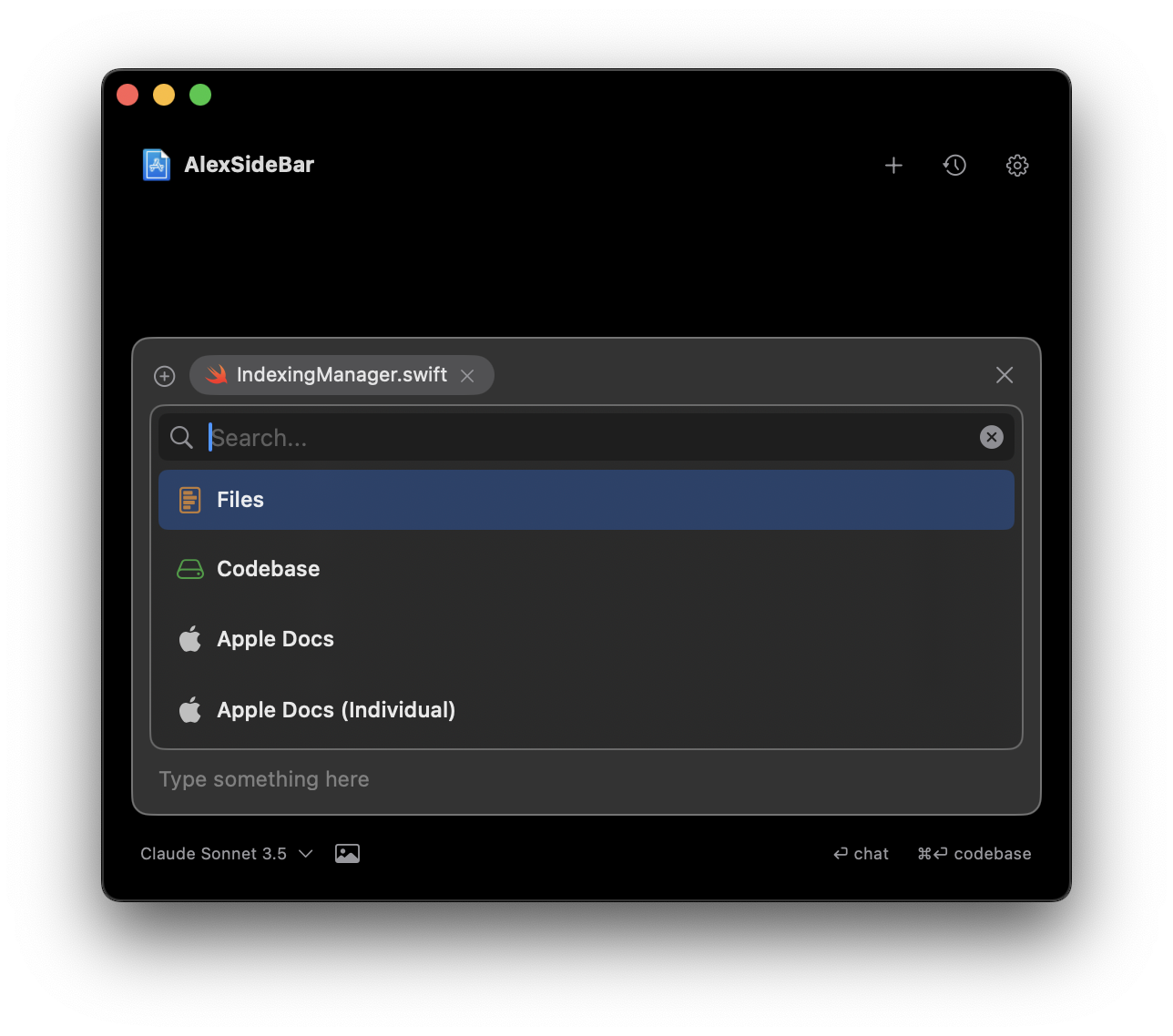

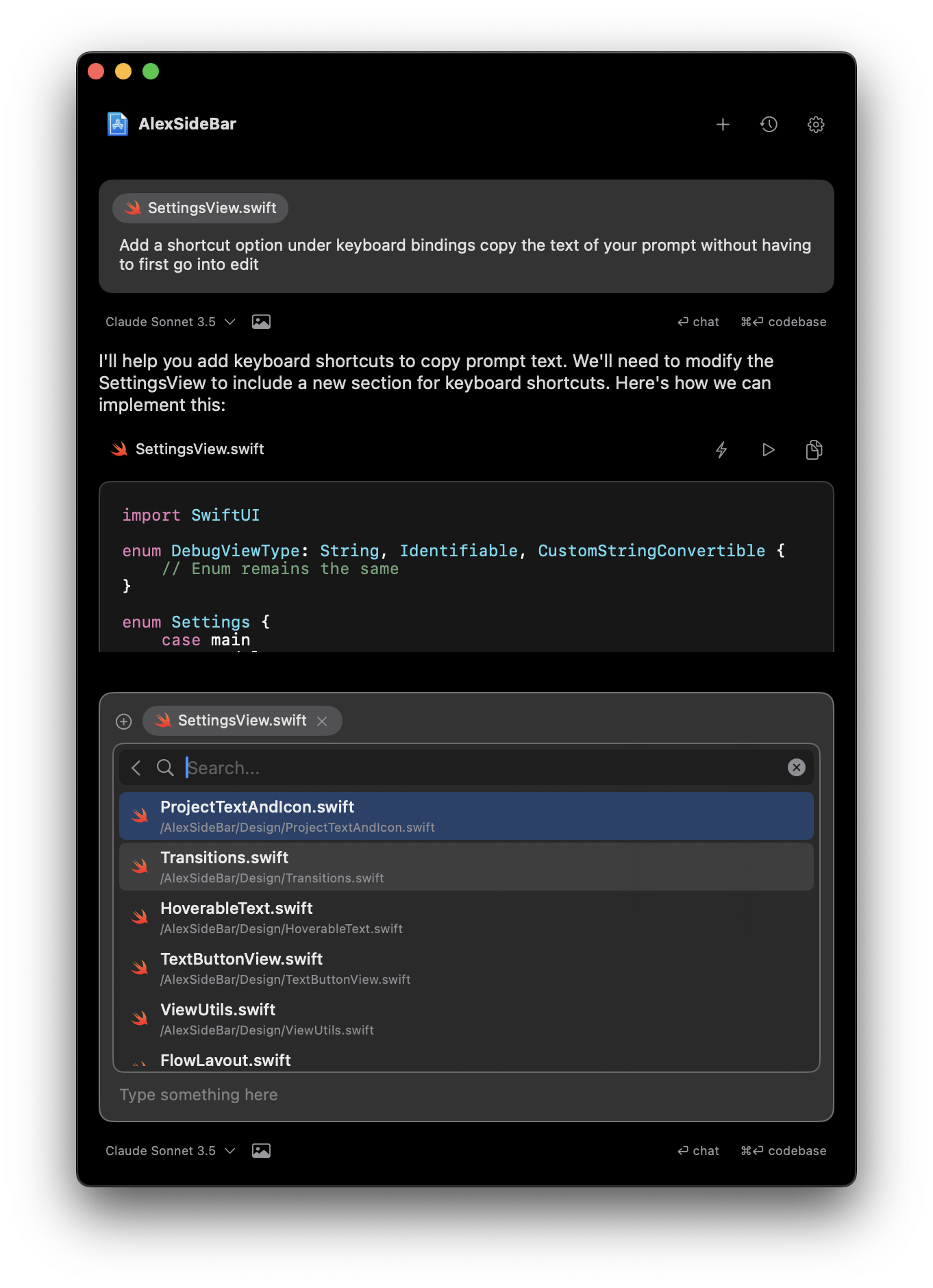

## Available Commands

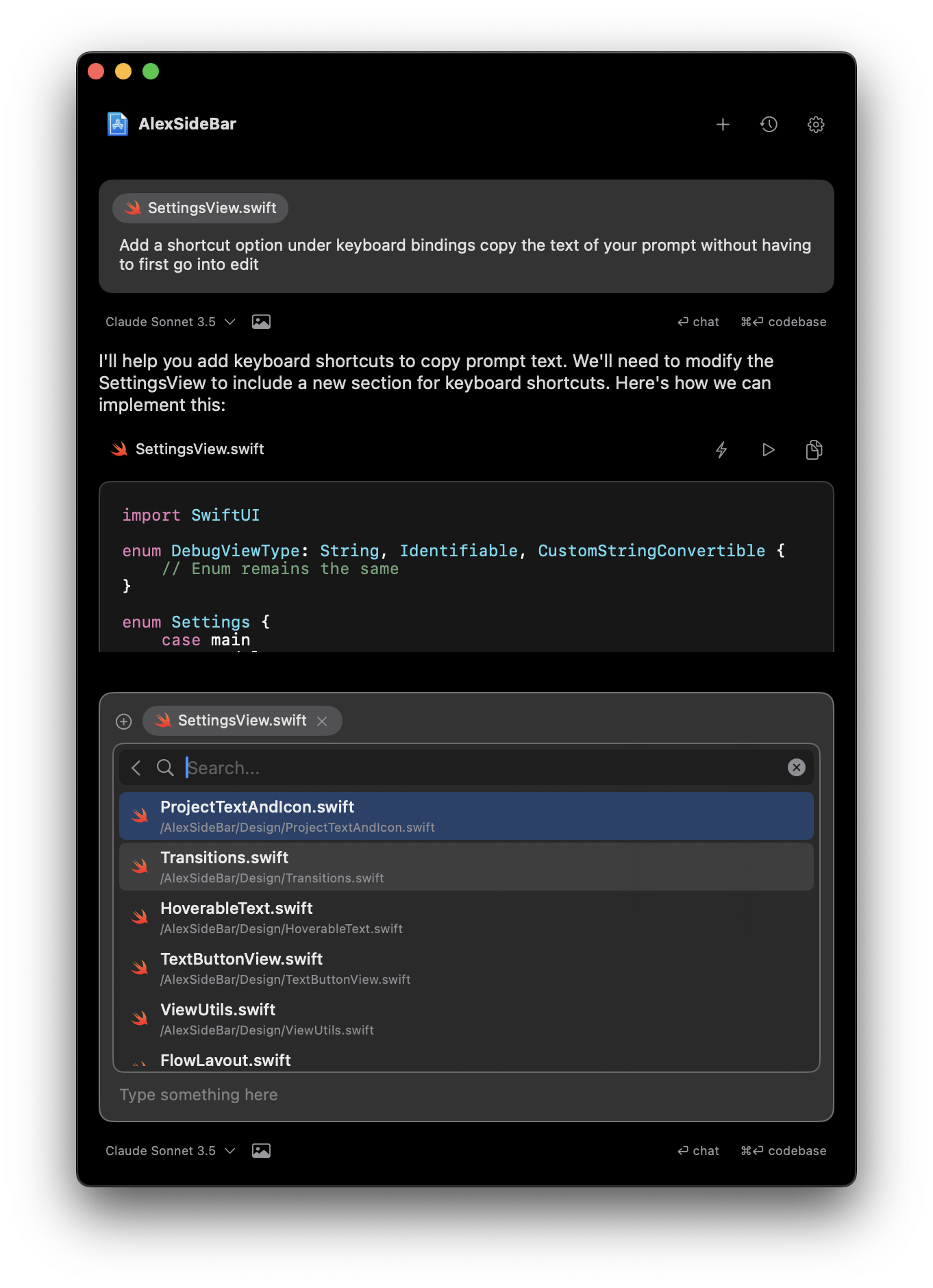

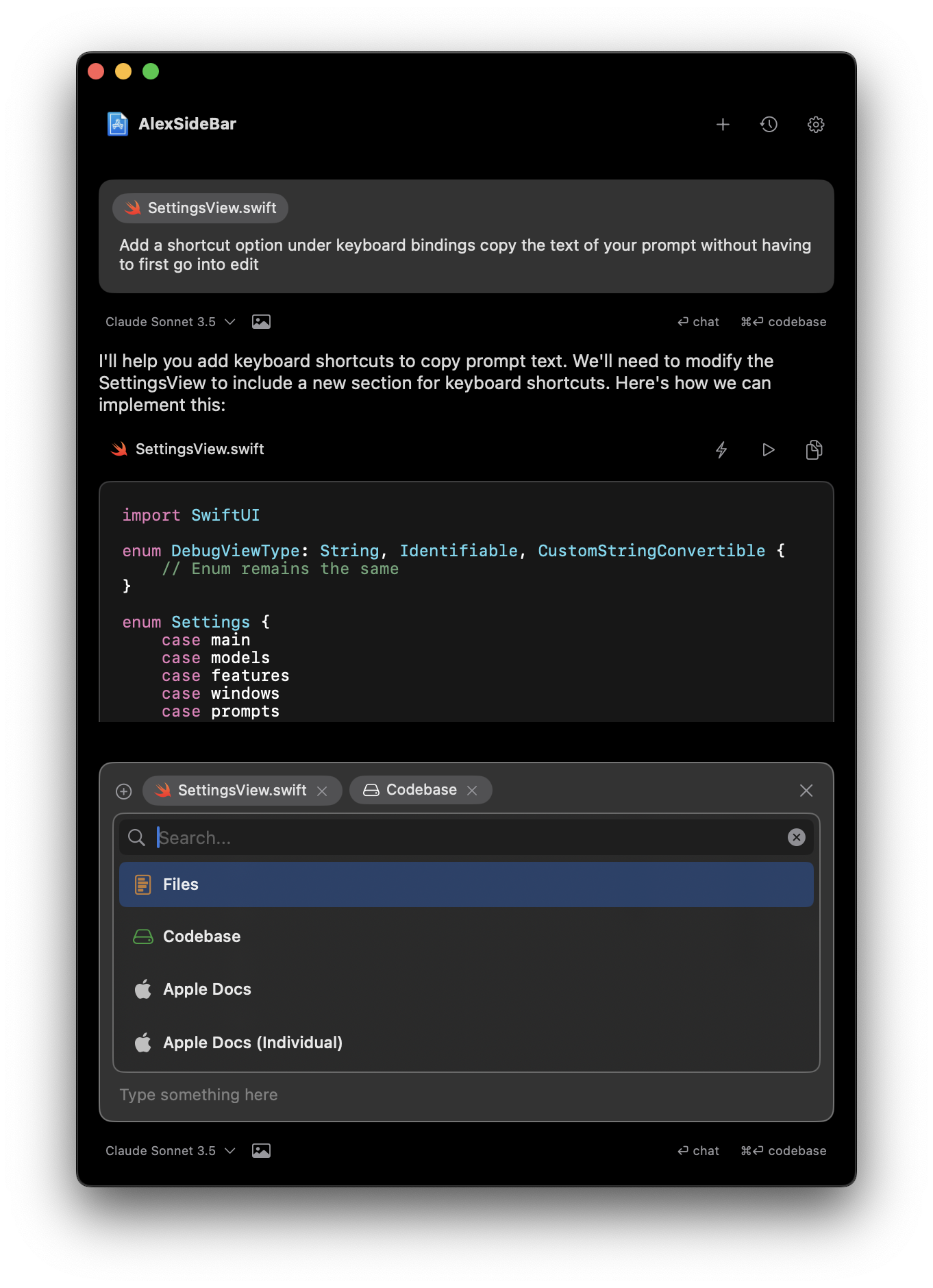

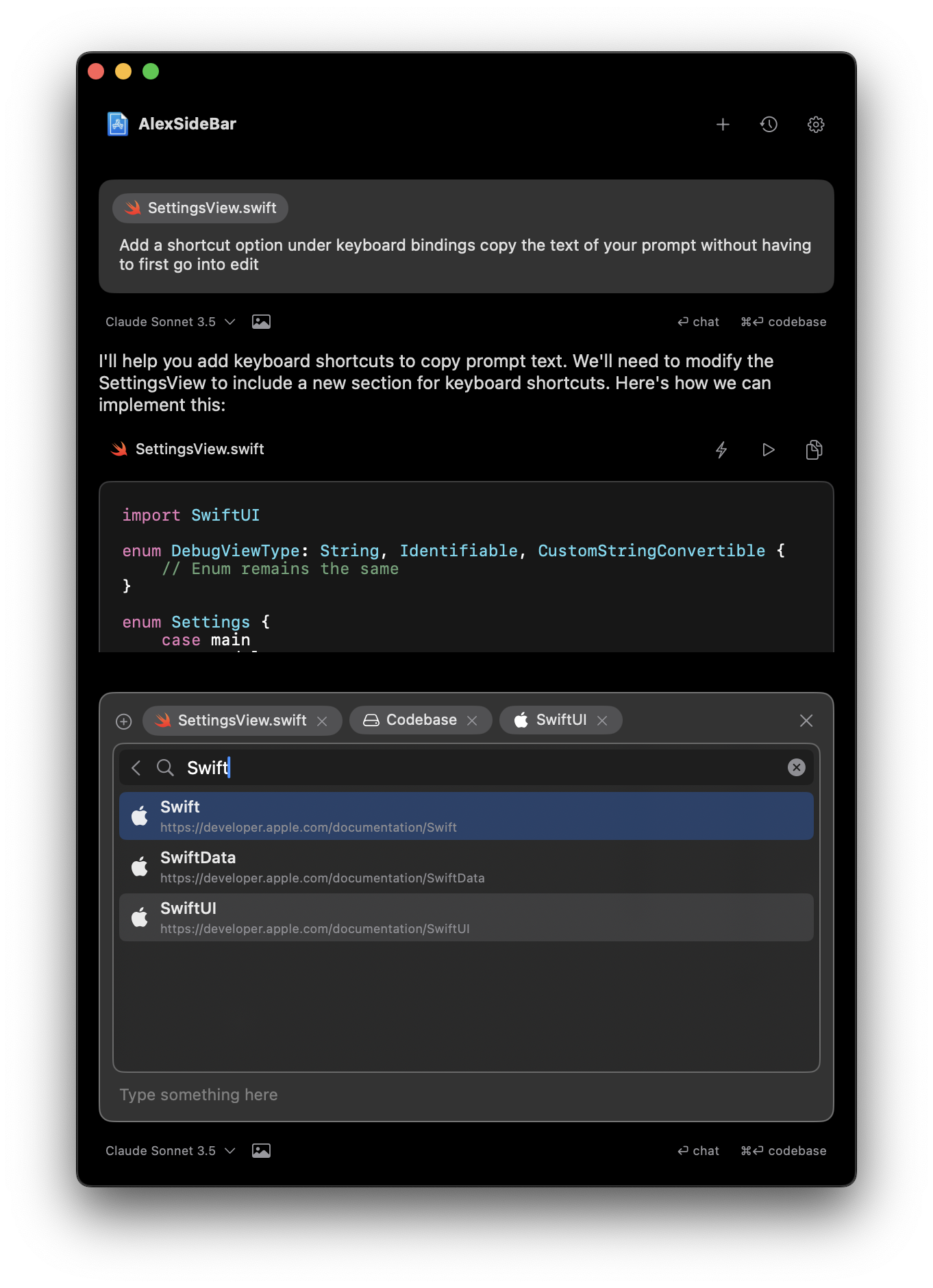

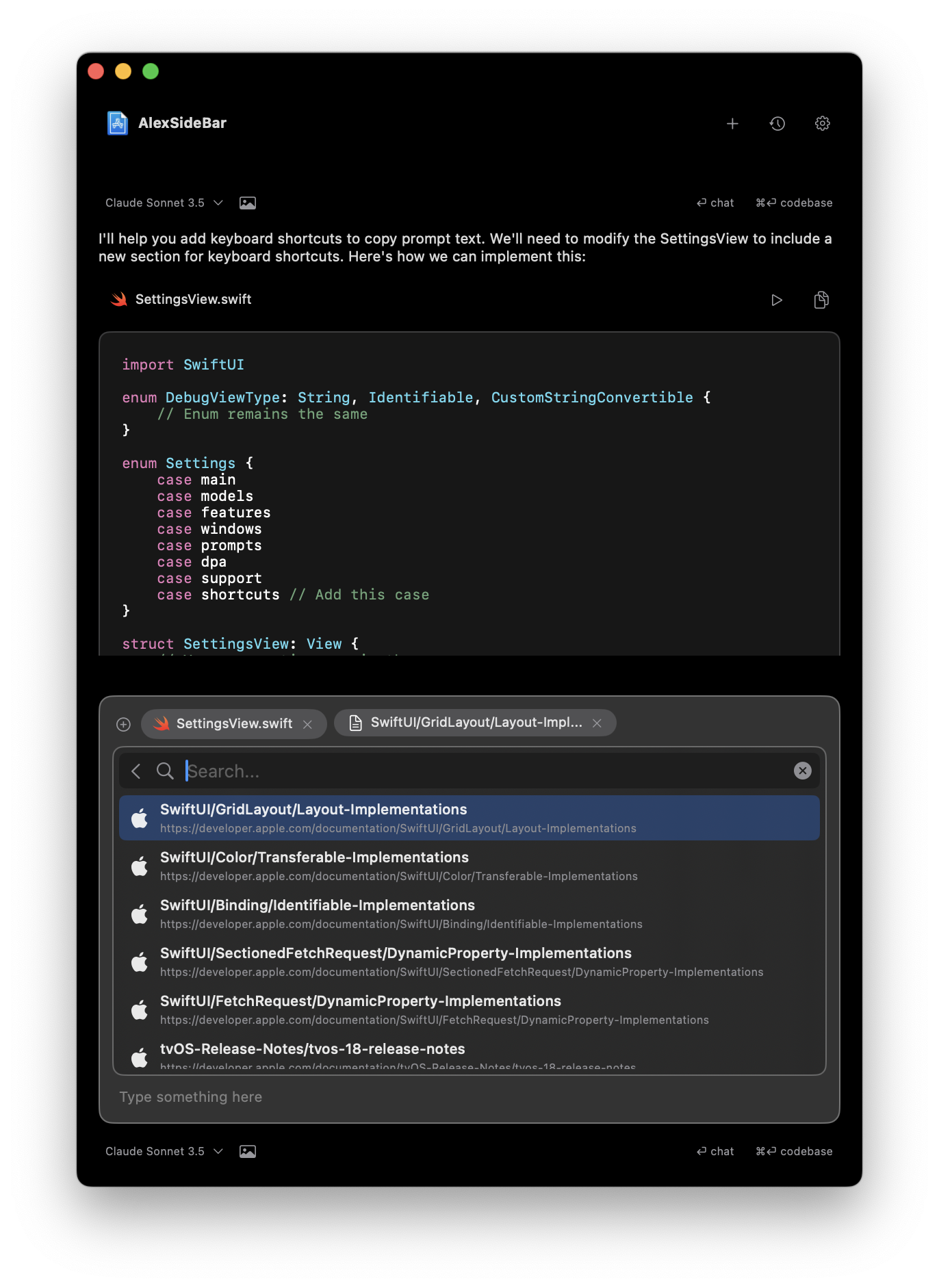

Access and reference files in your project directly from the chat interface.

Search and reference your entire codebase context during chat conversations.

Search and reference official Apple documentation without leaving the chat.

Access specific Apple documentation entries for targeted reference.

## Using Commands

Type `@` or click the + button in the chat interface to see available commands.

Use the search bar at the top to filter available commands and find what you need quickly.

Commands help you efficiently access resources and context without leaving your chat workflow.

## Files Command

Access your project files directly within the chat interface. Browse, search, and reference specific files during your conversations.

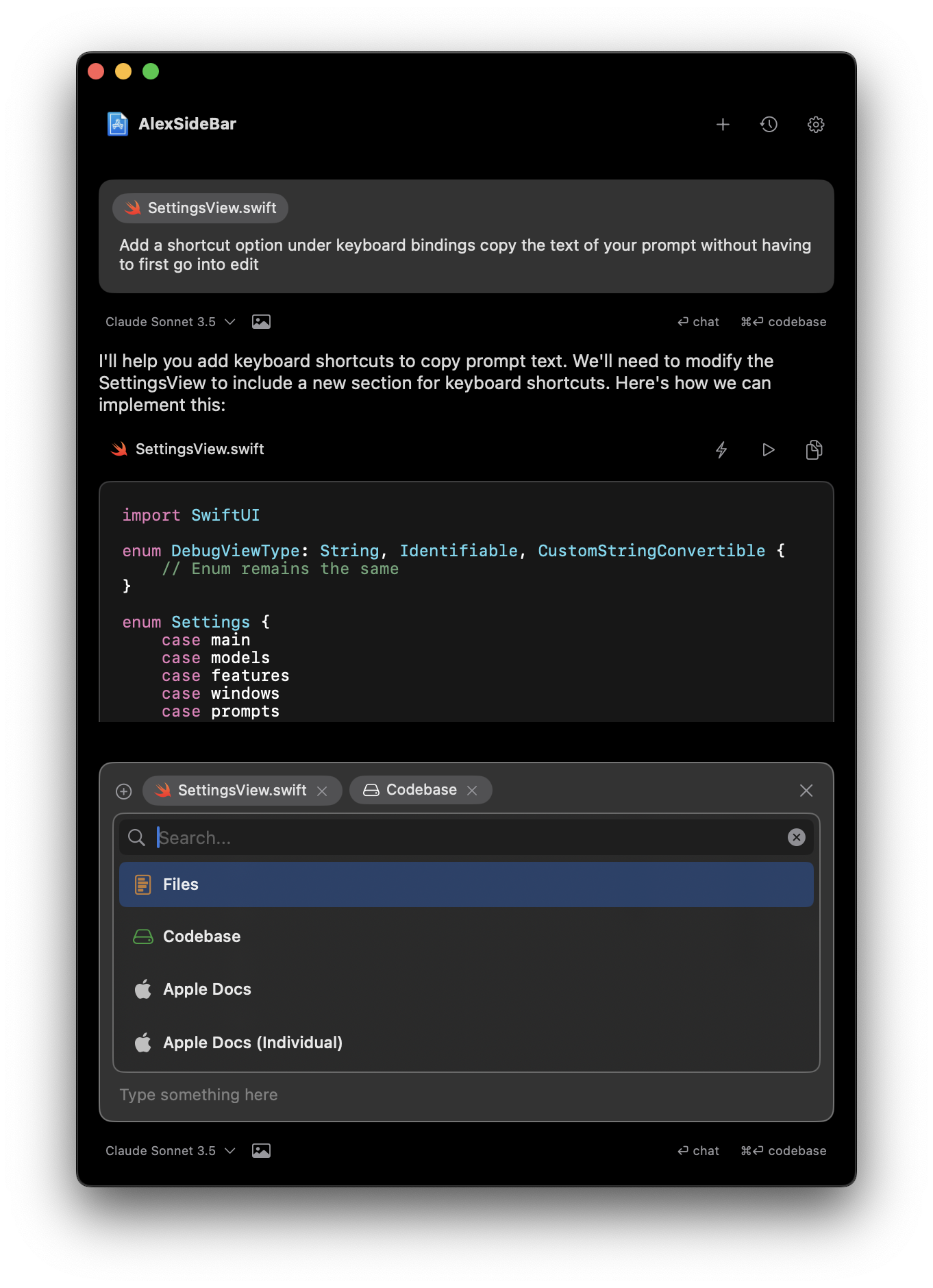

## Codebase Command

Search through your entire codebase context, find specific implementations, and reference code snippets in your discussions.

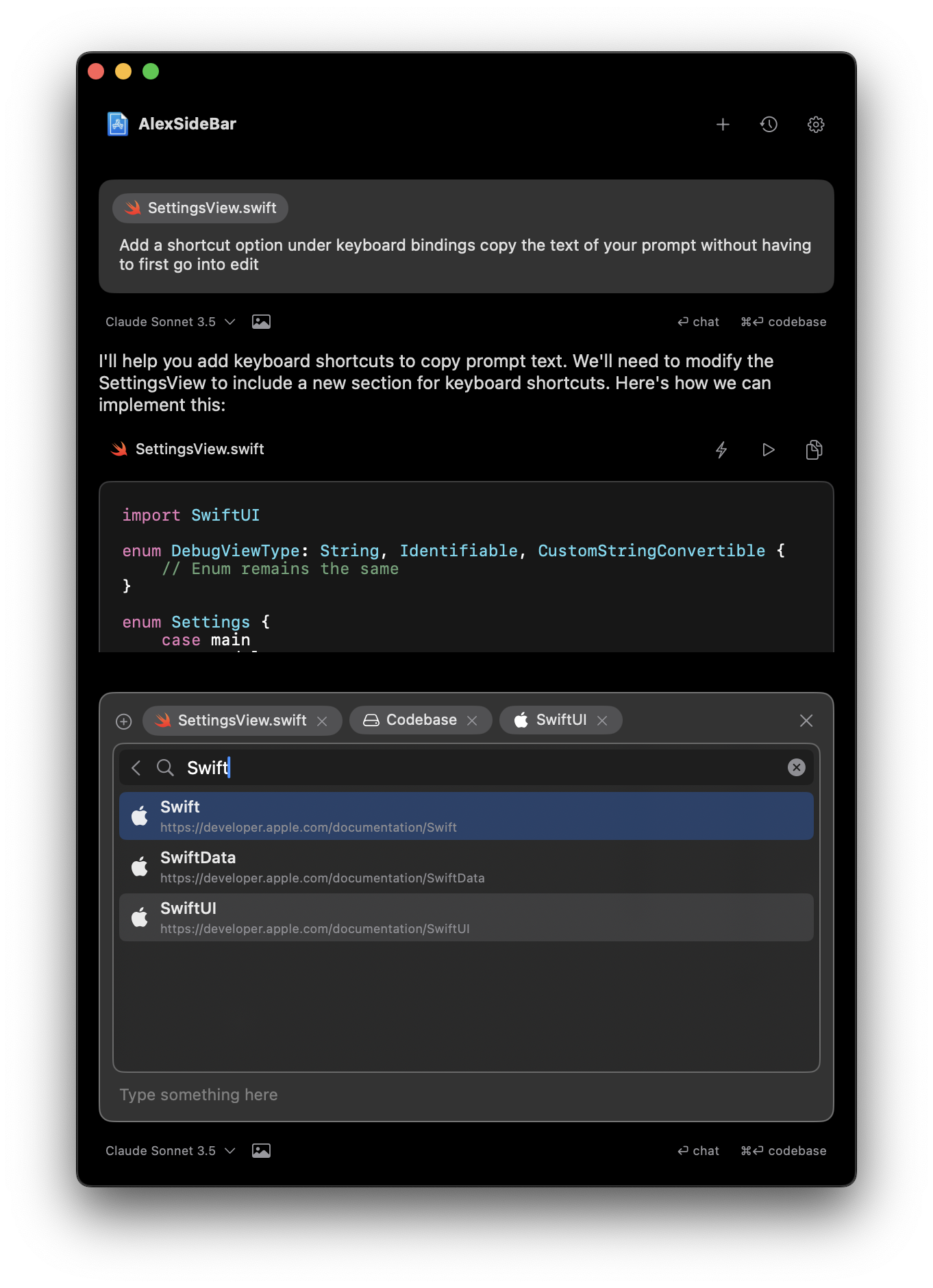

## Apple Documentation Command

Search and browse through the complete Apple documentation library without switching contexts or leaving your chat.

## Individual Apple Documentation Command

Access and reference specific documentation entries, methods, or APIs for technical discussions.

# Project Memory

Source: https://alexcode.ai/docs/chat/context/memory

Remember context across conversations with Alex

Project Memory lets Alex remember context across conversations when you use phrases like "remember this" or "keep this in mind". This helps maintain continuity and provides more contextually relevant responses over time.

## Understanding Project Memory

When enabled, you can ask Alex to remember context across conversations by using phrases like:

* "Remember this"

* "Keep this in mind"

* "Remember that..."

* "Take note of..."

This allows Alex to:

* Maintain important context between chat sessions

* Remember project-specific requirements and patterns

* Provide more consistent and personalized responses

* Reference previously discussed solutions

## Managing Project Memory

### Accessing Memory Settings

To manage your project memory:

1. Open Settings (gear icon)

2. Navigate to **Tools & Features** → **Project Memory**

3. Toggle **Enable Memory** on/off using the switch

### Searching Memories

Once memories are saved, you can:

* Use the search bar to find specific memories

* View all stored memories in the list

* Delete individual memories as needed

### Creating Memories

Simply tell Alex what to remember during any conversation:

* "Remember that our app uses SwiftUI and MVVM architecture"

* "Keep in mind that we're targeting iOS 17+"

* "Note that all API calls should use async/await"

## Privacy and Security

All project memory data is:

* Stored locally on your device

* Never shared with external services

* Fully under your control

## Best Practices

### What to Remember

Use project memory for:

* **Project architecture**: "Remember we're using MVVM with Combine"

* **Coding standards**: "Keep in mind we use 2-space indentation"

* **API details**: "Remember our API base URL is api.example.com"

* **Team preferences**: "Note that we prefer guard statements over if-let"

* **Dependencies**: "Remember we're using Firebase for authentication"
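As an illustration of how a stored preference like the guard-statement one above could shape suggestions, the sketch below writes the same logic both ways. The `User` type and both functions are hypothetical examples, not Alex output.

```swift
struct User {
    let name: String?
    let age: Int?
}

/// Preferred style in this example: unwrap with `guard` and exit early.
func greeting(for user: User) -> String {
    guard let name = user.name, !name.isEmpty else {
        return "Hello, guest"
    }
    guard let age = user.age, age >= 18 else {
        return "Hello, \(name)"
    }
    return "Hello, \(name) (adult account)"
}

/// The same logic with nested if-let, shown only for contrast.
func greetingNested(for user: User) -> String {
    if let name = user.name, !name.isEmpty {
        if let age = user.age, age >= 18 {
            return "Hello, \(name) (adult account)"
        }
        return "Hello, \(name)"
    }
    return "Hello, guest"
}
```

Both functions return the same strings; the guard version keeps the main path unindented, which is the kind of style detail a memory can capture.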

### Memory Management Tips

1. **Be specific**: Clear, specific memories are more useful than vague ones

2. **Update regularly**: Remove outdated memories to keep context relevant

3. **Use search**: Quickly find memories using the search feature

4. **Review periodically**: Check your stored memories to ensure they're still accurate

Project Memory is especially useful for long-term projects where maintaining consistent context across multiple coding sessions is important.

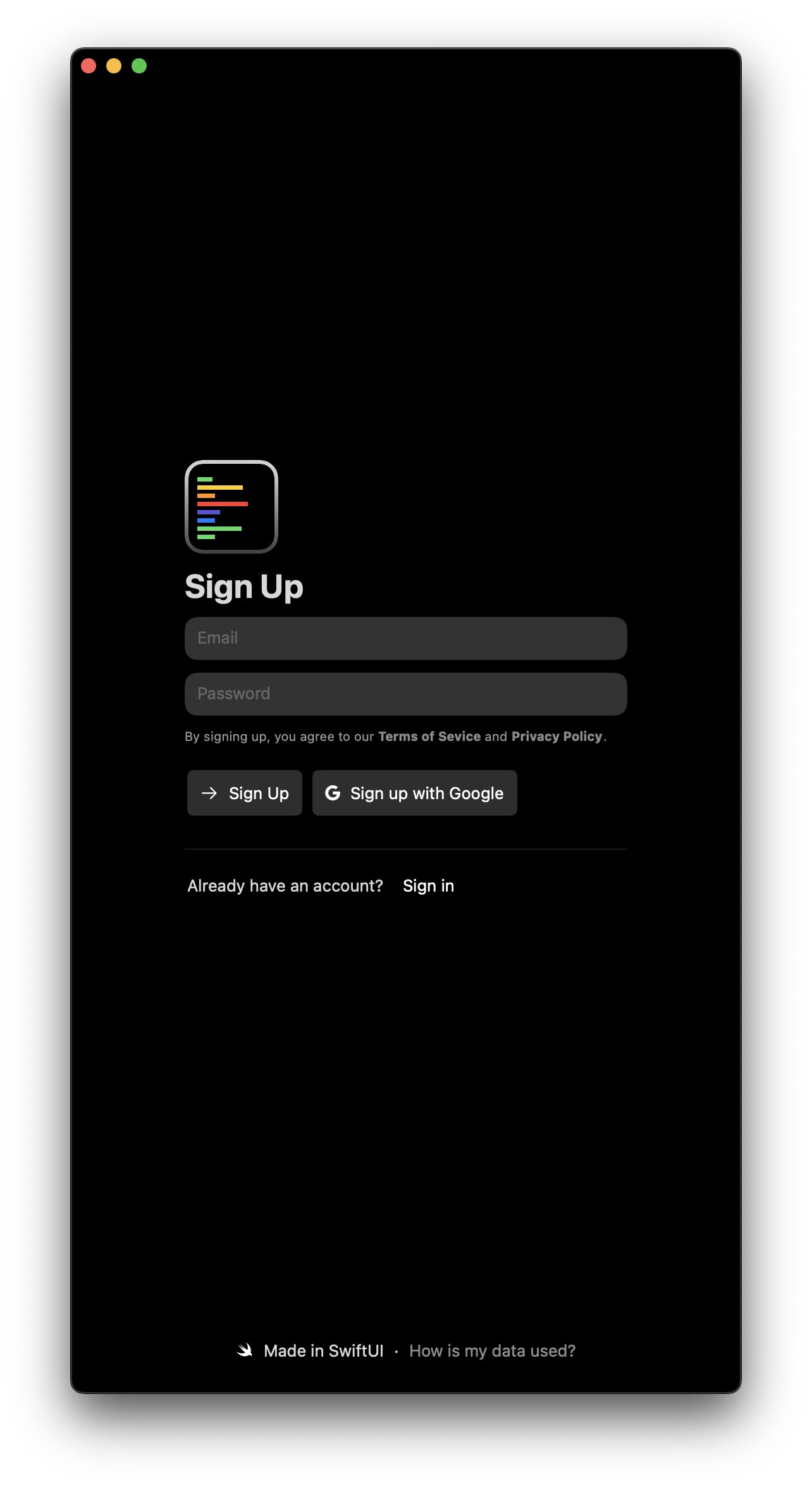

# Image to Code

Source: https://alexcode.ai/docs/chat/input-modes/image-to-code

Transform designs into code by dragging images into Alex Sidebar

The Image-to-Code feature allows you to quickly convert design mockups, screenshots, or UI elements into code. You can input images through multiple methods:

## Input Methods

Use **Command + Shift + 6** to:

* Take window screenshots

* Capture specific UI selections

* Quickly capture individual UI components

Drag images directly into the chat interface from:

* Finder

* Browser

* Design tools

## Screenshot Tool (⌘ + ⇧ + 6)

The built-in screenshot tool provides a quick way to capture UI elements and convert them to code. You can customize its behavior in the settings to match your workflow.

### Capture Options

Capture entire windows with a single click. Perfect for:

* Full screen interfaces

* Complete view hierarchies

* Dialog boxes and alerts

Select specific areas to capture. Ideal for:

* Individual UI components

* Specific sections of an interface

* Custom-sized regions

### Settings Customization

You can customize the screenshot tool in three ways:

#### 1. Quick Access Menu

When you click the screenshot button or use ⌘ + ⇧ + 6, a dropdown menu appears with three options:

* **Capture window**: Take a screenshot of the entire window

* **Capture selection**: Draw a selection box around the desired area

* **Attach file**: Choose a file from your system instead

#### 2. Default Behavior Settings

You can set your preferred default screenshot behavior in Settings:

1. Open Settings

2. Navigate to "Chat Settings"

3. Look for "Default Screenshot Behavior" under the chat options

4. Choose between:

   * Capture window (automatically capture the entire window)

   * Capture selection (start with selection tool)

   * Show options (always show the dropdown menu)

#### 3. Customize Keyboard Shortcut

You can change the default ⌘ + ⇧ + 6 shortcut:

1. Open Settings

2. Go to "Chat Settings"

3. Find "Take Screenshot" in the list

4. Click on the current shortcut (⌘ + ⇧ + 6) to change it

5. Press your desired key combination

Even with a default behavior set, you can always access other capture modes through the dropdown menu or by using your configured keyboard shortcut.

### Best Practices for Screenshots

1. **Clean Captures**

   * Close unnecessary windows or tabs

   * Hide sensitive information

   * Ensure the UI is in its final state

2. **Component Focus**

   * Zoom in for small components

   * Include padding for context

   * Capture in the correct state (hover, active, etc.)

## Getting Started

Supported formats:

* PNG

* JPEG

* Screenshots directly from Xcode/Figma

For best results, ensure your images clearly show the UI elements you want to convert.

Choose your preferred method:

1. Use **Command + Shift + 6** to take a screenshot

2. Drag and drop images into the chat

3. Copy-paste images directly

The eligible model will analyze the images and generate corresponding code. You can:

* Copy the code directly

* Request modifications

* Ask for explanations of specific parts

## Best Practices

### Image Preparation

* Use high-resolution images for better accuracy

* Crop to include only relevant UI elements

* Ensure good contrast between elements

* Describe any specific styling details you want to capture in your prompt

### Code Generation

* Start with simple components before complex layouts

* Review generated code for customization needs

* Use follow-up questions to refine the output

* Consider breaking complex UIs into smaller pieces

For complex designs, try generating code for individual components first, then combine them into the final layout.

## Example Workflows

### Basic UI Component

1. Take a screenshot of a button or card design

2. Drag the image into Alex Sidebar

```swift
// Generated code example
import SwiftUI

struct CustomButton: View {
    var body: some View {
        Button(action: {}) {
            Text("Get Started")
                .font(.headline)
                .foregroundColor(.white)
                .padding(.horizontal, 24)
                .padding(.vertical, 12)
                .background(Color.blue)
                .cornerRadius(8)
        }
    }
}
```

### Complex Layout

Identify main components in your layout. You can:

* Split your design into logical sections

* Take screenshots of individual components

* Prepare multiple images for different parts

You have two options:

* Drag multiple component images at once to generate all parts simultaneously

* Generate code for each major section individually

Ask Alex to help combine components into a cohesive layout. You can:

* Request adjustments to match the overall design

* Fine-tune spacing and alignment

* Add container views and navigation elements

## Troubleshooting

### Common Issues

* Ensure image quality is high

* Try cropping closer to the component

* Use screenshots rather than photos

* Provide additional context in your prompt

* Specify exact colors if known

* Include style guide references

* Ask for specific modifications

* Use follow-up questions for refinement

* Break complex layouts into sections

* Specify constraints explicitly

* Ask for alternative layout approaches

* Provide reference screenshots

## Tips for Better Results

1. **Start Simple**: Begin with basic components before attempting complex layouts

2. **Iterate**: Use follow-up questions to refine the generated code

3. **Combine Methods**: Use both image and text descriptions for better results

4. **Review Output**: Always review and test generated code in your project

Remember that Image-to-Code is a starting point. You can always ask Alex to modify the generated code to better match your needs.

# Voice Mode

Source: https://alexcode.ai/docs/chat/input-modes/voice-mode

Use voice commands to interact with Alex Sidebar more naturally

## Overview

Voice Mode allows you to interact with Alex Sidebar using speech instead of typing, making code discussions more natural and efficient. This feature is particularly useful when you need to explain complex problems or want to reduce typing fatigue.

# Project Memory

Source: https://alexcode.ai/docs/chat/context/memory

Remember context across conversations with Alex

Project Memory enables Alex to remember context across conversations by using terms like "remember this" or "keep this in mind". This helps maintain continuity and provides more contextually relevant responses over time.

## Understanding Project Memory

When enabled, you can ask Alex to remember context across conversations by using phrases like:

* "Remember this"

* "Keep this in mind"

* "Remember that..."

* "Take note of..."

This allows Alex to:

* Maintain important context between chat sessions

* Remember project-specific requirements and patterns

* Provide more consistent and personalized responses

* Reference previously discussed solutions

## Managing Project Memory

### Accessing Memory Settings

To manage your project memory:

1. Open Settings (gear icon)

2. Navigate to **Tools & Features** → **Project Memory**

3. Toggle **Enable Memory** on/off using the switch

### Searching Memories

Once memories are saved, you can:

* Use the search bar to find specific memories

* View all stored memories in the list

* Delete individual memories as needed

### Creating Memories

Simply tell Alex what to remember during any conversation:

* "Remember that our app uses SwiftUI and MVVM architecture"

* "Keep in mind that we're targeting iOS 17+"

* "Note that all API calls should use async/await"

## Privacy and Security

All project memory data is:

* Stored locally on your device

* Never shared with external services

* Fully under your control

## Best Practices

### What to Remember

Use project memory for:

* **Project architecture**: "Remember we're using MVVM with Combine"

* **Coding standards**: "Keep in mind we use 2-space indentation"

* **API details**: "Remember our API base URL is api.example.com"

* **Team preferences**: "Note that we prefer guard statements over if-let"

* **Dependencies**: "Remember we're using Firebase for authentication"

### Memory Management Tips

1. **Be specific**: Clear, specific memories are more useful than vague ones

2. **Update regularly**: Remove outdated memories to keep context relevant

3. **Use search**: Quickly find memories using the search feature

4. **Review periodically**: Check your stored memories to ensure they're still accurate

Project Memory is especially useful for long-term projects where maintaining consistent context across multiple coding sessions is important.

# Image to Code

Source: https://alexcode.ai/docs/chat/input-modes/image-to-code

Transform designs into code by dragging images into Alex Sidebar

The Image-to-Code feature allows you to quickly convert design mockups, screenshots, or UI elements into code. You can input images through multiple methods:

## Input Methods

Use **Command + Shift + 6** to:

* Take window screenshots

* Capture specific UI selections

* Perfect for quick UI component captures

Drag images directly into the chat interface from:

* Finder

* Browser

* Design tools

## Screenshot Tool (⌘ + ⇧ + 6)

The built-in screenshot tool provides a quick way to capture UI elements and convert them to code. You can customize its behavior in the settings to match your workflow.

### Capture Options

Capture entire windows with a single click. Perfect for:

* Full screen interfaces

* Complete view hierarchies

* Dialog boxes and alerts

Select specific areas to capture. Ideal for:

* Individual UI components

* Specific sections of an interface

* Custom-sized regions

### Settings Customization

You can customize the screenshot tool in three ways:

#### 1. Quick Access Menu

When you click the screenshot button or use ⌘ + ⇧ + 6, a dropdown menu appears with three options:

* **Capture window**: Take a screenshot of the entire window

* **Capture selection**: Draw a selection box around the desired area

* **Attach file**: Choose a file from your system instead

#### 2. Default Behavior Settings

You can set your preferred default screenshot behavior in Settings:

1. Open Settings

2. Navigate to "Chat Settings"

3. Look for "Default Screenshot Behavior" under the chat options

4. Choose between:

* Capture window (automatically capture the entire window)

* Capture selection (start with selection tool)

* Show options (always show the dropdown menu)

#### 3. Customize Keyboard Shortcut

You can change the default ⌘ + ⇧ + 6 shortcut:

1. Open Settings

2. Go to "Chat Settings"

3. Find "Take Screenshot" in the list

4. Click on the current shortcut (⌘ + ⇧ + 6) to change it

5. Press your desired key combination

Even with a default behavior set, you can always access other capture modes through the dropdown menu or by using your configured keyboard shortcut.

### Best Practices for Screenshots

1. **Clean Captures**

* Close unnecessary windows or tabs

* Hide sensitive information

* Ensure the UI is in its final state

2. **Component Focus**

* Zoom in for small components

* Include padding for context

* Capture in the correct state (hover, active, etc.)

## Getting Started

Supported formats:

* PNG

* JPEG

* Screenshots directly from Xcode/Figma

For best results, ensure your images clearly show the UI elements you want to convert.

Choose your preferred method:

1. Use **Command + Shift + 6** to take a screenshot

2. Drag and drop images into the chat

3. Copy-paste images directly

The eligible model will analyze the images and generate corresponding code. You can:

* Copy the code directly

* Request modifications

* Ask for explanations of specific parts

## Best Practices

### Image Preparation

* Use high-resolution images for better accuracy

* Crop to include only relevant UI elements

* Ensure good contrast between elements

* Include any specific styling details as prompt you want to capture

### Code Generation

* Start with simple components before complex layouts

* Review generated code for customization needs

* Use follow-up questions to refine the output

* Consider breaking complex UIs into smaller pieces

For complex designs, try generating code for individual components first, then

combine them into the final layout.

## Example Workflows

### Basic UI Component

Take a screenshot of a button or card design

Drag the image into Alex Sidebar

```swift

// Generated code example

struct CustomButton: View {

var body: some View {

Button(action: {}) {

Text("Get Started")

.font(.headline)

.foregroundColor(.white)

.padding(.horizontal, 24)

.padding(.vertical, 12)

.background(Color.blue)

.cornerRadius(8)

}

}

}

```

### Complex Layout

Identify main components in your layout. You can:

* Split your design into logical sections

* Take screenshots of individual components

* Prepare multiple images for different parts

You have two options:

* Drag multiple component images at once to generate all parts simultaneously

* Generate code for each major section individually

Ask Alex to help combine components into a cohesive layout. You can:

* Request adjustments to match the overall design

* Fine-tune spacing and alignment

* Add container views and navigation elements

## Troubleshooting

### Common Issues

* Ensure image quality is high

* Try cropping closer to the component

* Use screenshots rather than photos

* Provide additional context in your prompt

* Specify exact colors if known

* Include style guide references

* Ask for specific modifications

* Use follow-up questions for refinement

* Break complex layouts into sections

* Specify constraints explicitly

* Ask for alternative layout approaches

* Provide reference screenshots

## Tips for Better Results

1. **Start Simple**: Begin with basic components before attempting complex layouts

2. **Iterate**: Use follow-up questions to refine the generated code

3. **Combine Methods**: Use both image and text descriptions for better results

4. **Review Output**: Always review and test generated code in your project

Remember that Image-to-Code is a starting point. You can always ask Alex to

modify the generated code to better match your needs.

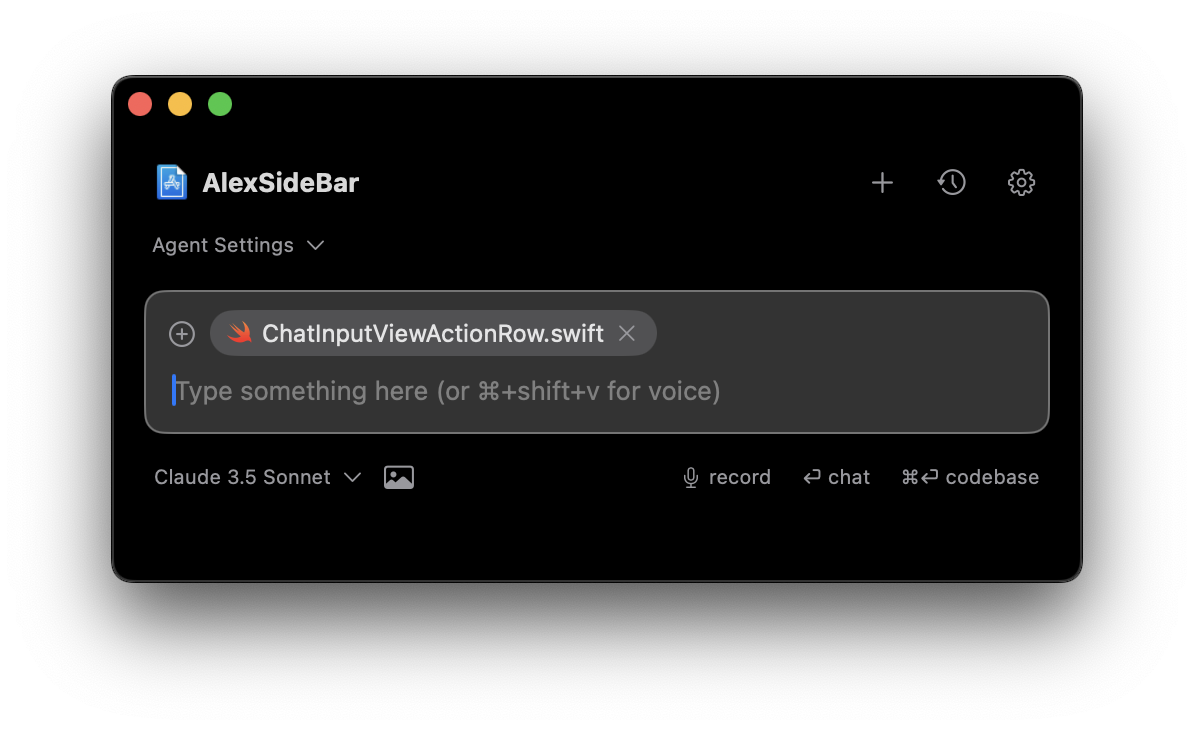

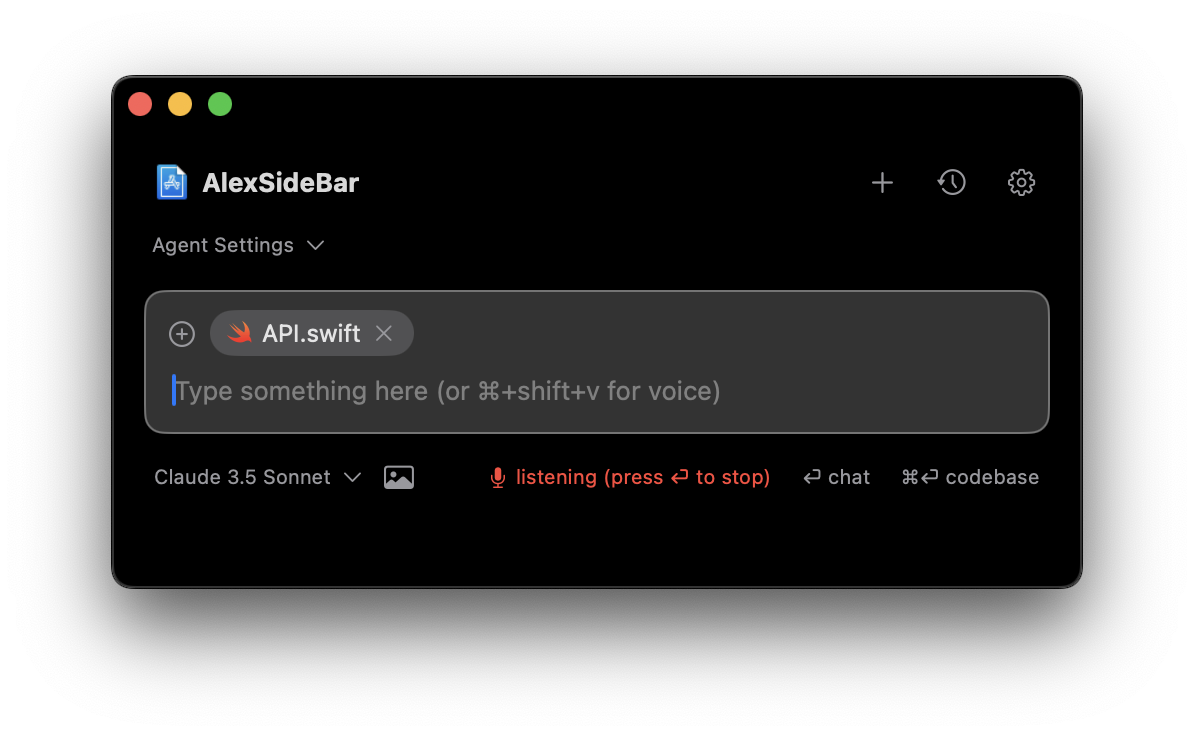

# Voice Mode

Source: https://alexcode.ai/docs/chat/input-modes/voice-mode

Use voice commands to interact with Alex Sidebar more naturally

## Overview

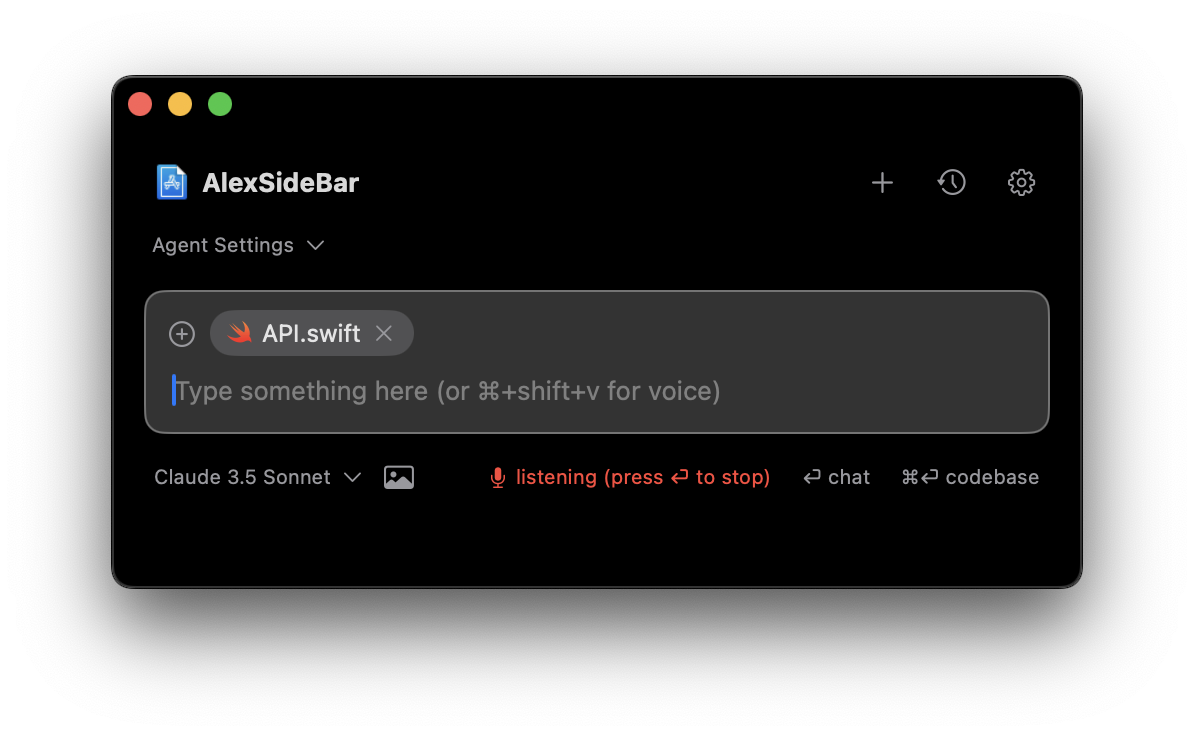

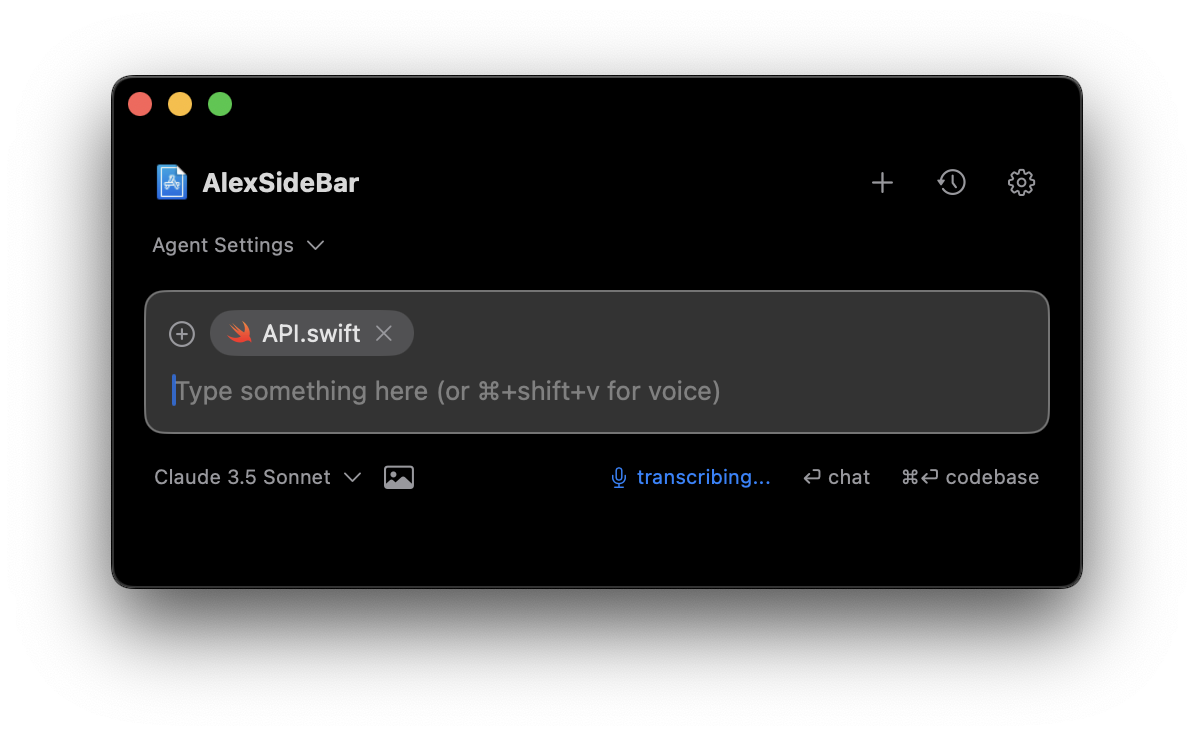

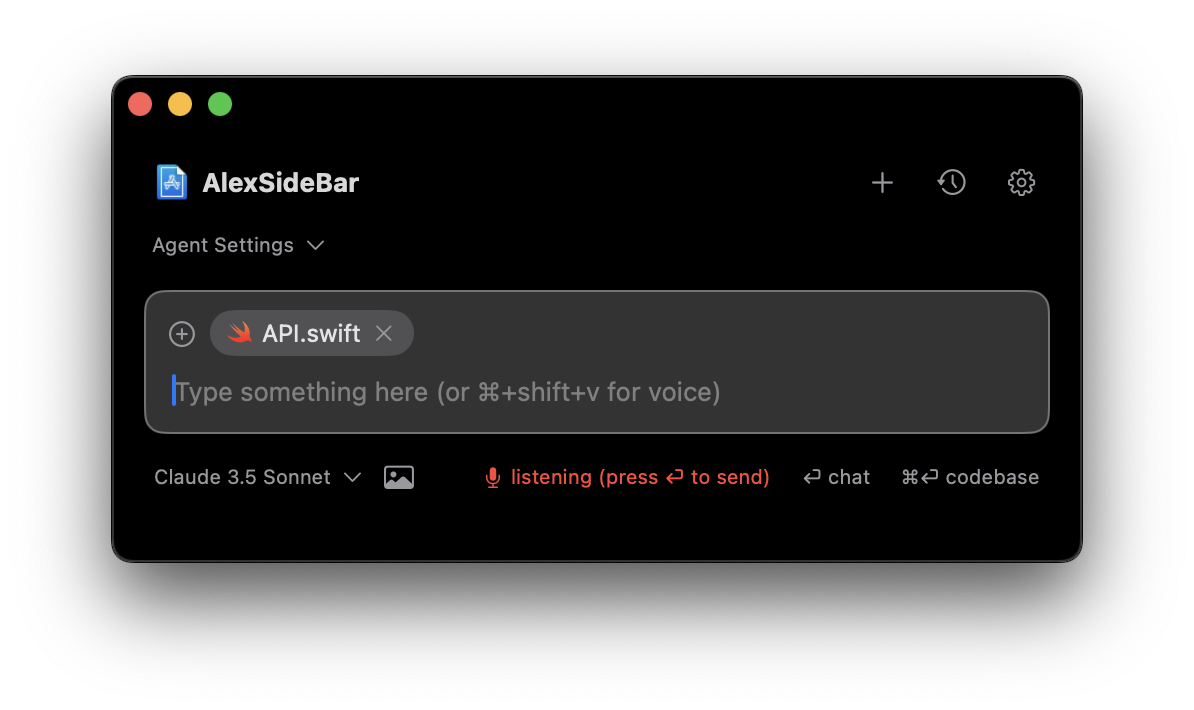

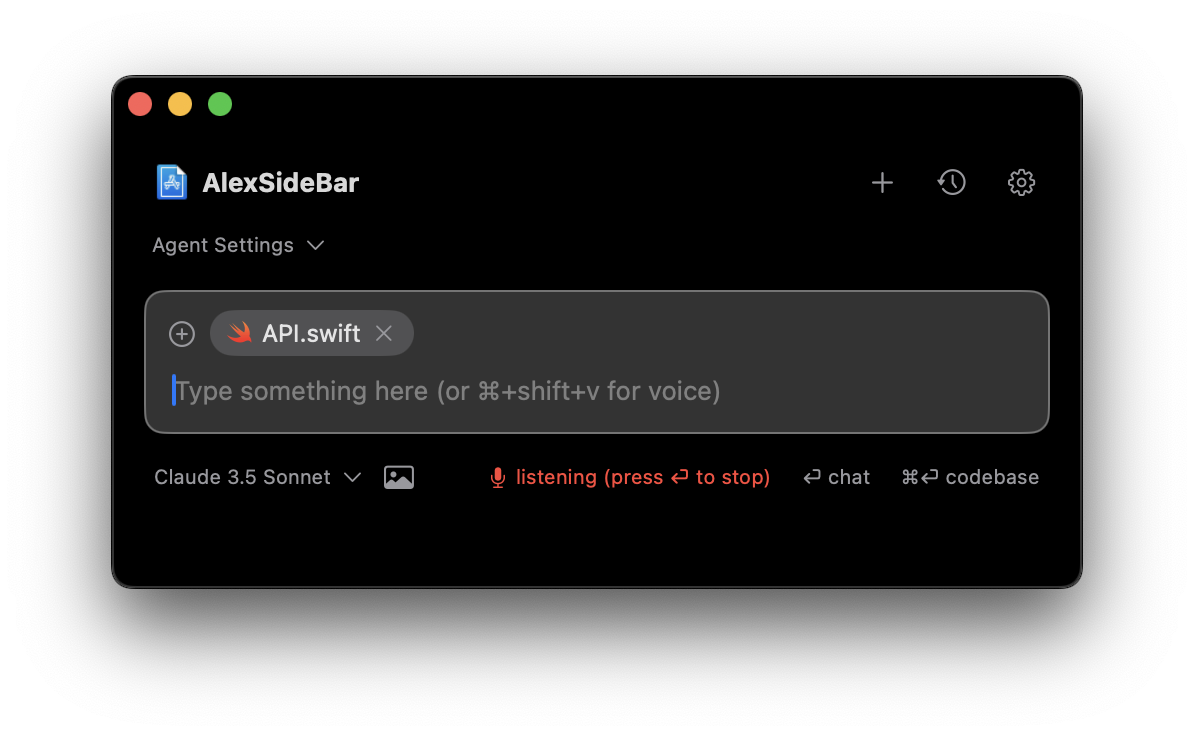

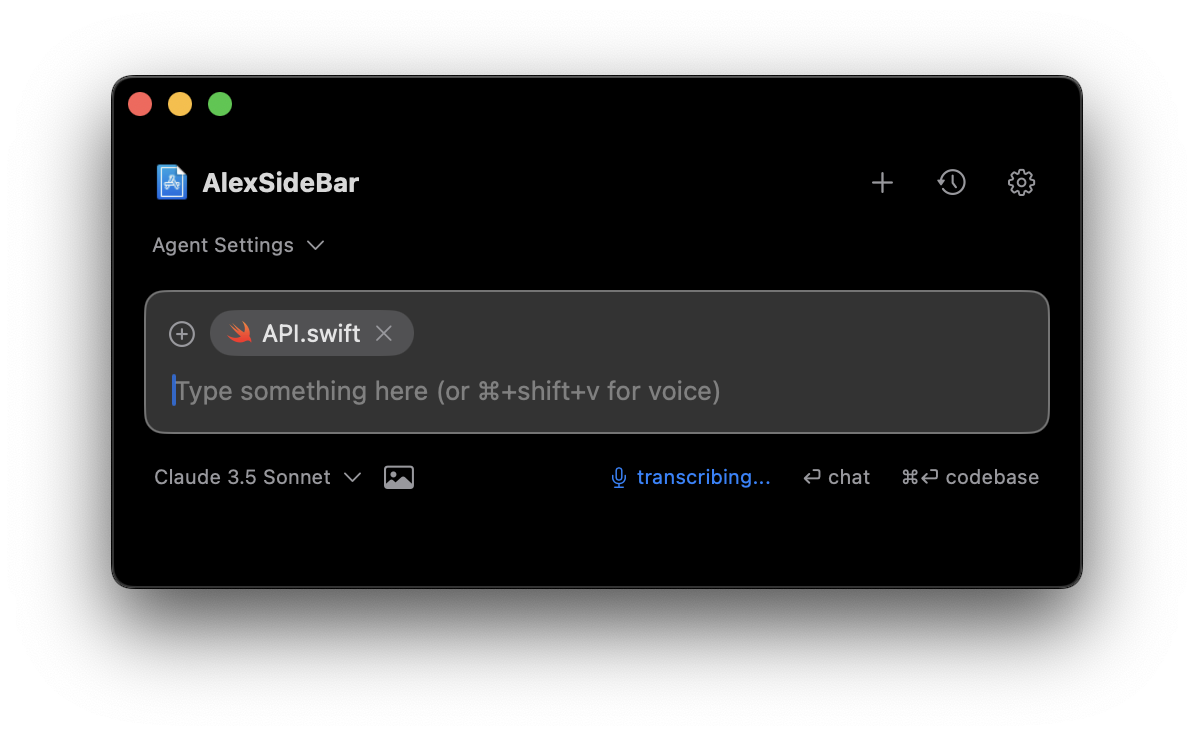

Voice Mode allows you to interact with Alex Sidebar using speech instead of typing, making code discussions more natural and efficient. This feature is particularly useful when you need to explain complex problems or want to reduce typing fatigue.

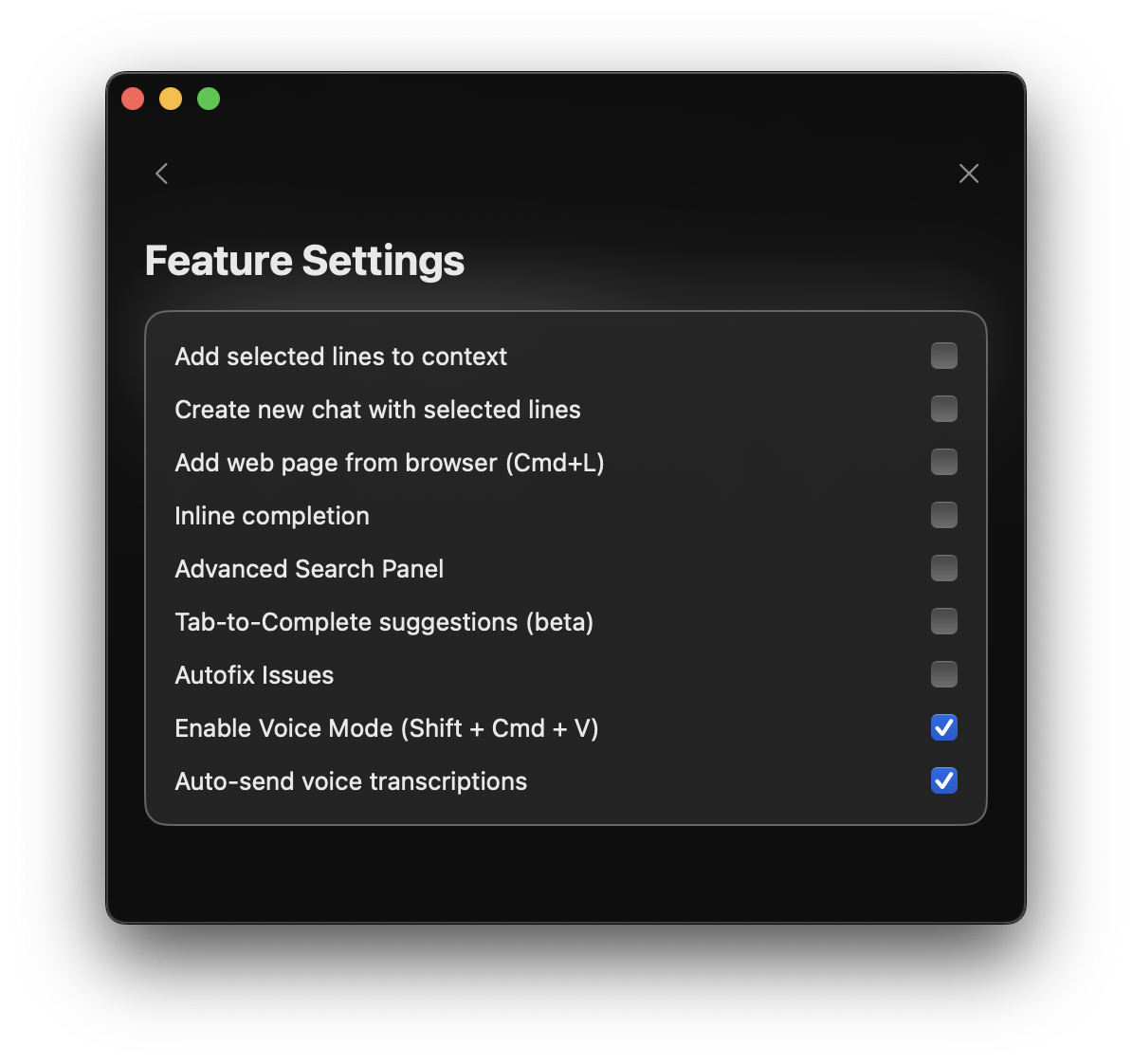

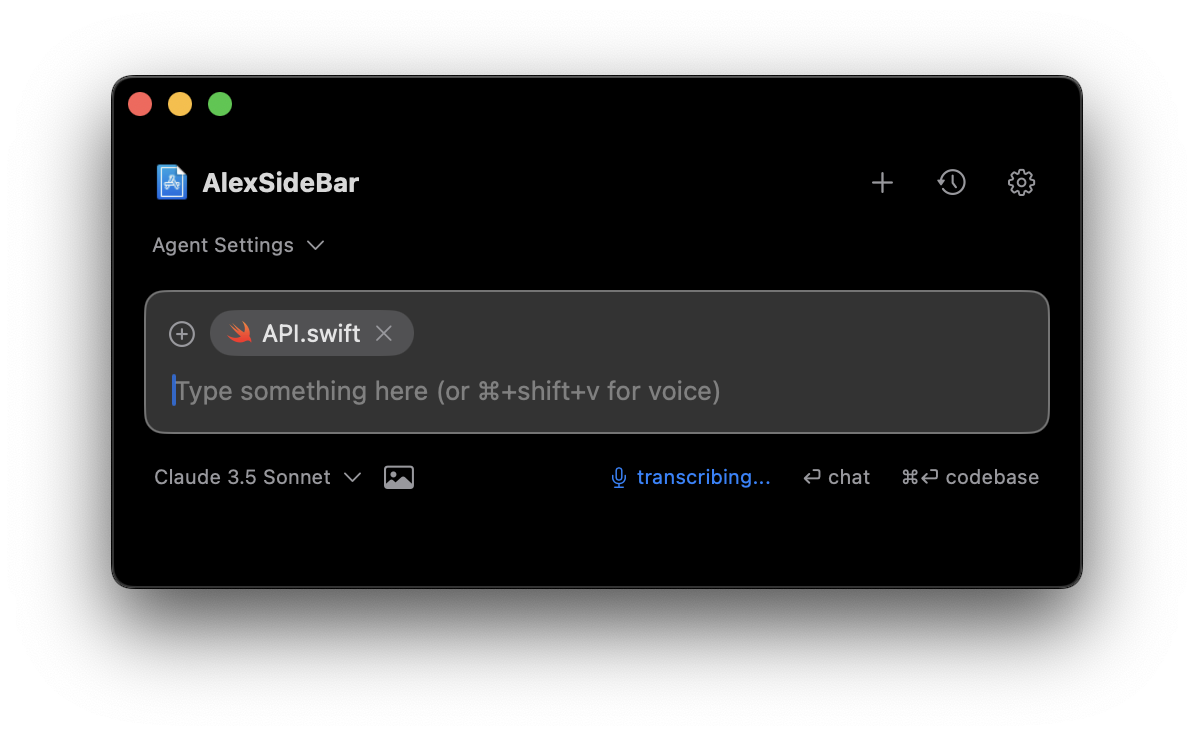

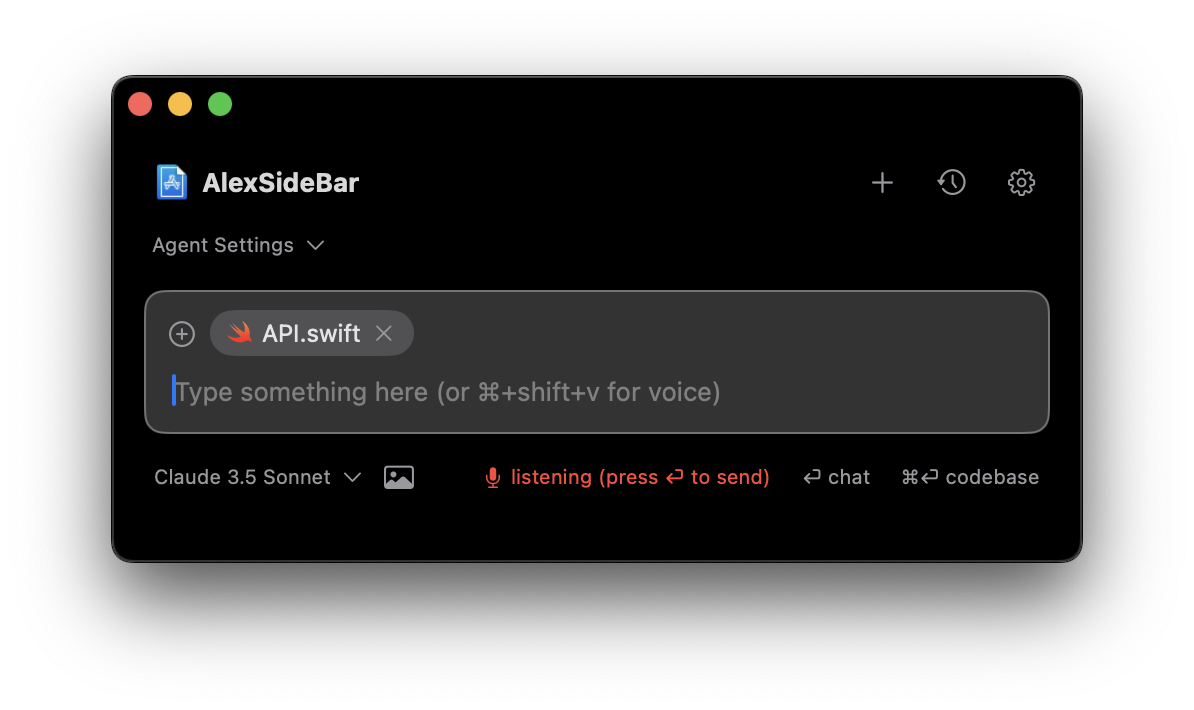

## Quick Actions

Press **Command + Shift + V** to toggle voice recording on or off. Use this shortcut to quickly start or stop voice input without clicking.

Click the microphone icon in the chat input area to start/stop recording.

## Using Voice Mode

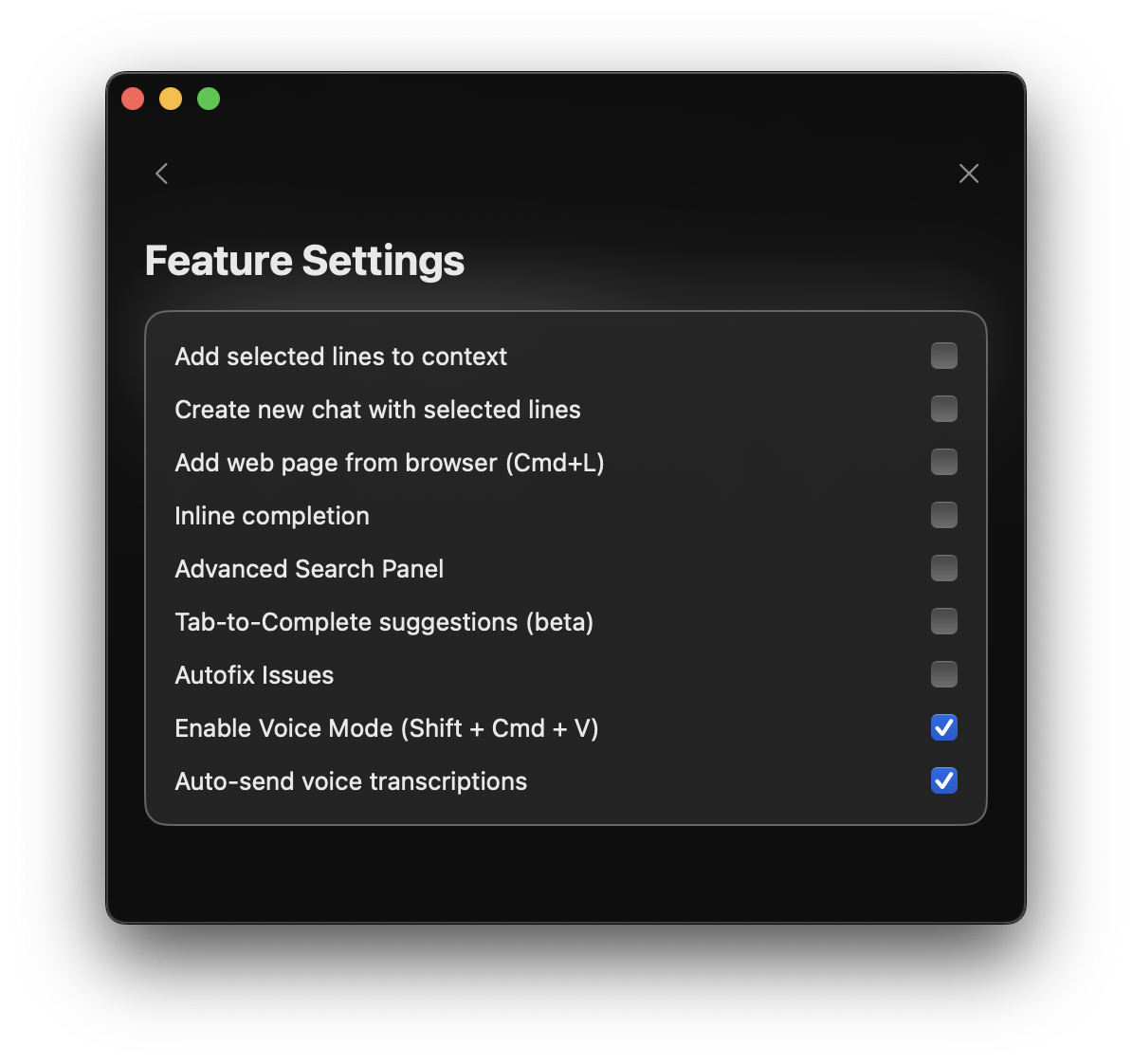

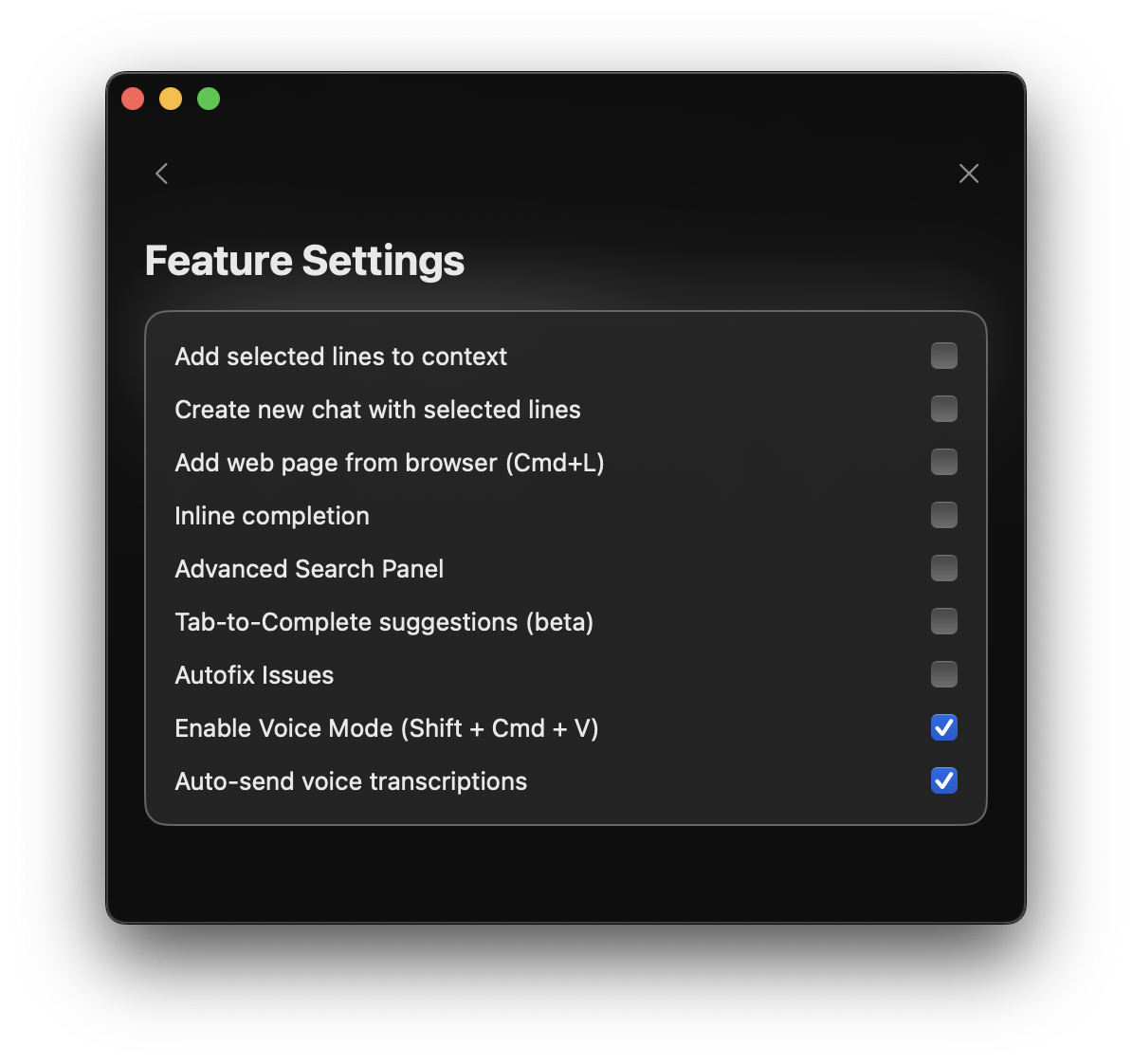

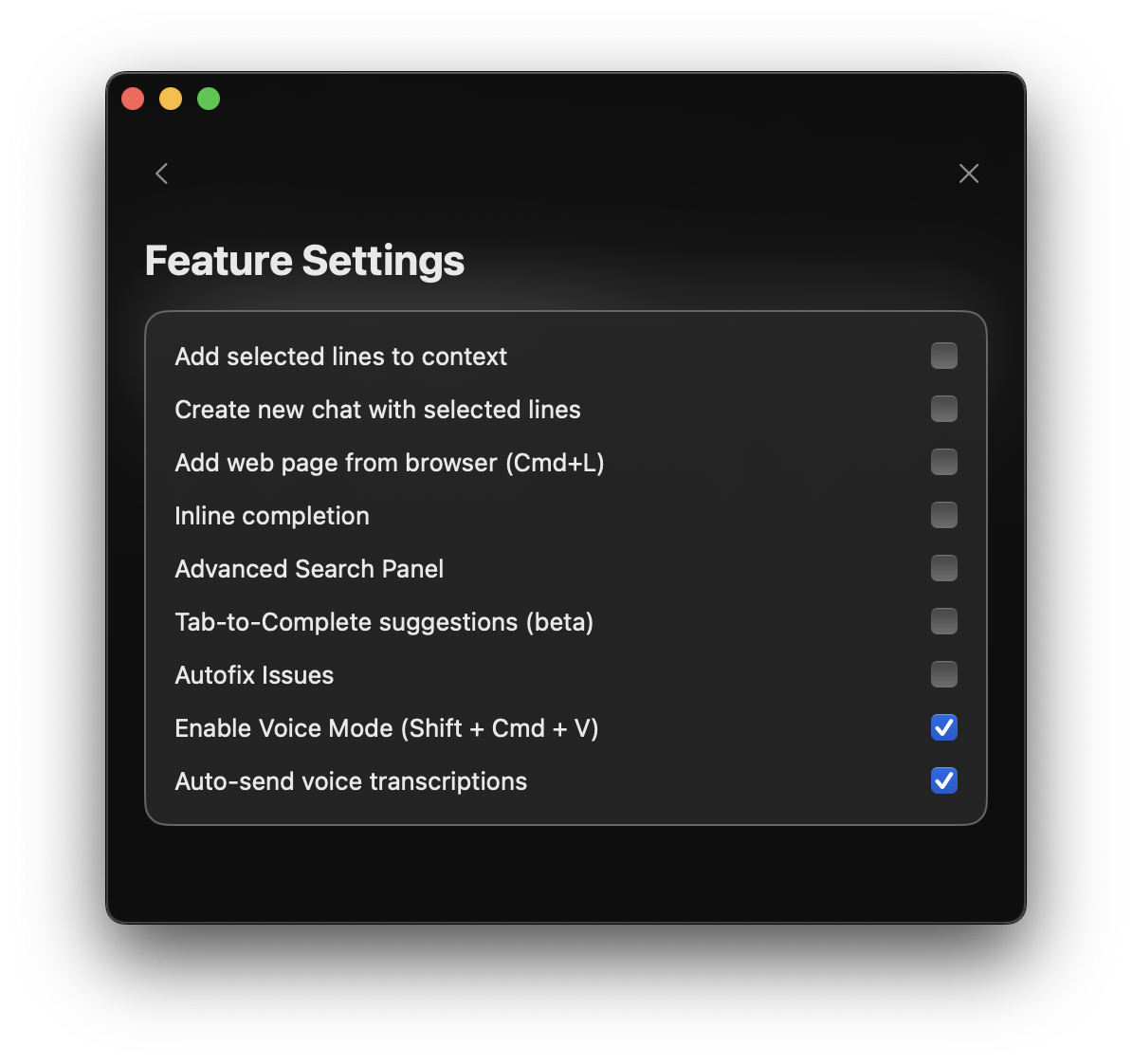

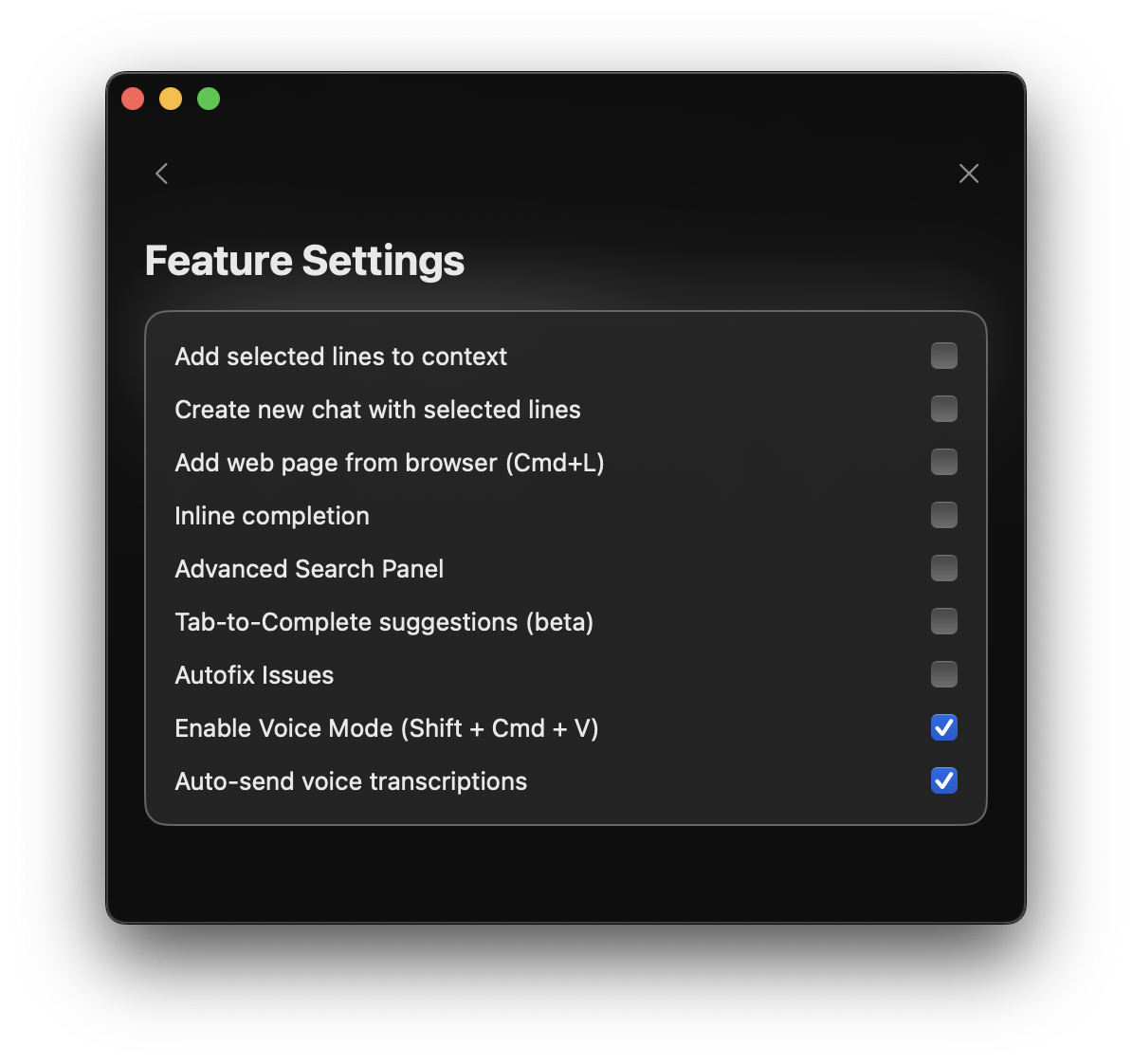

1. Open Settings (gear icon)

2. Navigate to Chat Settings

3. Enable Voice Mode

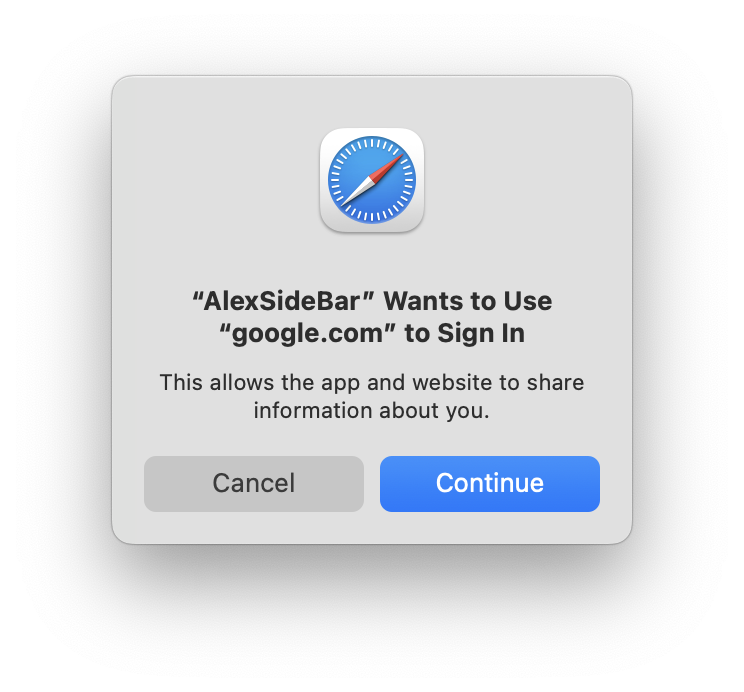

Voice Mode requires microphone permissions. You will be prompted to grant access when first enabling this feature.

Two ways to start:

* Press **Command + Shift + V**

* Click the microphone icon

Speak clearly into your microphone.

Use any of these methods to stop:

* Press **Command + Shift + V** again

* Click the microphone icon

* Press **Return/Enter** key

* Press **Escape** key to cancel recording

Your speech will be automatically transcribed and inserted into the chat input box.

Use the Escape key if you want to cancel the recording without transcribing the audio.

## Auto Mode

Enable "Auto Mode" in settings to automatically send transcribed messages:

* Finish speaking and stop recording

* Press Return/Enter

* Message sends automatically and starts AI inference

## Best Practices

* Speak at a natural pace

* Enunciate clearly

* Avoid background noise

* Use technical terms carefully

* Use voice for longer explanations

* Review transcription before sending

* Combine with code selection

## Accessibility Benefits

Voice Mode makes Alex more accessible and easier to use for everyone.

* When you are coding for long periods, **Voice Mode** lets you take breaks from typing while staying productive.

* For developers with mobility challenges or strain injuries, voice input provides a comfortable way to interact with Alex. You can dictate code explanations and questions instead.

* Having voice as an additional input option means you can choose what works best for you in different situations. Sometimes speaking is just more convenient than typing.

And when you need to explain complex concepts or walk through detailed logic, speaking it out loud often feels more natural and fluid than typing it all out.

# Overview

Source: https://alexcode.ai/docs/chat/overview

Learn how to use Alex Sidebar's chat for code assistance

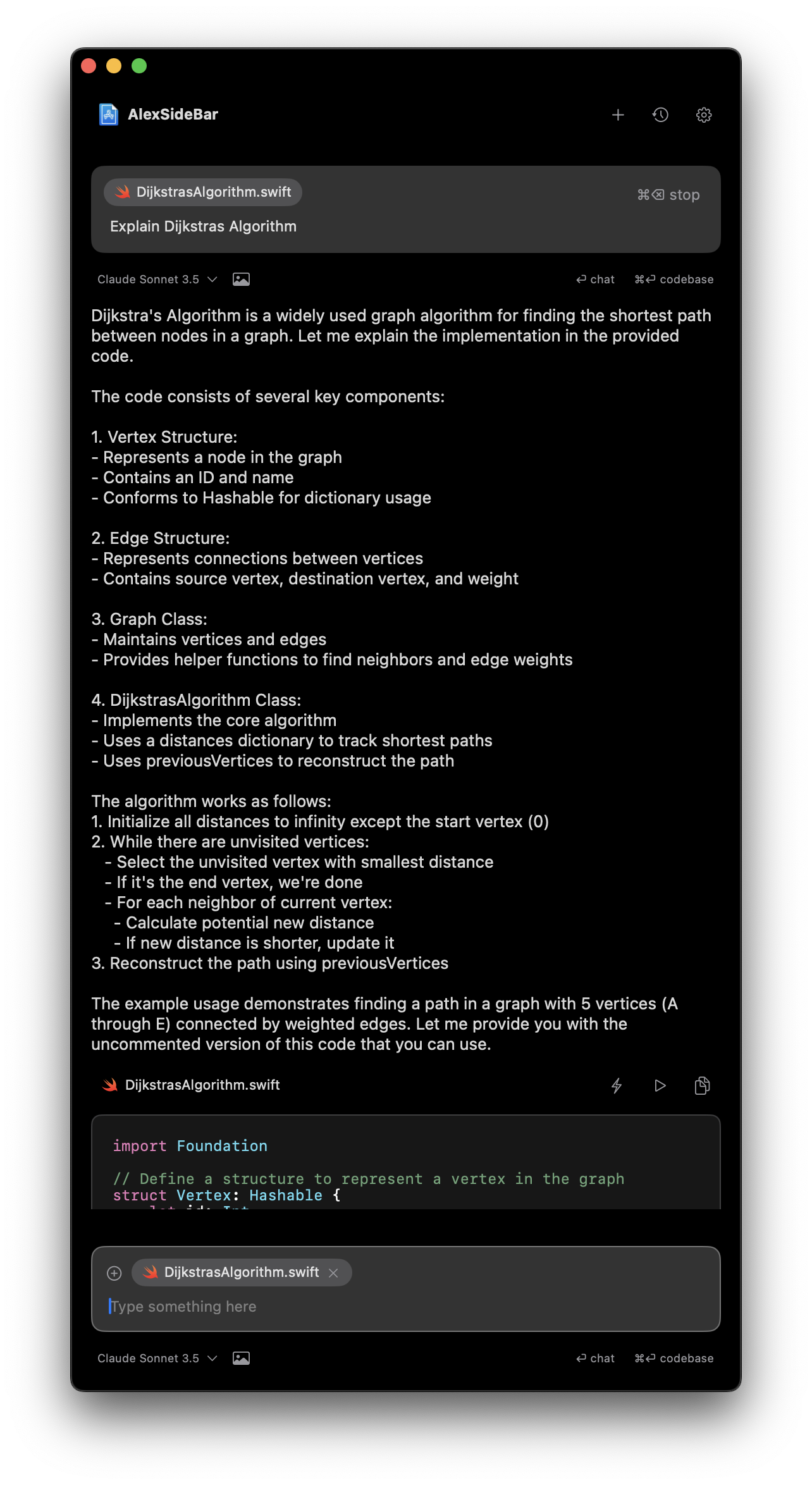

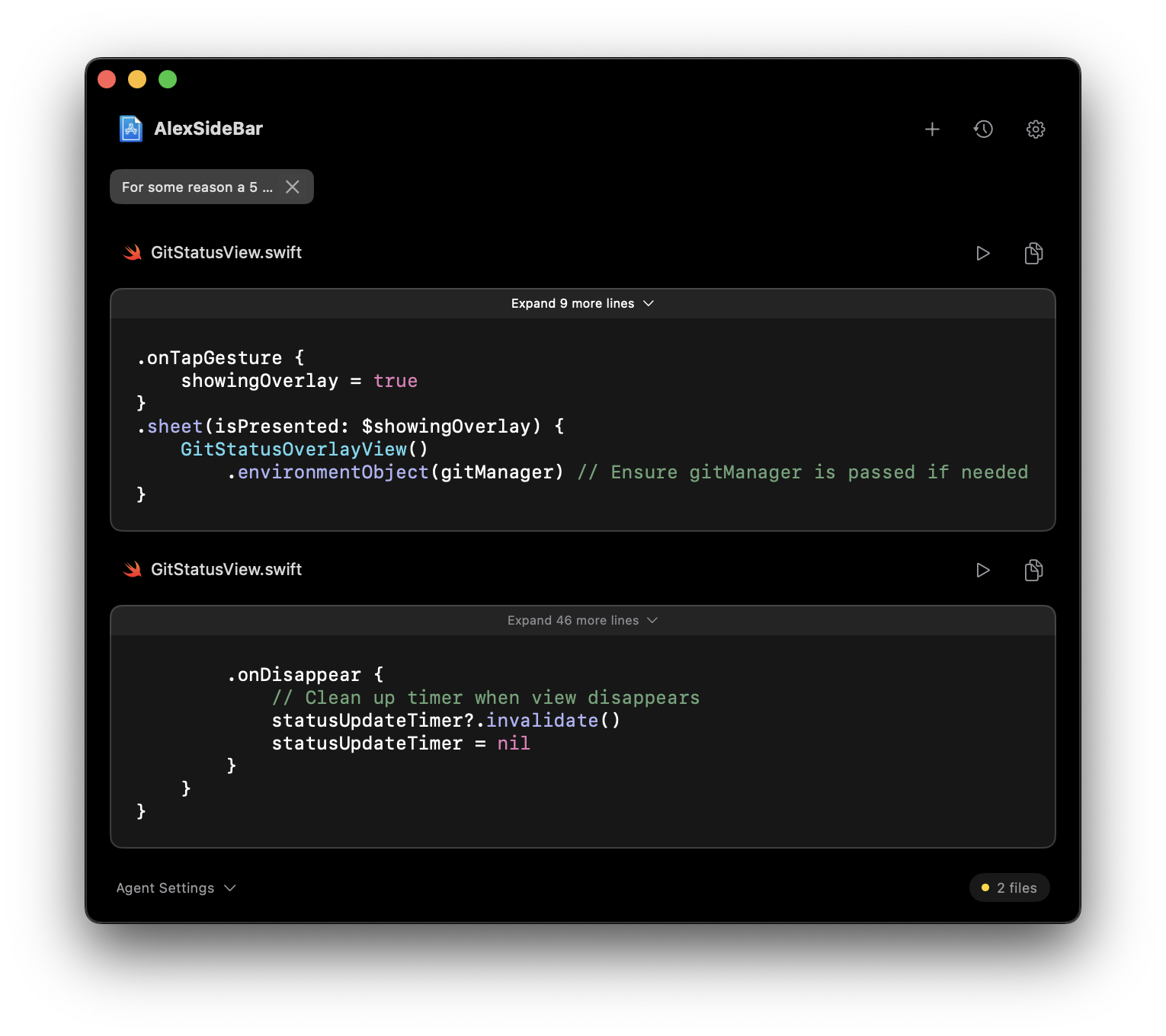

Alex Sidebar's chat runs alongside Xcode so you can discuss and improve code without leaving your editor. Ask questions about your code to speed up your work and resolve issues more quickly.

## Quick Actions

* Click the **"Build & Fix Errors"** button in the main view to build your project and fix compilation errors automatically, repeating until the build succeeds.

* Select code in Xcode and press **Command + L** to start a **new chat**. The selected content is automatically added as a reference.

* Press **Command + Shift + L** to add selected code to your existing chat without opening a new window. Perfect for building context incrementally.

* Press **Command + Enter** to start a chat with the entire codebase as context. Useful for high-level questions about your project.

* Access documentation, add more files, and more using the @ menu in your chat.

* Copy-paste or drag images directly into chat for design analysis and code generation. Perfect for UI discussions and visual debugging.
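The build-and-fix loop described at the start of this section can be pictured with a small sketch. Everything here is hypothetical: `BuildResult`, `buildAndFix`, and the `maxAttempts` cap are illustrative names and assumptions, not Alex Sidebar's actual implementation (the docs only say the loop continues until the build succeeds).

```swift
// Hypothetical model of one build; not part of any Alex Sidebar API.
struct BuildResult {
    let errors: [String]
    var succeeded: Bool { errors.isEmpty }
}

/// Builds, asks for fixes when the build fails, and repeats.
/// Returns the attempt on which the build succeeded, or nil if the cap was hit.
/// The cap is an assumption added here so the sketch always terminates.
func buildAndFix(
    maxAttempts: Int,
    build: () -> BuildResult,
    fix: ([String]) -> Void
) -> Int? {
    guard maxAttempts > 0 else { return nil }
    for attempt in 1...maxAttempts {
        let result = build()
        if result.succeeded { return attempt }
        fix(result.errors)
    }
    return nil
}

// Simulated project: each "fix" resolves one pending error.
var pendingErrors = ["Cannot find 'model' in scope", "Missing return in function"]
let attempts = buildAndFix(
    maxAttempts: 5,
    build: { BuildResult(errors: pendingErrors) },
    fix: { _ in pendingErrors.removeFirst(1) }
)
print(attempts ?? -1)
```

In this simulation the build succeeds on the third attempt, after two rounds of fixes.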

## Copy Request Button

Perfect for using the most powerful models like o3 Pro or models with massive context windows like Gemini 2.5 Pro (1M+ tokens).

The Copy Request button lets you export your code context to use with external AI services:

Look for the copy icon at the bottom of any message in your chat.

Clicking copies:

* All code context and file contents

* Your current query

* Formatted for easy pasting

Paste the copied content into:

* **ChatGPT o3-pro** - For the most powerful reasoning

* **Google AI Studio** - For Gemini 2.5 Pro with 1M+ token context
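As a rough mental model of what gets copied, the sketch below joins file contents and the current query into one paste-ready string. The `ContextFile` type, the `makeCopyRequest` function, and the "File:" / "Question:" labels are assumptions made for illustration; the real export format is not documented here.

```swift
// Hypothetical representation of one file attached to the chat.
struct ContextFile {
    let path: String
    let contents: String
}

/// Joins every attached file and the current query into one paste-ready string.
/// The "File:" / "Question:" labels are illustrative, not the real export format.
func makeCopyRequest(files: [ContextFile], query: String) -> String {
    var sections = files.map { "File: \($0.path)\n\($0.contents)" }
    sections.append("Question:\n\(query)")
    return sections.joined(separator: "\n\n")
}

let request = makeCopyRequest(
    files: [ContextFile(path: "Views/CardView.swift", contents: "struct CardView {}")],
    query: "How can I make this view reusable?"
)
print(request)
```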

## Getting Started

In Xcode's editor window, highlight the code you want to discuss or improve.

Providing relevant code context helps the AI better understand your question and provide more accurate responses.

Select code and press **Command + L** to start a chat.

Additional ways to start a chat:

* Click the chat (plus) icon in the top right of the sidebar

* Click the **"Build & Fix Errors"** button for automatic error resolution

* Use **Command + Enter** for a new chat with full codebase context

The more relevant context you provide, the more accurate and helpful the responses will be.

## Context Management

* Chats are automatically saved

* Access previous chats from history

* Clear individual history

* Reference multiple files in one chat

* Generate changes for multiple files

## Common Use Cases

* Select complex functions or blocks

* Press **Command + L**

* Ask for step-by-step explanations

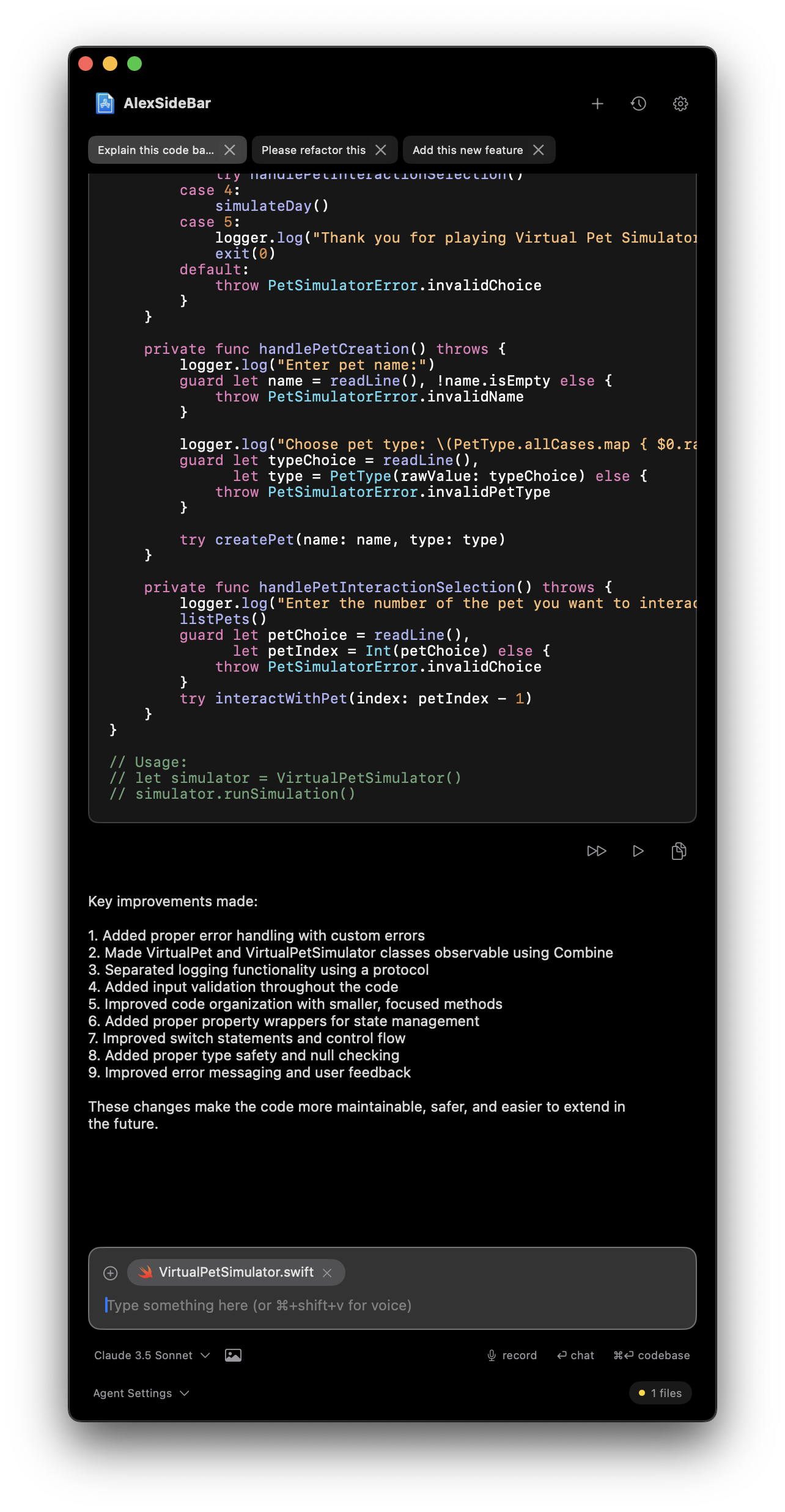

* Get detailed breakdowns of code behavior

* Highlight code that needs improvement

* Start a chat with **Command + L**

* Request refactoring suggestions

* Apply changes directly from chat

* Select problematic code sections

* Include error messages if available

* Ask for debugging assistance

* Get targeted solutions and fixes

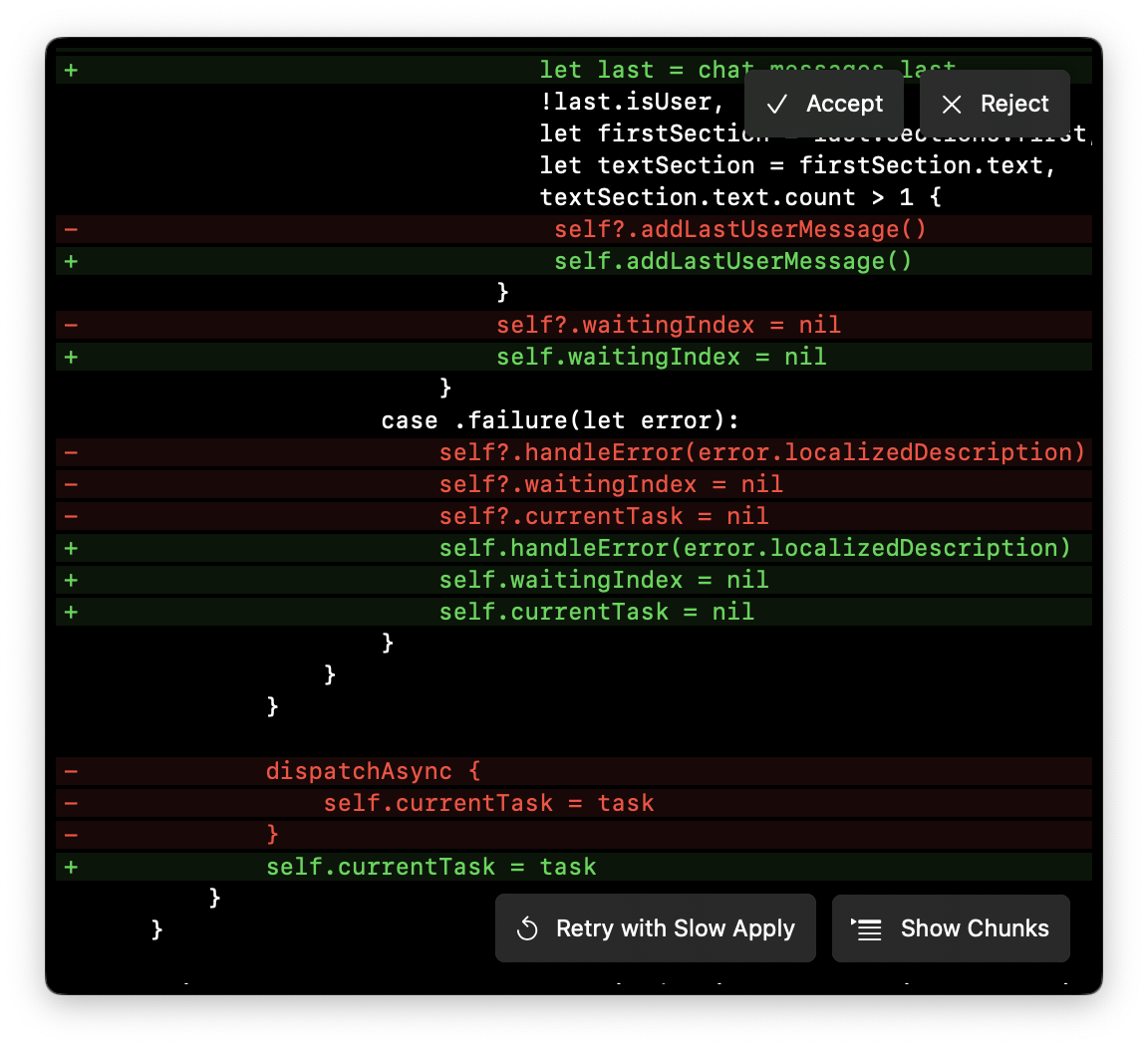

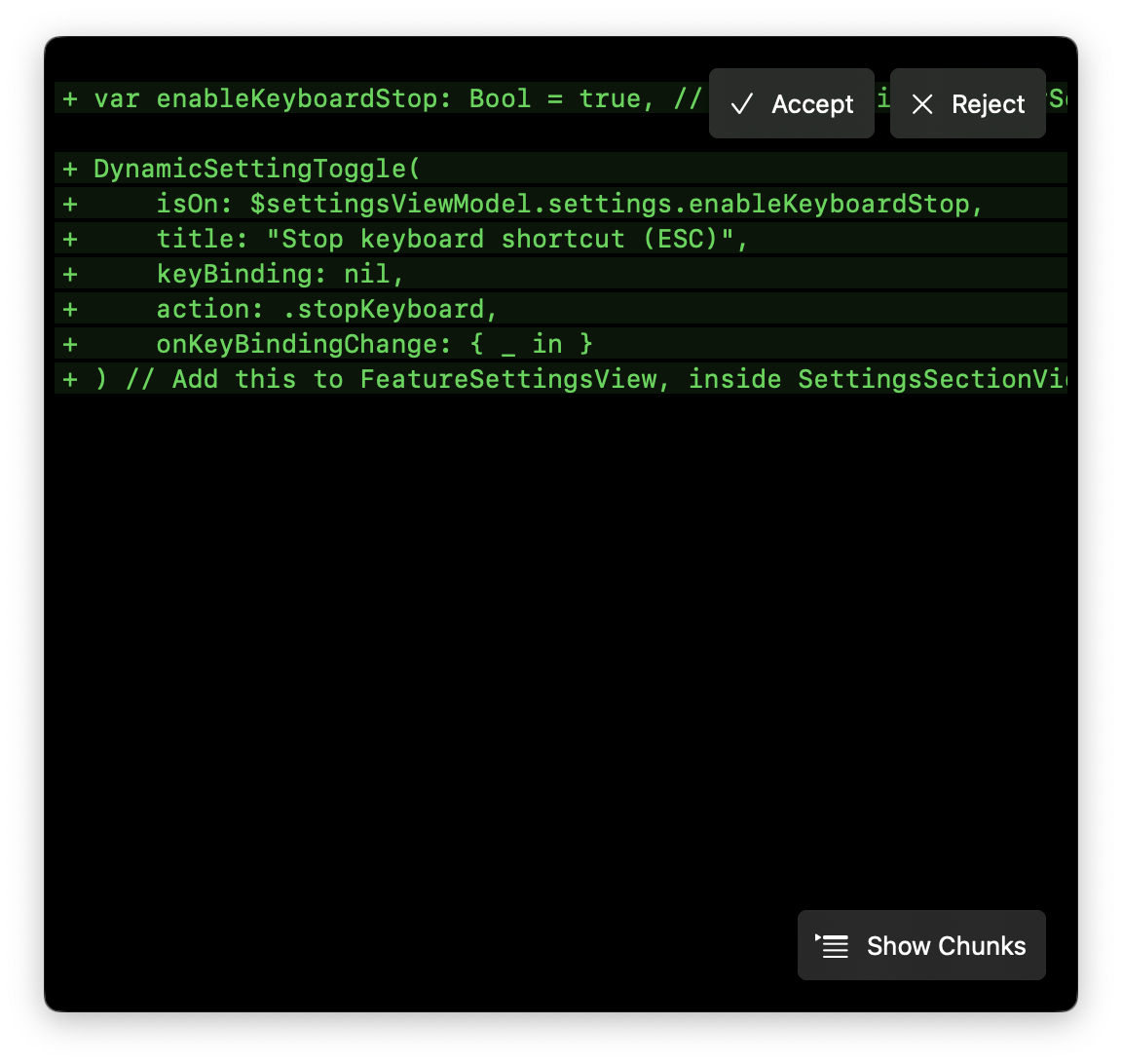

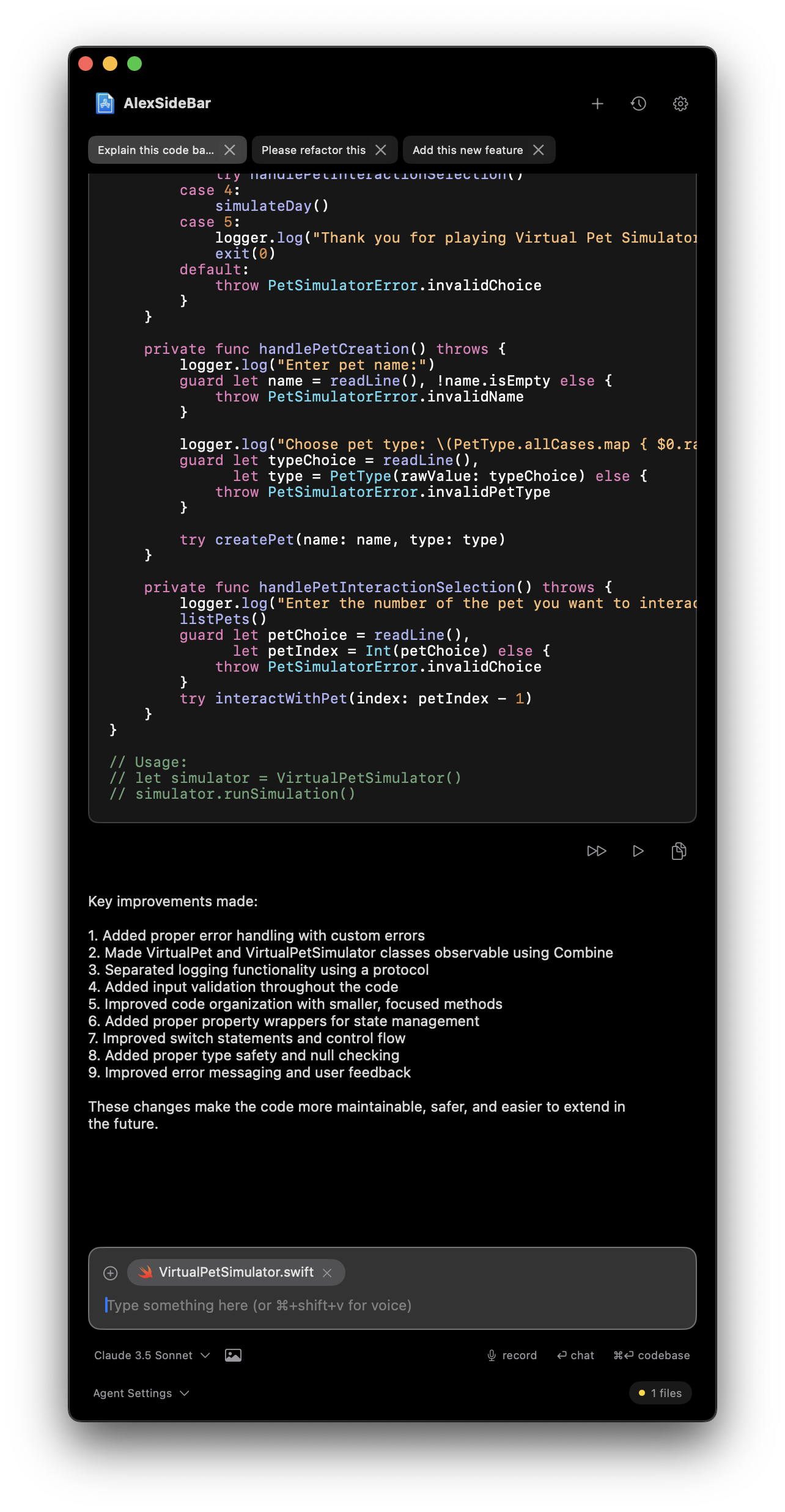

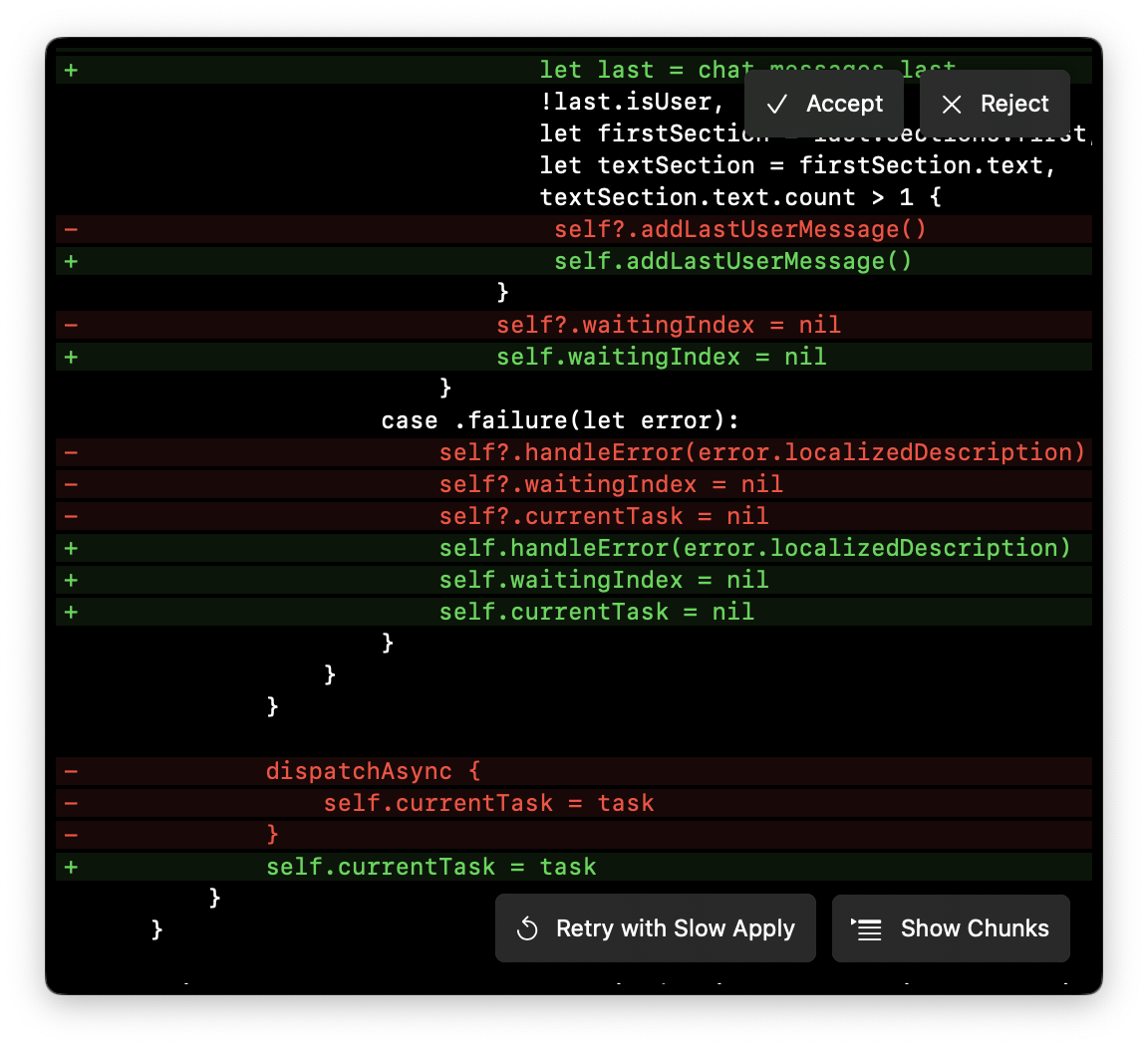

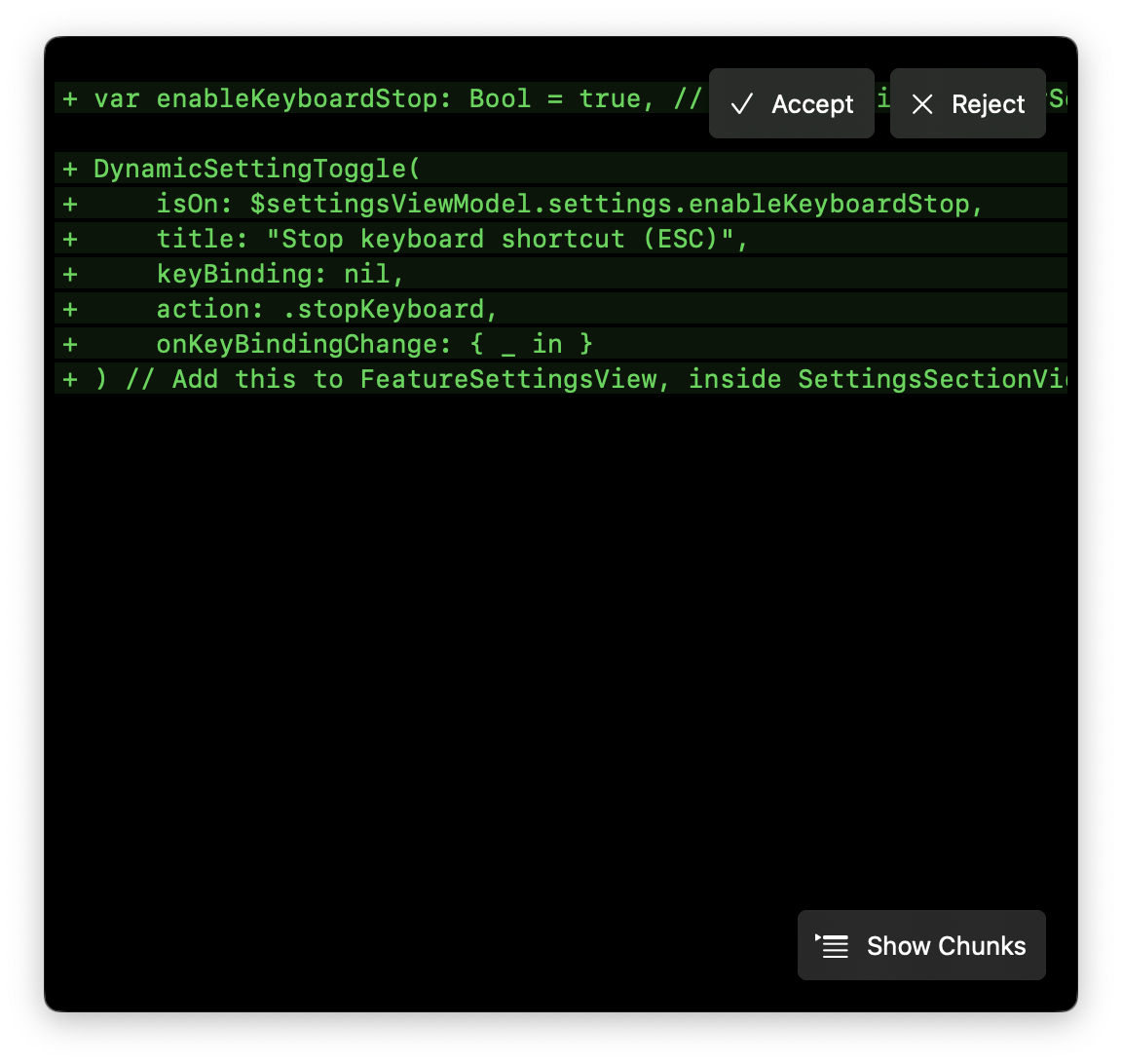

## Applying Changes

* Smart and fast code diff

* Handles both simple and complex changes

* Chunks mode enabled by default for better handling

* Quick Apply for instant changes without diff panel

* Fast-forward button (⏩) for instant application

* Perfect for all types of code modifications from quick fixes to refactoring

## Think First Mode

The "Think First" option combines DeepSeek R1's reasoning capabilities with Claude's responses for the best possible results. When enabled:

1. DeepSeek R1 first reasons about the problem using thinking tokens

2. This analysis is then used to guide Claude's response

3. Results in more thorough and accurate solutions

Think First mode can be toggled for individual messages using the "Think first" option under each message input.
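The two-stage flow above can be sketched as a small pipeline. This is only an illustration under stated assumptions: the `Model` closure type and the `respond` function are hypothetical stand-ins, and the way the analysis is combined with the request is invented for the example rather than taken from Alex Sidebar.

```swift
// Stand-in for a model call: takes a prompt, returns text.
// Real models would be reached over the network; plain closures keep this runnable.
typealias Model = (String) -> String

/// With thinkFirst off, the responder sees only the prompt.
/// With it on, the reasoner runs first and its analysis is passed along.
func respond(to prompt: String, thinkFirst: Bool, reasoner: Model, responder: Model) -> String {
    guard thinkFirst else { return responder(prompt) }
    let analysis = reasoner(prompt)
    return responder("Analysis:\n\(analysis)\n\nRequest:\n\(prompt)")
}

let reasoner: Model = { "Check where the optional is force-unwrapped in: \($0)" }
let responder: Model = { "Response based on [\($0)]" }

let quick = respond(to: "Fix the crash", thinkFirst: false, reasoner: reasoner, responder: responder)
let thorough = respond(to: "Fix the crash", thinkFirst: true, reasoner: reasoner, responder: responder)
print(quick)
print(thorough)
```

The extra reasoning pass is why Think First responses take longer: two model calls run in sequence instead of one.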

## Best Practices

* Be specific about what you want

* Provide necessary context

* Use appropriate commands

* Break complex questions into smaller parts

* Keep context focused and relevant

* Remove unnecessary files

* Update context when switching tasks

* Clear context for new topics

Need more detailed guidance? Learn more about best practices in our [Best Practices guide](/best-practices).

## Web Search Integration

The **Web** button in chat provides access to curated iOS development resources. When enabled:

* Automatically searches popular iOS development blogs

* Finds relevant GitHub repositories and discussions

* Retrieves up-to-date documentation and examples

### Using Web Search

1. Click the "Web" button in chat

2. Alex searches relevant iOS resources

3. Results are automatically added as context

4. Get responses based on the latest information

The web search feature ensures you get the most current solutions and best practices from the iOS development community.

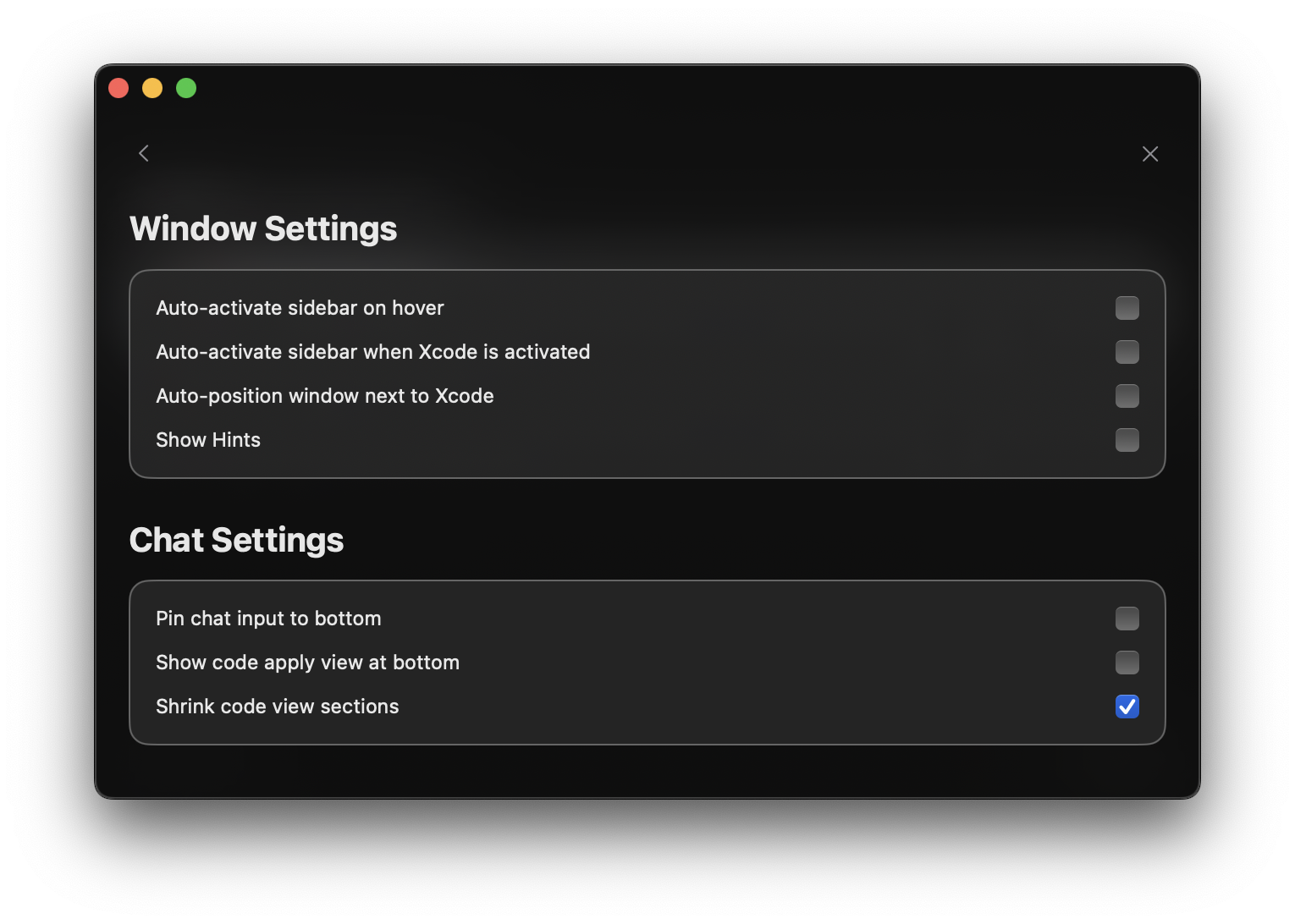

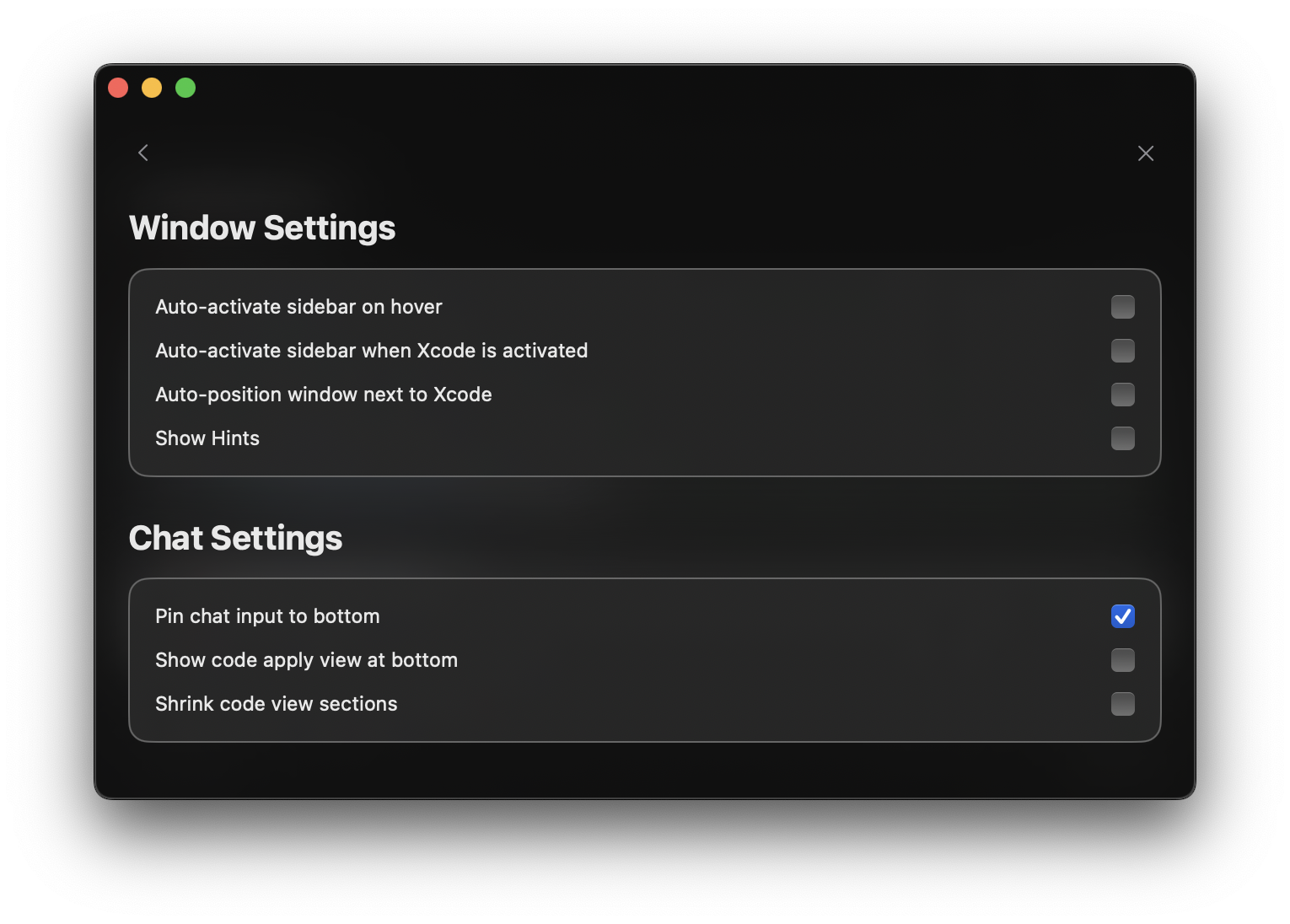

# UI Customization

Source: https://alexcode.ai/docs/chat/ui/customization

Customize Alex Sidebar chat interface to match your preferences

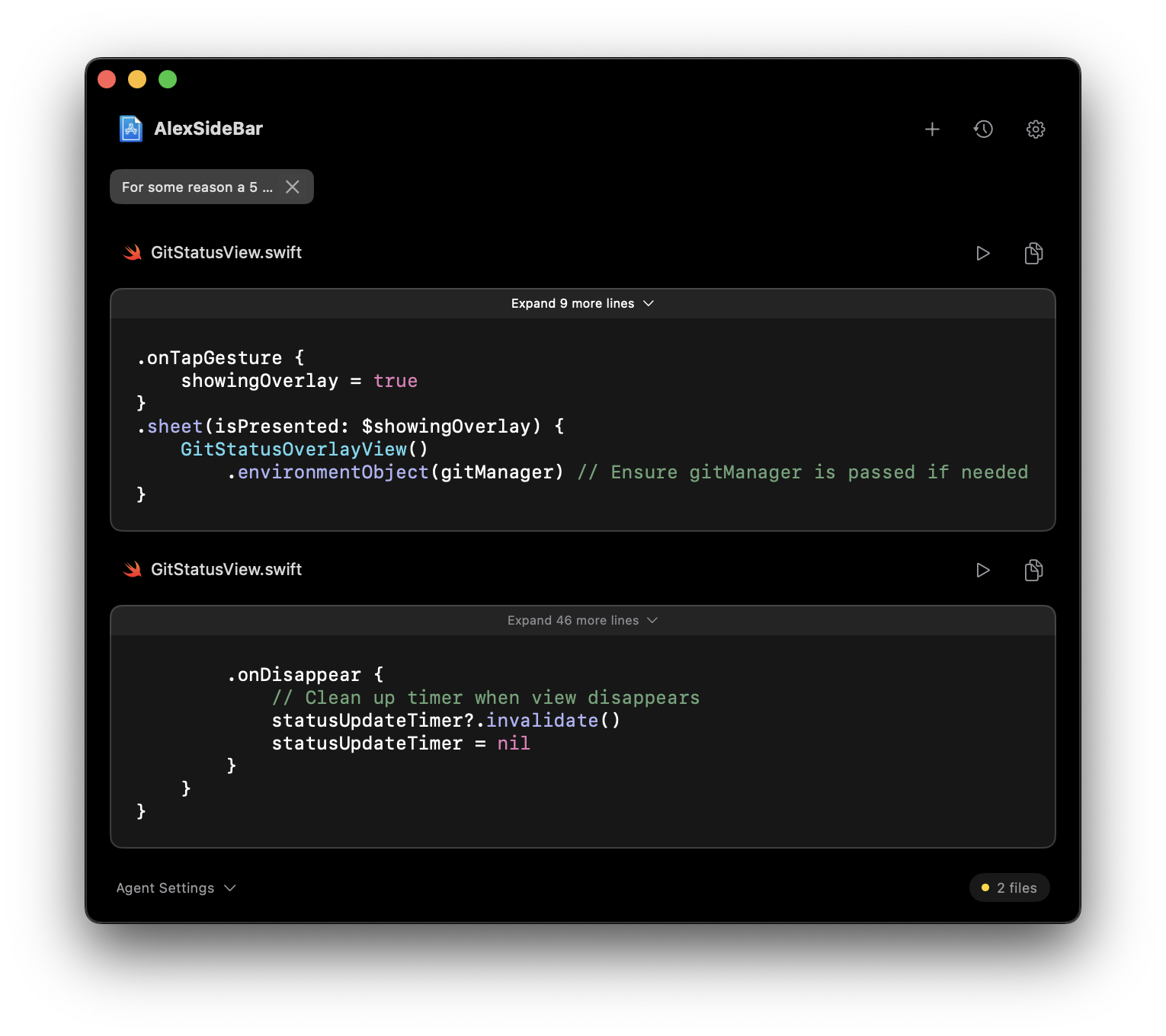

## Code Section Expansion

Control how code sections are displayed in chat by default. This setting helps you manage the visibility of code blocks in your conversations.

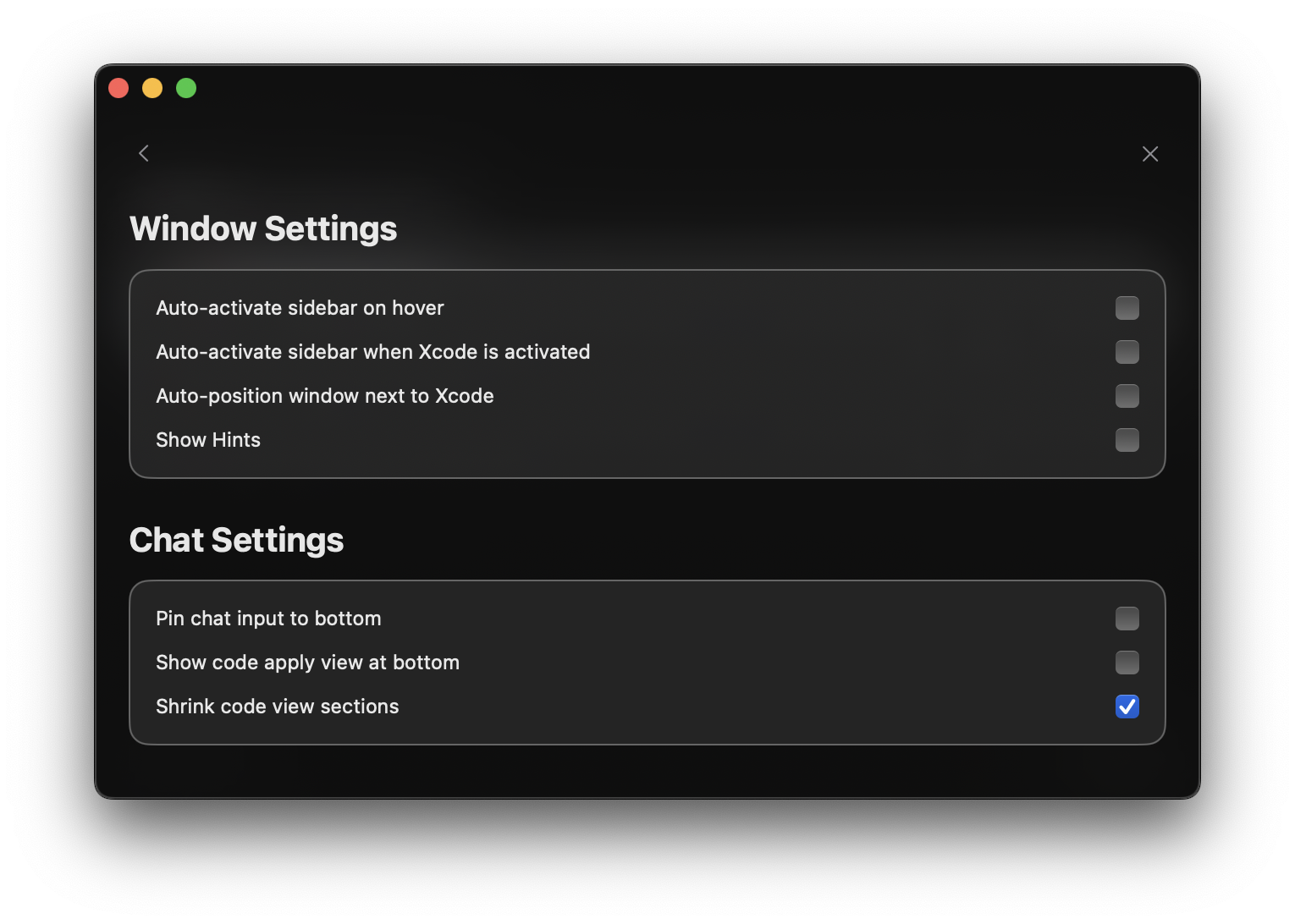

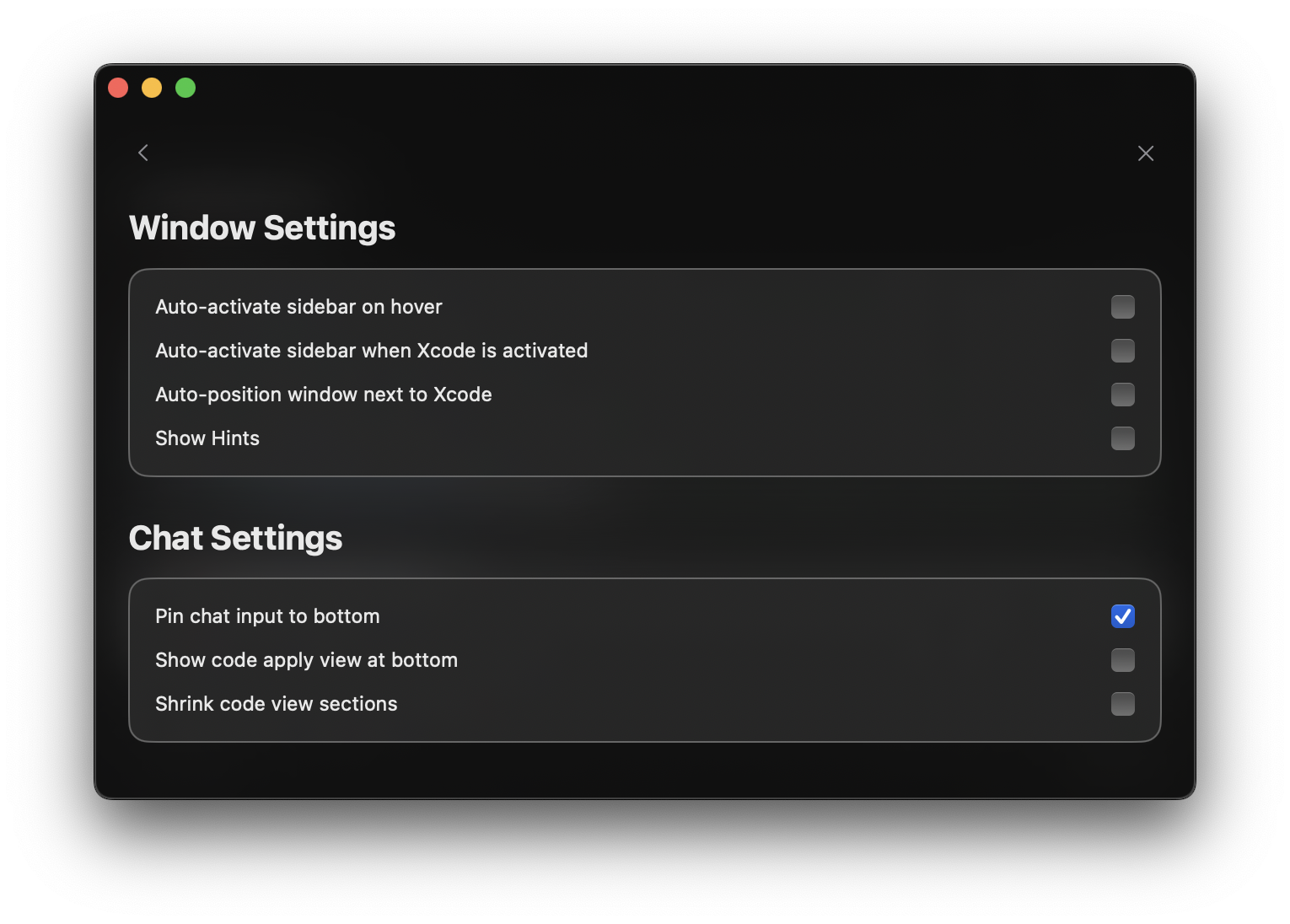

Code sections now expand by default for immediate visibility of complete code blocks.

Customize this behavior in Settings > Window Management to match your workflow preferences.

## Configure Code Expansion

You can configure the code expansion behavior in Settings > Window Management. This setting allows you to control whether code blocks automatically expand or remain collapsed by default.

## Pin Chat to Bottom

Keep the chat input field fixed at the bottom of the window for easy access and improved usability.

Keep the chat input field in view at all times for instant access.

Access the chat feature directly without searching or scrolling.

Interact with the chat interface for a natural conversation rhythm.

Optimize the display of long chat histories while keeping the input accessible.

## Code Apply View Position

The code apply view position feature lets you keep the code changes interface at the bottom of the window, allowing you to quickly apply changes without needing to scroll up through the code changes.

Keep the code apply interface fixed at the bottom for easy access to changes.

Review and apply code changes without scrolling up through long conversations.

# Error & Warning Resolution

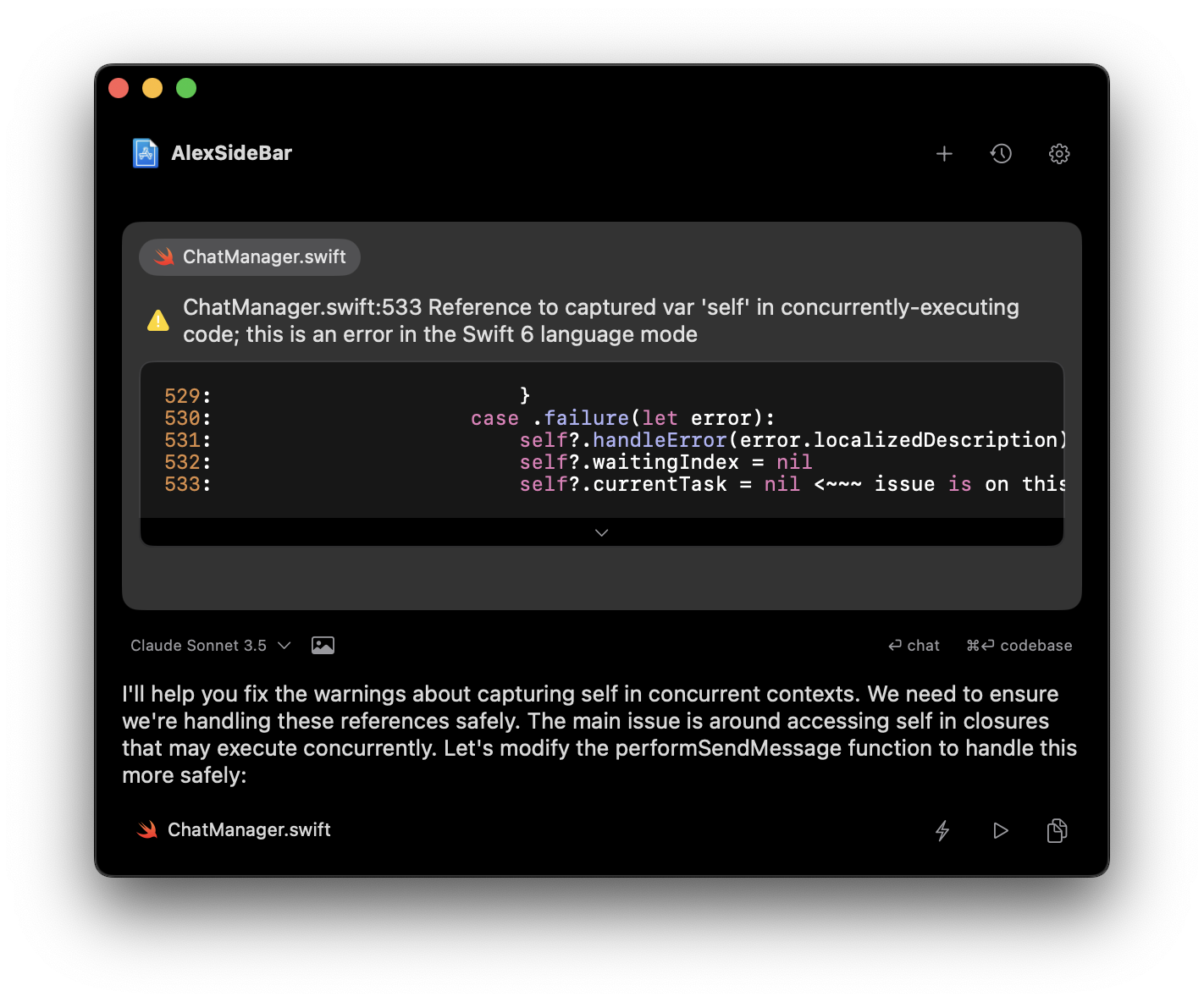

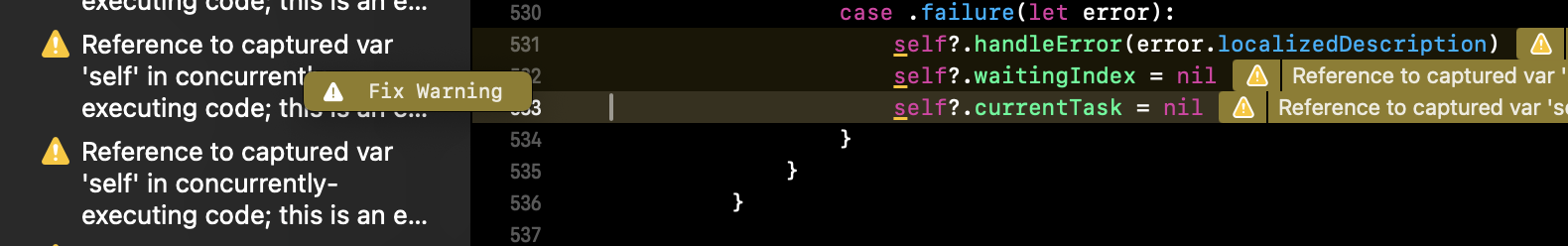

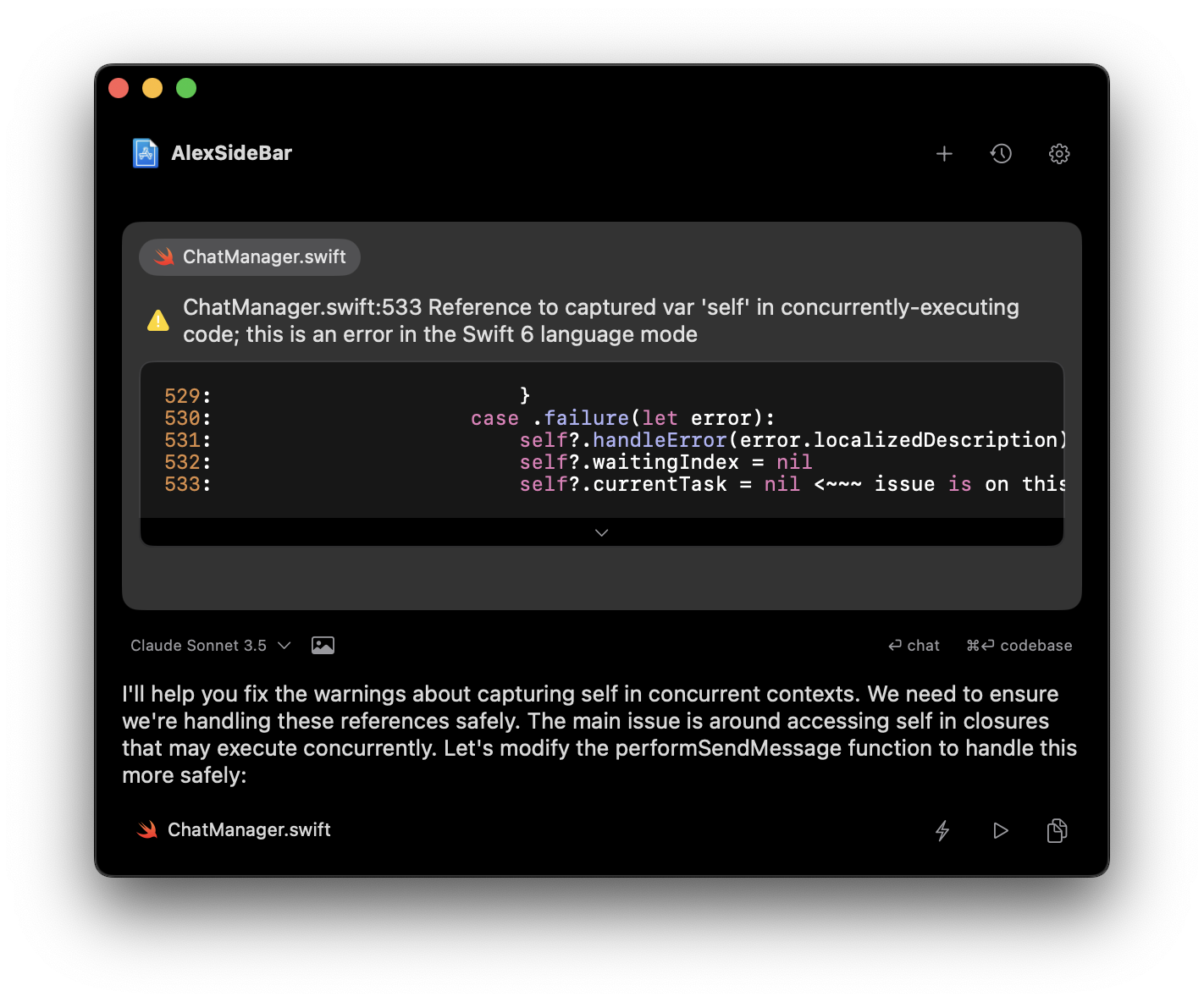

Source: https://alexcode.ai/docs/completions/error-fixes

Learn how to use Alex Sidebar to quickly resolve Xcode errors and warnings

## Quick Fix Integration

Alex Sidebar seamlessly integrates with Xcode's diagnostic system to help resolve errors and warnings efficiently.

Click any error or warning indicator in Xcode's gutter to get instant AI-powered solutions.

Automatic context gathering and error analysis for targeted fixes.

## Using Quick Fix

Hover over the line containing the red (error) or yellow (warning) indicator in Xcode's gutter, then click the indicator.

Alex Sidebar starts a new chat with the error or warning message and the relevant code context.

Click "Apply" to implement the suggested changes. The smart apply button will handle both simple fixes like missing imports and complex changes that require accurate diffing. For faster application, use Quick Apply (⏩) to instantly apply changes without the diff panel.

Always review the proposed changes before applying them to ensure they match your codebase requirements.

## Common Fixes

Handles common build-time issues:

* Missing imports

* Type mismatches

* Protocol conformance

* Initialization errors

* Access control issues
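To make these build-time categories concrete, here is a small hypothetical snippet showing what code might look like after such fixes. `TodoItem` and `parsePriority` are invented for this sketch; the comments mark which category each line illustrates.

```swift
import Foundation  // Missing import: UUID and Date are declared in Foundation.

// Protocol conformance: Identifiable requires an `id` property.
struct TodoItem: Identifiable, Equatable {
    let id: UUID
    let title: String
    let createdAt: Date

    // Initialization error: every stored property receives a value here.
    init(title: String, createdAt: Date = Date()) {
        self.id = UUID()
        self.title = title
        self.createdAt = createdAt
    }
}

// Type mismatch: user input arrives as a String, but the model wants an Int.
// Converting explicitly (with a fallback) replaces the invalid assignment.
func parsePriority(_ text: String) -> Int {
    Int(text.trimmingCharacters(in: .whitespaces)) ?? 0
}
```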

Addresses potential runtime problems:

* Memory management

* Thread safety

* Deprecated API usage

* Performance optimizations

* Best practice violations
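For the thread-safety item above, one common shape of fix is to guard shared mutable state with a lock. The sketch below is a generic Swift illustration, not output from Alex Sidebar; `SafeCounter` and `countConcurrently` are hypothetical names, and an actor or serial queue would be equally reasonable choices.

```swift
import Foundation

/// A counter guarded by a lock so concurrent increments are not lost.
/// Marked @unchecked Sendable because the lock, not the compiler, enforces safety.
final class SafeCounter: @unchecked Sendable {
    private let lock = NSLock()
    private var value = 0

    func increment() {
        lock.lock()
        defer { lock.unlock() }
        value += 1
    }

    var current: Int {
        lock.lock()
        defer { lock.unlock() }
        return value
    }
}

/// Increments one shared counter from many threads and returns the final count.
func countConcurrently(iterations: Int) -> Int {
    let counter = SafeCounter()
    DispatchQueue.concurrentPerform(iterations: iterations) { _ in
        counter.increment()
    }
    return counter.current
}

print(countConcurrently(iterations: 1_000))
```

Without the lock, concurrent `value += 1` calls could interleave and the final count could come up short.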

Resolves preview-specific issues:

* Missing preview providers

* Environment requirements

* Device configuration

* Preview context setup

## Advanced Usage

For specialized error cases:

* Select the problematic code

* Use Command + L to start a chat

* Provide additional context

* Get customized solutions

When dealing with multiple related issues:

* Group similar errors using Command + Shift + L to add the context in the existing chat

* Apply fixes systematically

* Validate changes incrementally

Pro Tip: Use the chat interface for more complex error scenarios that might require additional context or explanation.

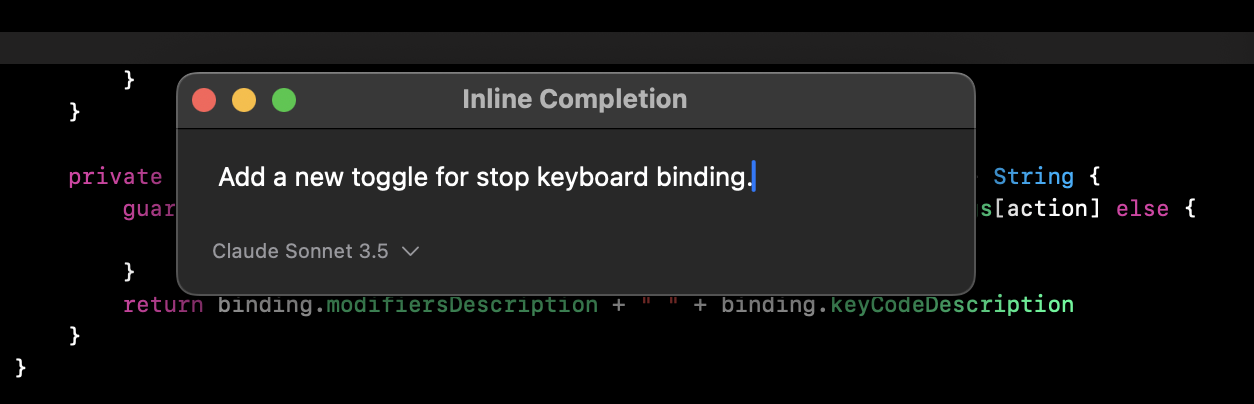

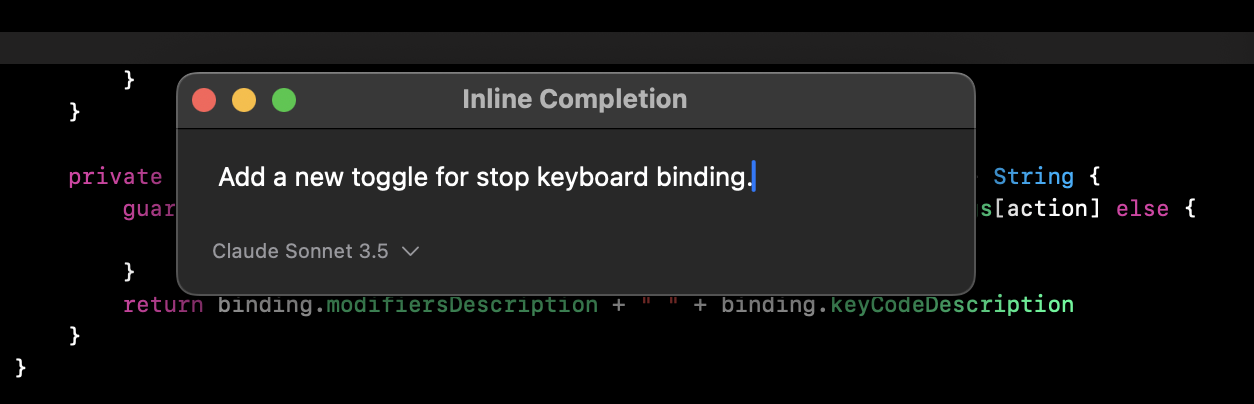

# Inline Code Suggestions

Source: https://alexcode.ai/docs/completions/inline-suggestions

Learn how to use Alex Sidebar's code completion features

## Quick Code Generation

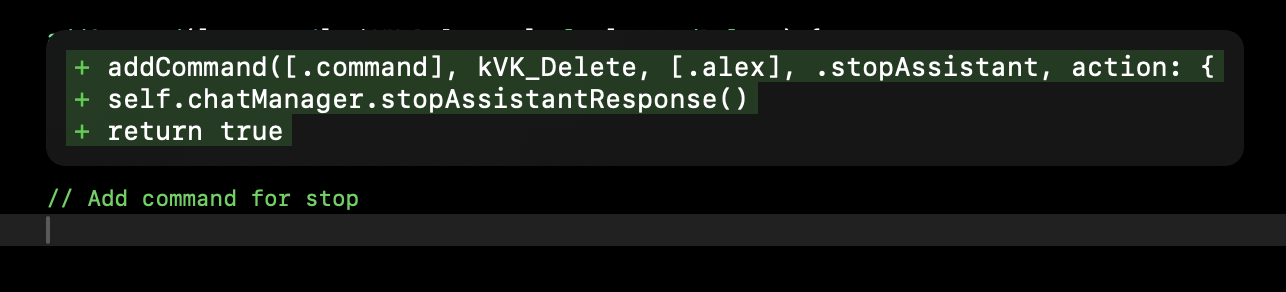

Alex Sidebar provides inline code suggestions and completions **directly in Xcode** to help you write code faster.

Trigger in-file suggestions to get AI-powered completions based on your current code context.

Get real-time suggestions as you type. Press Tab to accept the highlighted suggestion.

## Using Inline Completions

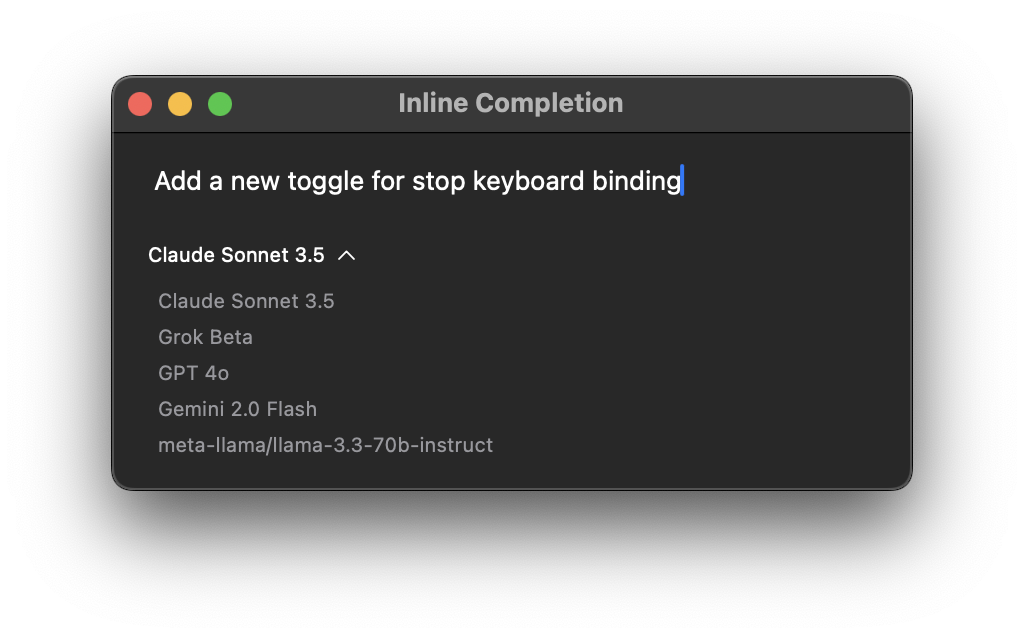

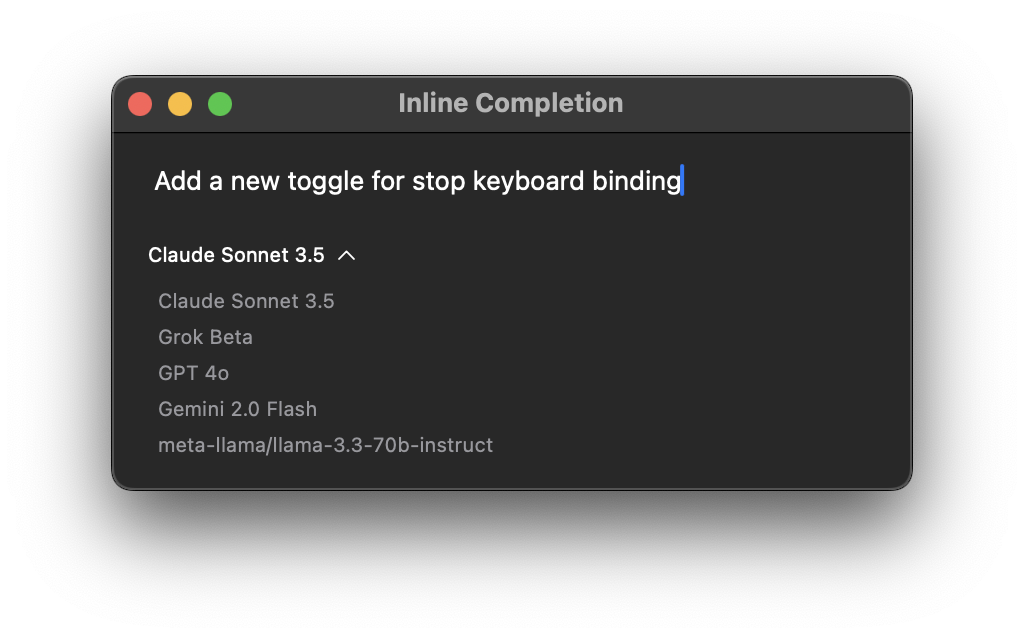

Position your cursor where you want to generate code (or select existing code) in **Xcode** and press **Command + K**.

The AI analyzes your current file context to provide relevant suggestions.

Choose from available AI models for completion:

* Claude 3.5 Sonnet: Advanced model for complex completions

* GPT-4: Balanced performance and accuracy

* Gemini Flash 2.0: Fast, lightweight completions

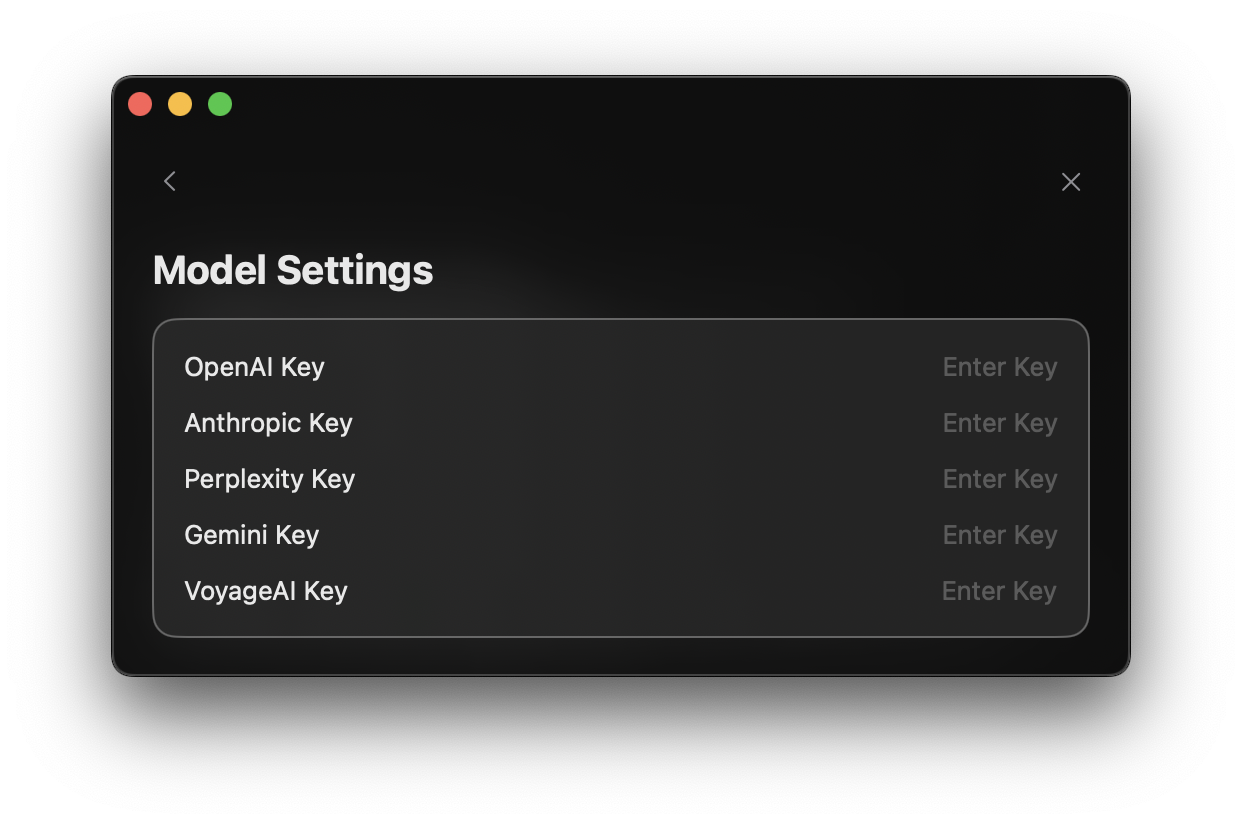

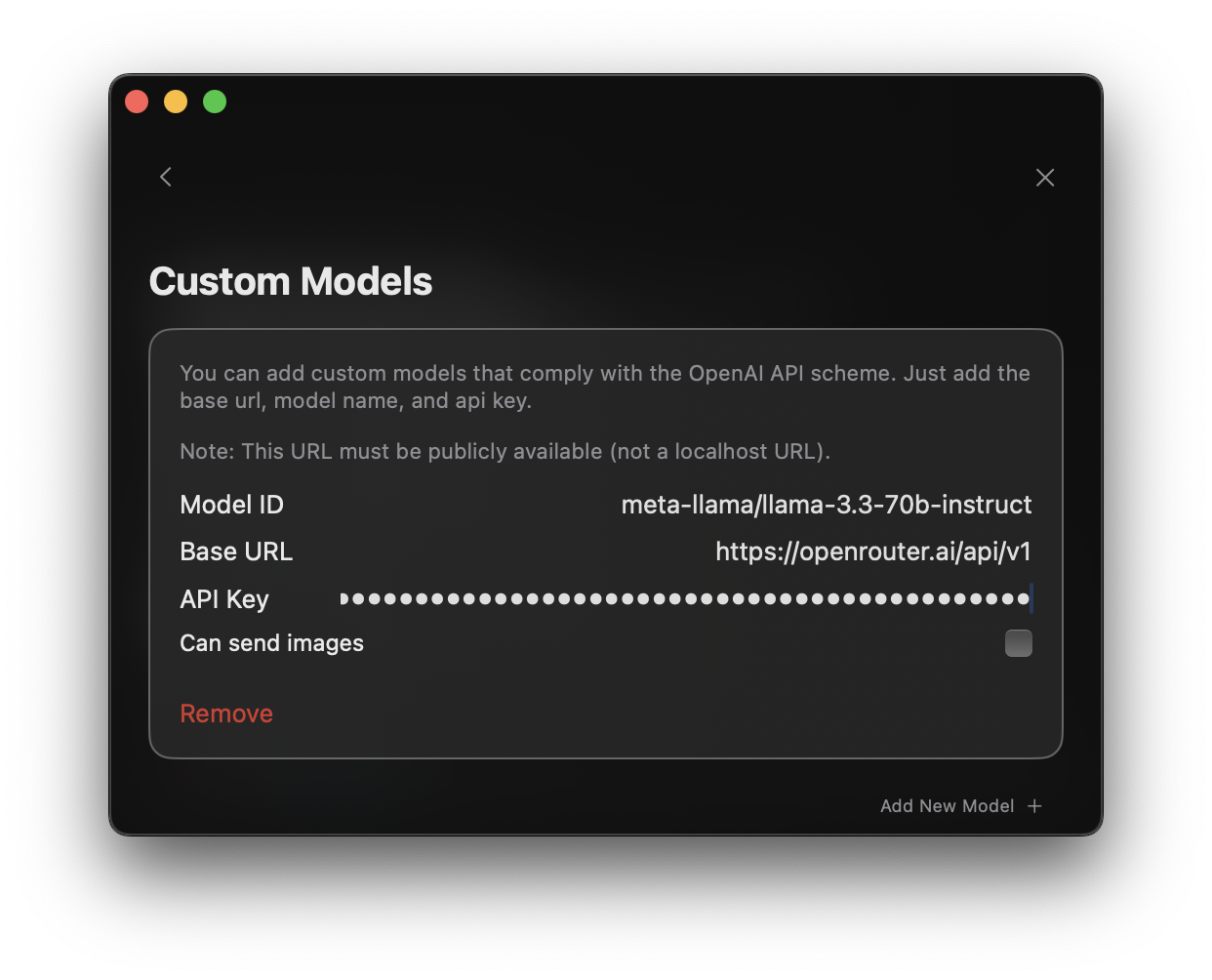

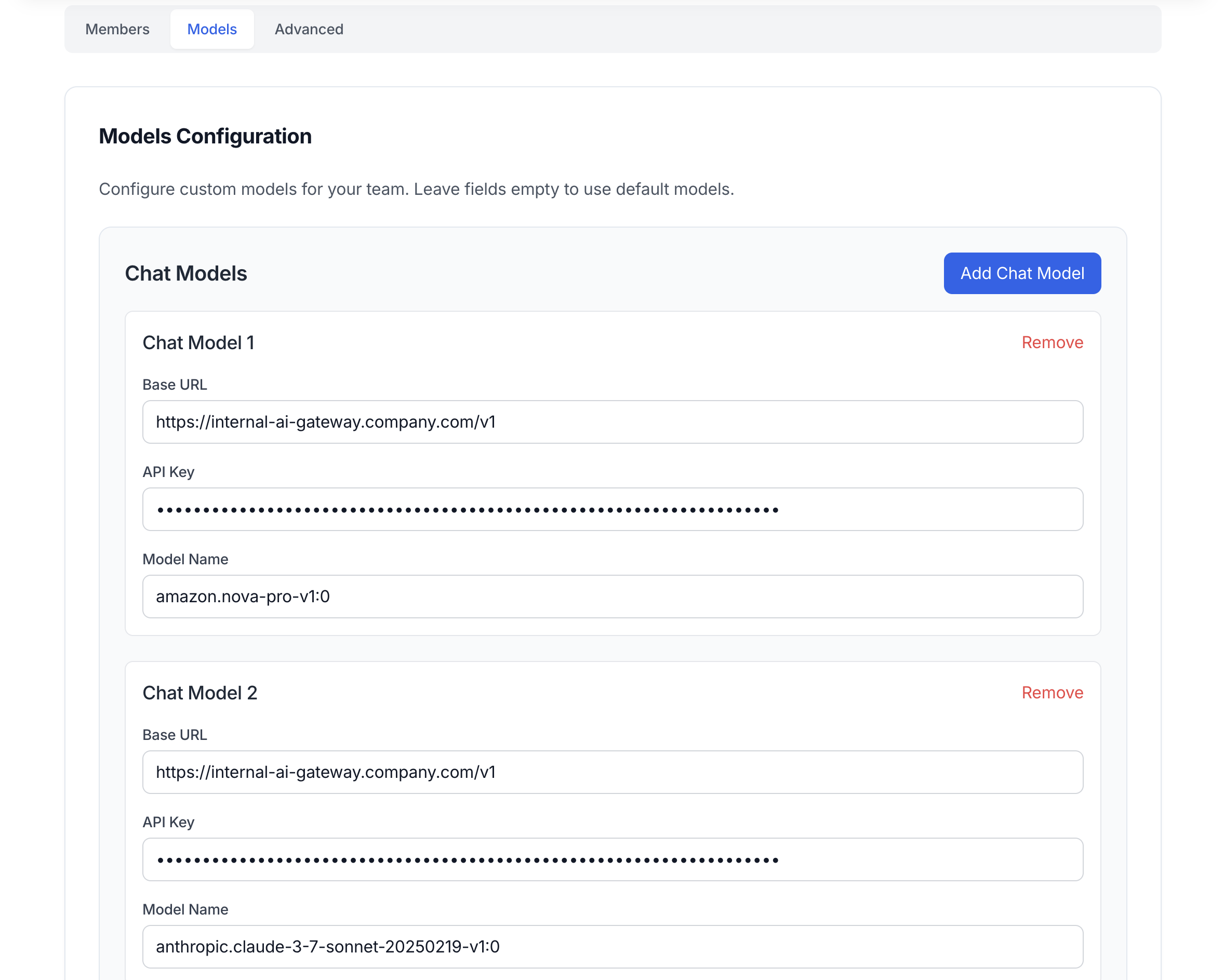

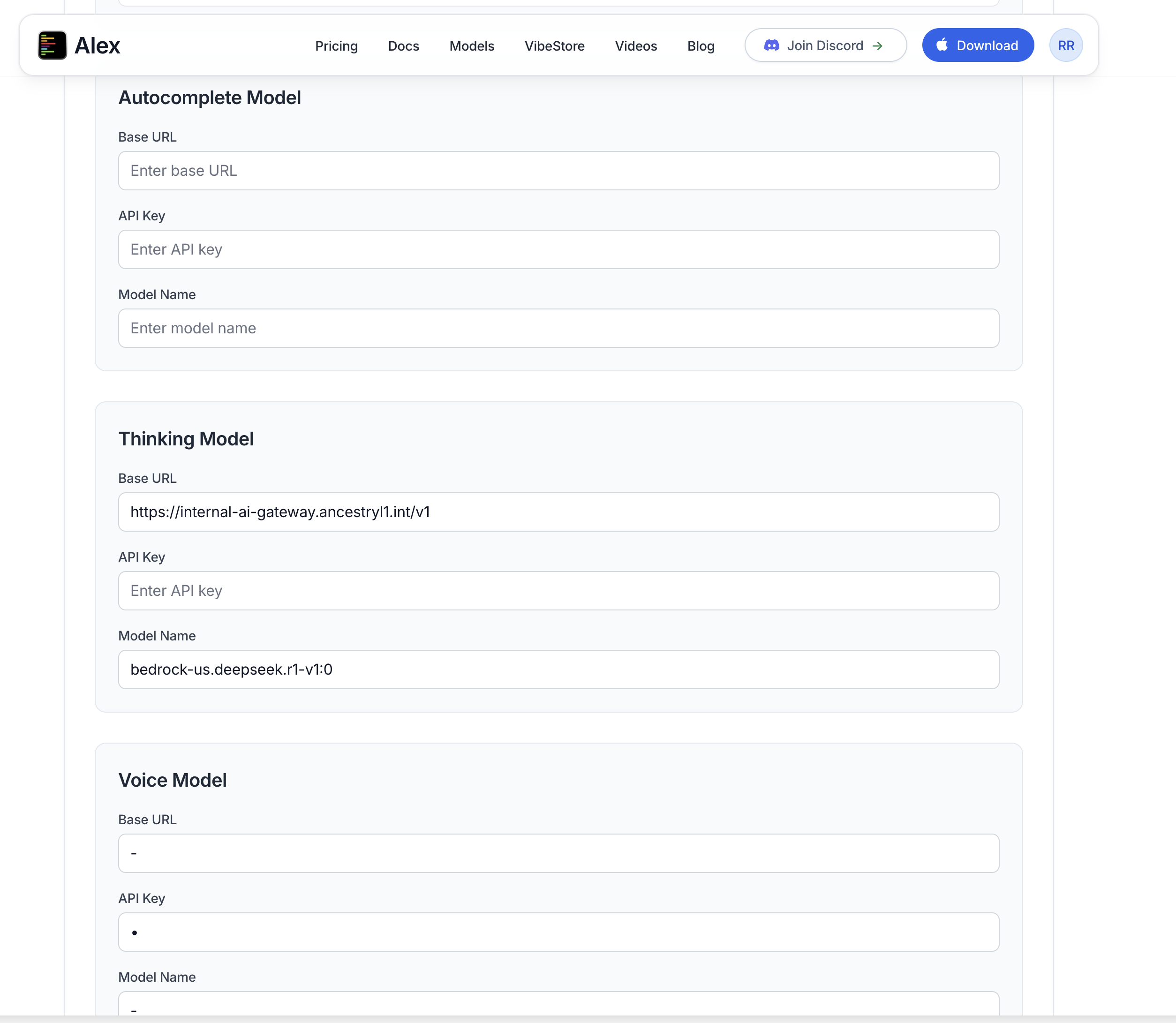

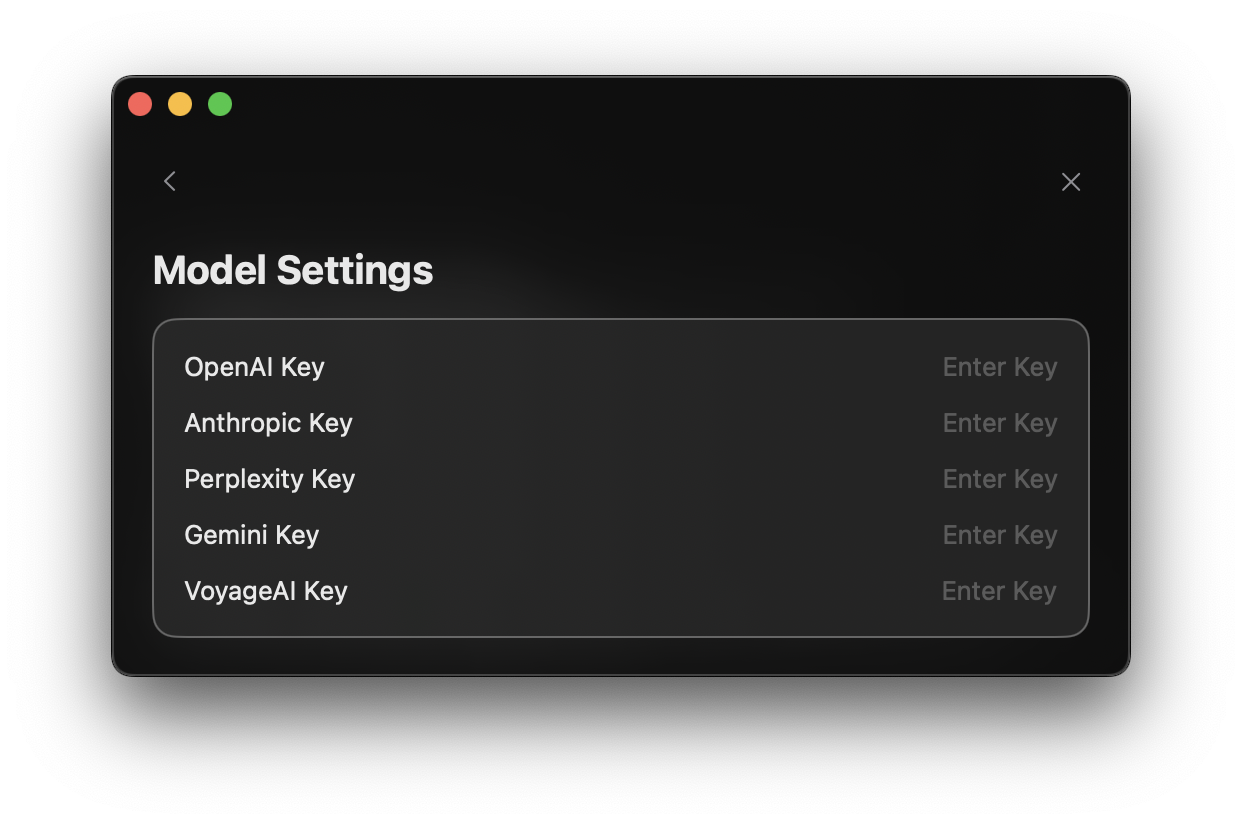

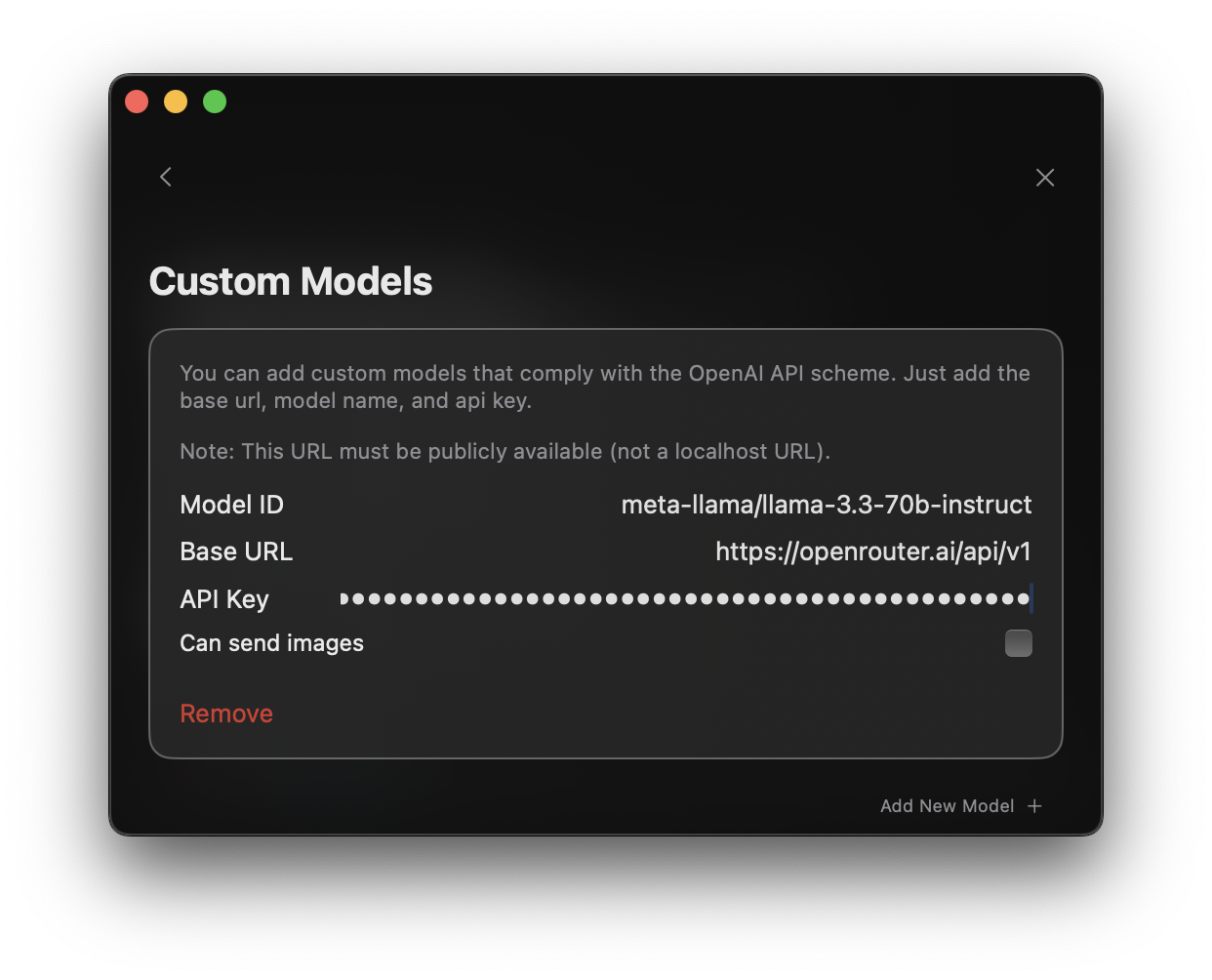

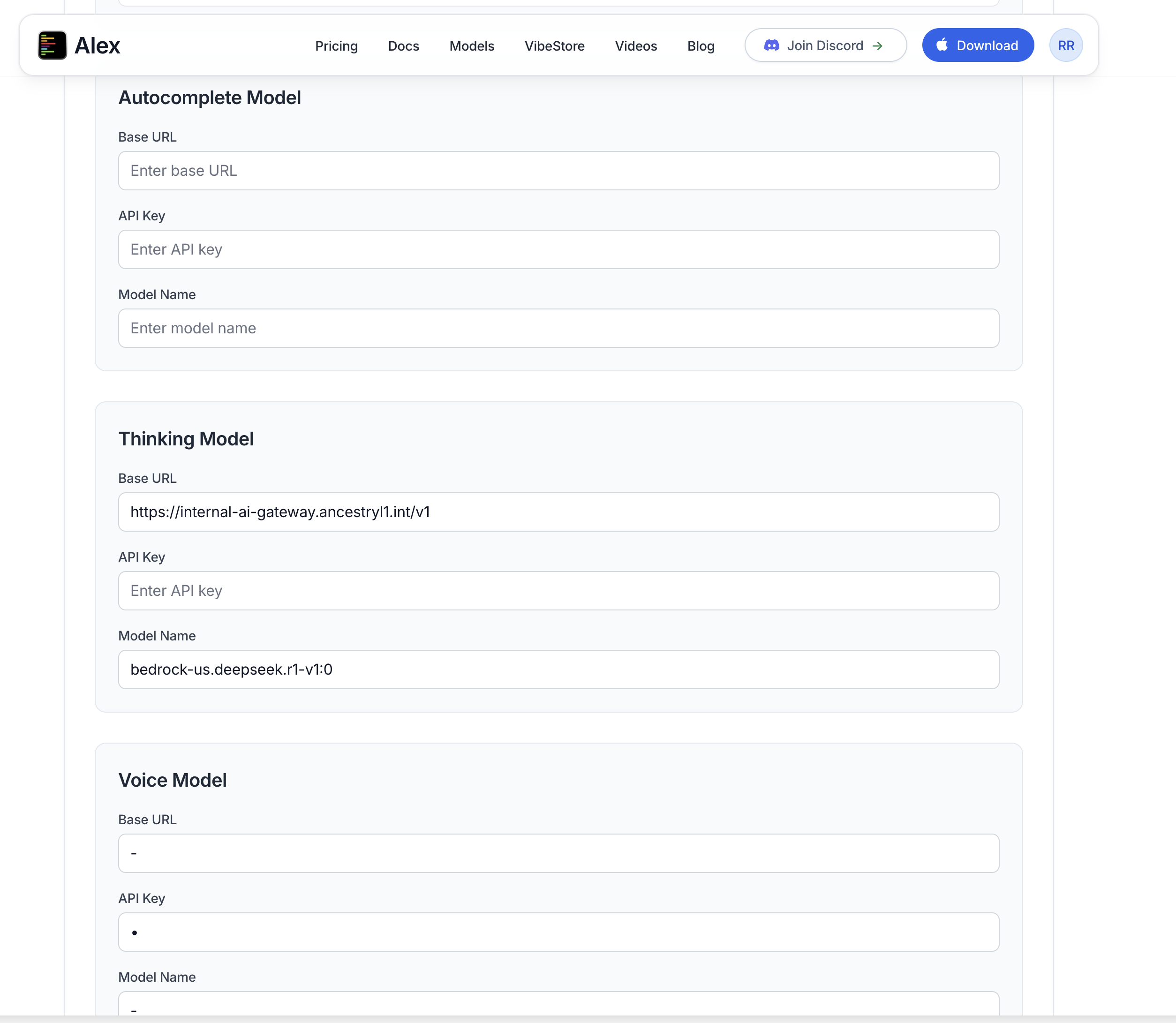

You can add additional models through the Model Settings, including:

* Local models via Ollama integration

* Custom API-compatible models

* Other OpenAI-compatible endpoints

See the [Model Configuration](/configuration/model-configuration) section for detailed setup instructions.

* Press Enter to accept the current suggestion

* Press Esc to dismiss suggestions

* Click retry button to generate new suggestions

## Tab Completion

Alex Sidebar provides high-performance autocomplete suggestions with just 300-350 ms latency, faster than GitHub Copilot and Xcode's built-in completions.

* Press Tab to accept suggestion

* Suggestions appear mid-sentence as you type

* Context-aware code suggestions

* Variable and method completions

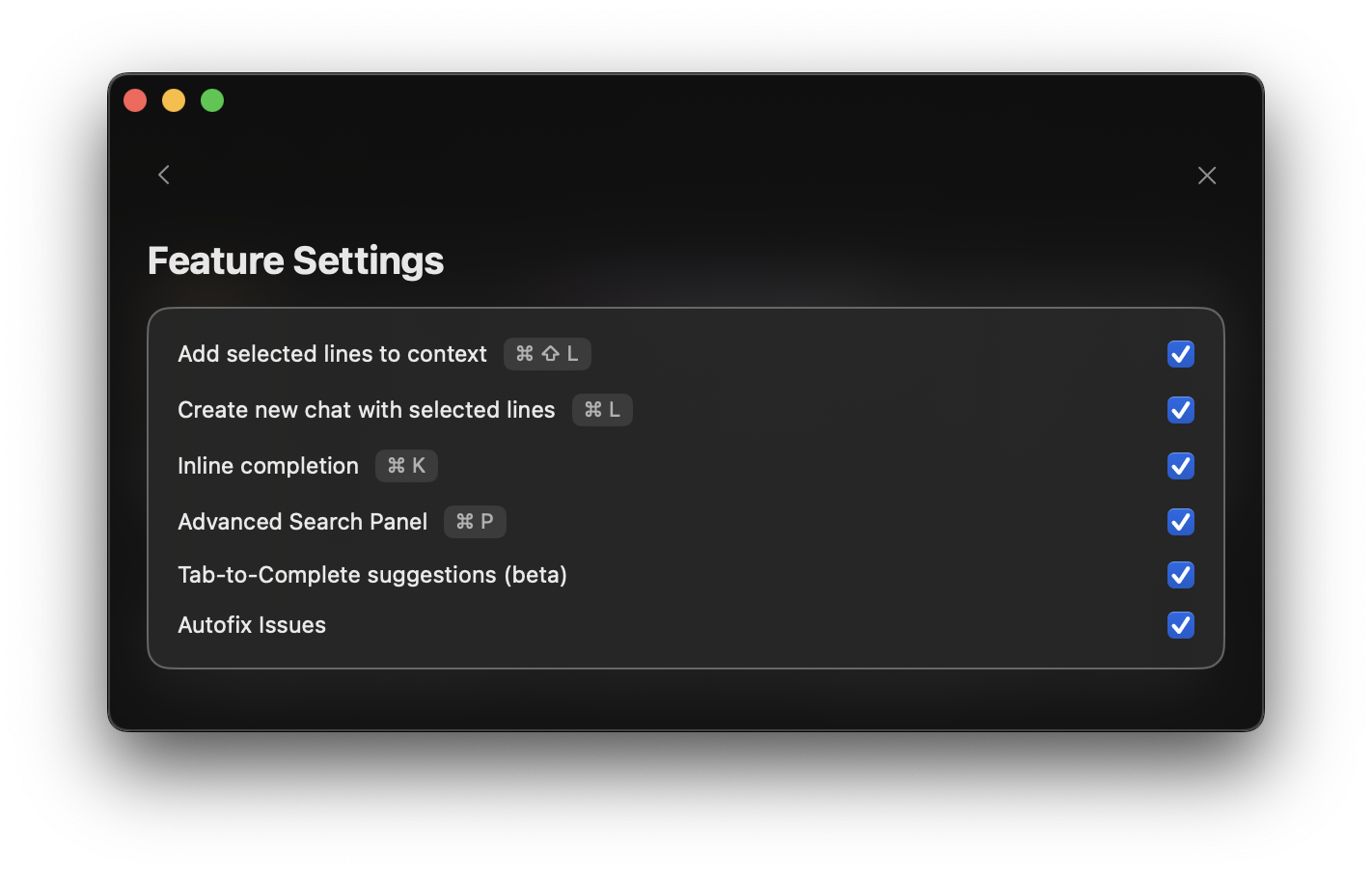

### Enabling Autocomplete

To configure autocomplete:

1. Open Settings

2. Go to Features & Keybindings

3. Find "Autocomplete Settings"

4. Configure the available options:

* **Enable Autocomplete**: Turn the feature on/off

* **Use Fast Autocomplete**: Switch to an optimized model that provides ~50% faster completions, ideal for rapid development

* **Inline completion**: Configure the ⌘ + K shortcut

The base autocomplete model has been optimized for better performance with large files (>600 lines).

## Best Practices

* Use Claude 3.5 Sonnet for complex logic

* Choose GPT-4 for balanced performance

* Select Gemini Flash 2.0 for quick completions

* Use Command + K for larger completions

* Try different models if results aren't ideal

* Use retry option for alternative suggestions

* Tab complete for quick suggestions

## SwiftUI Code Examples

Here are some practical examples of how Alex Sidebar's inline suggestions work with SwiftUI code:

Start typing a basic SwiftUI view and let inline suggestions help:

```swift
import SwiftUI

struct ContentView: View {
    var body: some View {
        // Type "VStack", wait a moment, and then press Tab
        VStack(spacing: 16) {
            Text("Hello, World!")
                .font(.title)
                .foregroundColor(.blue)

            Button("Tap me") {
                // Suggestions will offer action implementations
            }
        }
    }
}
```

Get intelligent suggestions for SwiftUI property wrappers:

```swift
import SwiftUI

struct TodoView: View {
    // Type "@St" and use Tab completion
    @State private var taskTitle = ""

    // Type "@Ob" for observed objects
    // (TodoViewModel is assumed to be an ObservableObject defined elsewhere)
    @ObservedObject var viewModel: TodoViewModel

    var body: some View {
        Form {
            TextField("Task Title", text: $taskTitle)
            // Suggestions will offer common modifiers
        }
    }
}
```

Get suggestions for custom view modifiers:

```swift
import SwiftUI

struct CardModifier: ViewModifier {
    // Type "func" and let suggestions complete the method
    func body(content: Content) -> some View {
        content
            .padding()
            .background(Color.white)
            .cornerRadius(10)
            .shadow(radius: 5)
    }
}

// Usage example with inline suggestions
// (wrapped in a view so the snippet compiles in a regular source file)
struct CardExample: View {
    var body: some View {
        Text("Card Content")
            .modifier(CardModifier())
    }
}
```

The examples above demonstrate common SwiftUI patterns where inline suggestions are particularly helpful. As you type these patterns, Alex Sidebar will suggest:

* Property wrapper completions

* View modifier chains

* Common SwiftUI view structures

* Closure completions

While AI suggestions are powerful, always review generated code to ensure it meets your requirements and follows your project's conventions.

# Tab Completions

Source: https://alexcode.ai/docs/completions/multiline-tab

Write code faster with intelligent, context-aware completions that span multiple lines

Tab completions in Alex Sidebar go beyond simple autocomplete. They understand your code's context and can suggest entire blocks and complete functions based on what you're writing.

To enable Multiline suggestions, which is currently an alpha feature, go to:

1. Settings

2. Features & Keybindings

3. Enable Multiline Suggestions

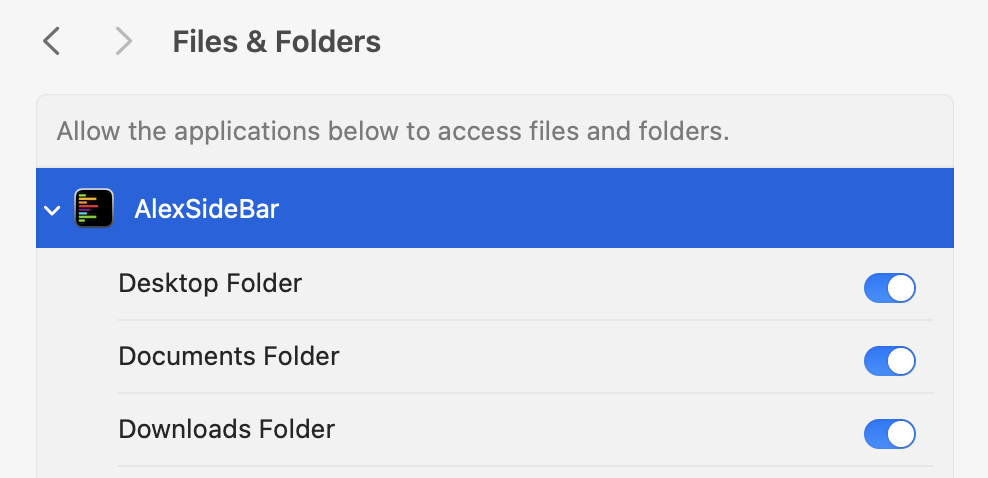

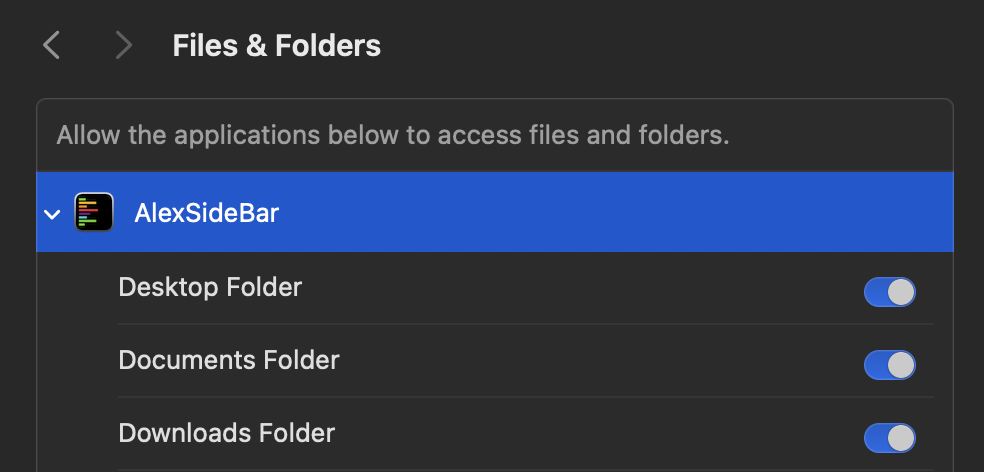

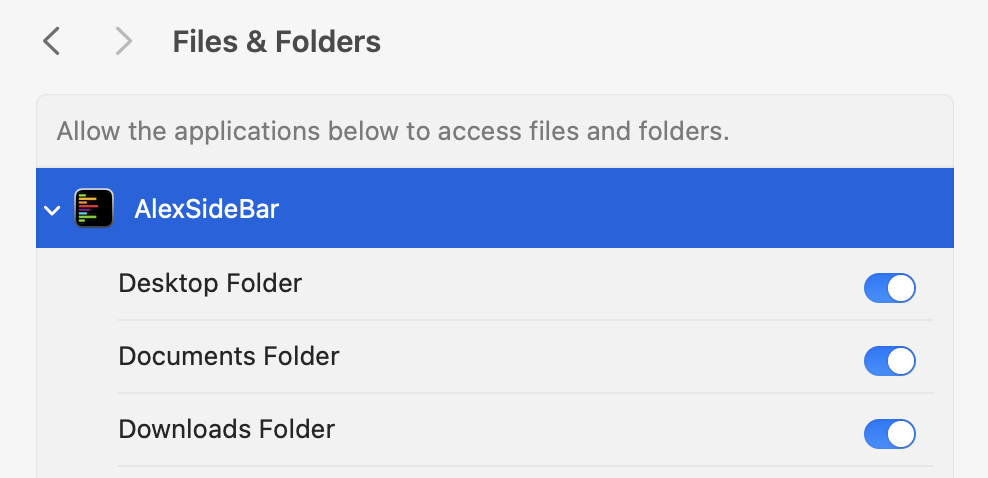

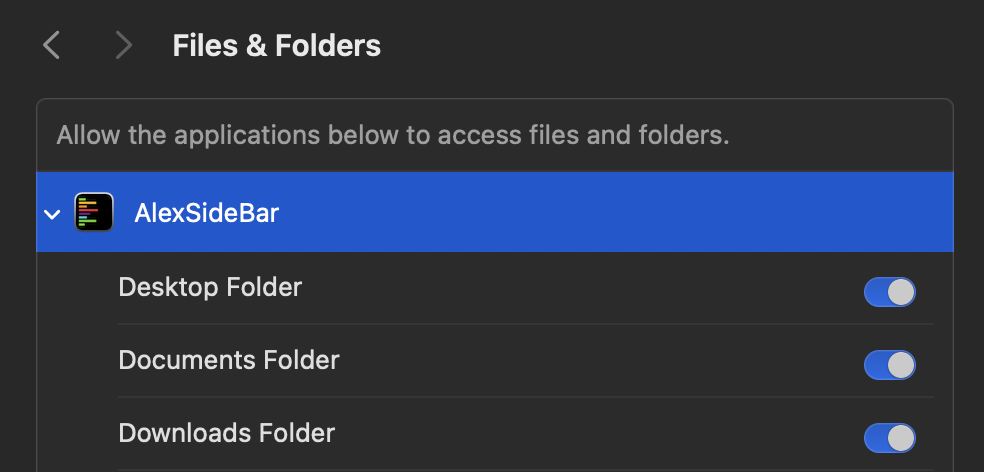

Because multiline suggestions require screen access, you will need to grant screen recording access to AlexSideBar in your system preferences.

Multiline tab (alpha) is available from version 3.3.

## How Tab Works

As you type, Alex Sidebar analyzes your code and suggests completions that appear as completion text. Press **Tab** to accept the suggestion and watch as it intelligently fills in multiple lines at once.

To avoid interference with Xcode, disable Apple's predictive code completion: go to Xcode → Settings → Text Editing → Editing and uncheck "Use code completion".

## Key Features

* Understands your entire file and recent edits to provide relevant completions

* Suggests complete code blocks, not just single lines

* Considers other files in your project when making suggestions

* Provides low-latency suggestions that keep up with your typing speed

## Using Tab Completions

### Basic Usage

Write your code normally. Alex Sidebar watches for opportunities to help.

Completion text appears showing what Alex suggests. This could be:

* Completing the current line

* Adding multiple lines to finish a function

When a suggestion appears, you can respond in one of these ways:

* **Tab**: Accept the entire suggestion

* **Esc**: Dismiss the suggestion

* Keep typing to ignore and get new suggestions
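To picture the multi-line case, consider this hypothetical example: the developer types only the function signature, and a suggestion of the kind described above might fill in the body. The function and its contents are invented for illustration; actual suggestions depend on your code and context.

```swift
// Typed by the developer:
func average(of values: [Double]) -> Double? {
    // A multi-line suggestion might fill in the rest of the body:
    guard !values.isEmpty else { return nil }
    let total = values.reduce(0, +)
    return total / Double(values.count)
}
```

Pressing **Tab** would accept all three suggested lines at once, while continuing to type would discard them.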

### Advanced Features

#### Learning from Context

Tab uses your recent edits and previous tab acceptances to provide more relevant suggestions.

#### Contextual Understanding

Tab completions consider:

* Your recent edits and what you're working on

* The structure of your current function or class

* Import statements and available APIs

* Your coding style and naming conventions

* Xcode's build errors and warnings

Tab remembers your previous accepts across different files. When you accept a suggestion in one file, it learns from that pattern and applies similar suggestions in other files you work on.

## Configuration

### Enable/Disable Tab

Toggle Tab completions in Settings → Features & Keybindings → Enable Autocomplete under the "Editor Features" section.

Tab completions work best when codebase indexing is enabled. This helps Alex understand your project structure and provide more accurate suggestions.

You can also quickly enable/disable it in the system menubar when Alex is focused (Autocomplete section).

## Troubleshooting

### Suggestions not appearing?

* Check that Tab completions are enabled in settings

* Try manually triggering with Tab key

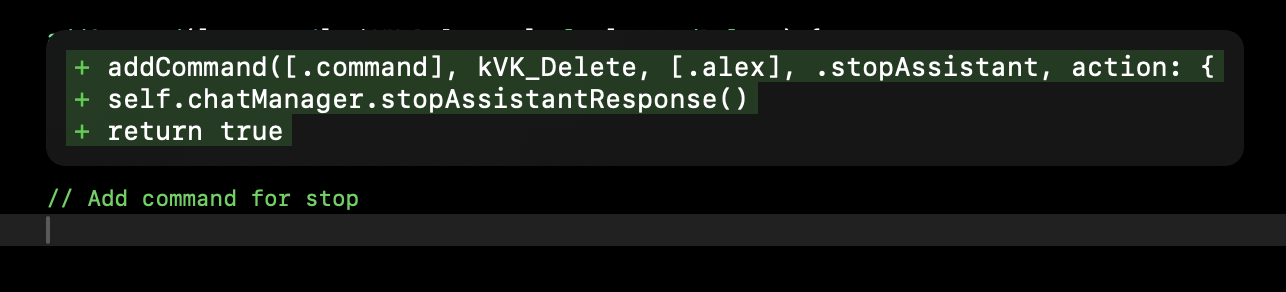

# Code & Context Shortcuts

Source: https://alexcode.ai/docs/configuration/code-context-shortcuts

Keyboard shortcuts for managing code interactions and chat context

These shortcuts can be customized by clicking on any default shortcut in the Settings panel.

* **Command + L**: Start a new chat with your selected code

* **Command + Shift + L**: Add selected code to your current chat

* **Command + N**: Create a fresh chat (without code)

* **Command + Delete**: Stop the current generation

Just getting started? The **Command + L** shortcut is essential for starting AI chats about your code directly from Xcode.

# Codebase Indexing

Source: https://alexcode.ai/docs/configuration/codebase-indexing

Learn how to manage and optimize codebase indexing for better AI assistance

When you open a project in Alex Sidebar, it automatically analyzes and indexes your code files to better understand your codebase.

Think of this as creating a smart map of your code: Alex reads through each file and uses that information to give more accurate, relevant answers to your queries.

Alex Sidebar provides high-performance autocomplete suggestions with just 300-350ms latency - faster than GitHub Copilot and Xcode's built-in completions.

* Press Tab to accept suggestion

* Suggestions appear mid-sentence as you type

* Context-aware code suggestions

* Variable and method completions

### Enabling Autocomplete

To configure autocomplete:

1. Open Settings

2. Go to Features & Keybindings

3. Find "Autocomplete Settings"

4. Configure the available options:

* **Enable Autocomplete**: Turn the feature on/off

* **Use Fast Autocomplete**: Switch to an optimized model that provides \~50% faster completions, ideal for rapid development

* **Inline completion**: Configure the ⌘ + K shortcut

The base autocomplete model has been optimized for better performance with large files (>600 lines).

## Best Practices

* Use Claude 3.5 Sonnet for complex logic

* Choose GPT-4 for balanced performance

* Select Gemini Flash 2.0 for quick completions

* Use Command + K for larger completions

* Try different models if results aren't ideal

* Use retry option for alternative suggestions

* Tab complete for quick suggestions

## SwiftUI Code Examples

Here are some practical examples of how Alex Sidebar's inline suggestions work with SwiftUI code:

Start typing a basic SwiftUI view and let inline suggestions help:

```swift

struct ContentView: View {

var body: some View {

// Type "VStack", wait for a bit and then press TAB

VStack(spacing: 16) {

Text("Hello, World!")

.font(.title)

.foregroundColor(.blue)

Button("Tap me") {

// Suggestions will offer action implementations

}

}

}

}

```

Get intelligent suggestions for SwiftUI property wrappers:

```swift

struct TodoView: View {

// Type "@St" and use Tab completion

@State private var taskTitle = ""

// Type "@Ob" for Observable properties

@ObservedObject var viewModel: TodoViewModel

var body: some View {

Form {

TextField("Task Title", text: $taskTitle)

// Suggestions will offer common modifiers

}

}

}

```

Get suggestions for custom view modifiers:

```swift

struct CardModifier: ViewModifier {

// Type "func" and let suggestions complete the method

func body(content: Content) -> some View {

content

.padding()

.background(Color.white)

.cornerRadius(10)

.shadow(radius: 5)

}

}

// Usage example with inline suggestions

Text("Card Content")

.modifier(CardModifier())

```

The examples above demonstrate common SwiftUI patterns where inline

suggestions are particularly helpful. As you type these patterns, Alex Sidebar

will suggest: - Property wrapper completions - View modifier chains - Common

SwiftUI view structures - Closure completions

While AI suggestions are powerful, always review generated code to ensure it

meets your requirements and follows your project's conventions.

# Tab Completions

Source: https://alexcode.ai/docs/completions/multiline-tab

Write code faster with intelligent, context-aware completions that span multiple lines

Tab completions in Alex Sidebar go beyond simple autocomplete. They understand your code's context and can suggest entire blocks and complete functions based on what you're writing.

To enable Multiline suggestions, which is currently an alpha feature, go to:

1. Settings

2. Features & Keybindings

3. Enable Multiline Suggestions

Because multline suggestions requires screen access, you will need to enable screen recording access to AlexSideBar in your system preferences.

Multiline tab (alpha) is available from version 3.3

## How Tab Works

As you type, Alex Sidebar analyzes your code and suggests completions that appear as completion text. Press **Tab** to accept the suggestion and watch as it intelligently fills in multiple lines at once.

To disable interferece with Xcode, please disable Apple's predictive code completion in Xcode → Settings → Text Editing → Editing → uncheck "Use code completion".

## Key Features

Understands your entire file and recent edits to provide relevant completions

Suggests complete code blocks, not just single lines

Considers other files in your project when making suggestions

Low-latency suggestions that keep up with your typing speed

## Using Tab Completions

### Basic Usage

Write your code normally. Alex Sidebar watches for opportunities to help.

Completion text appears showing what Alex suggests. This could be:

* Completing the current line

* Adding multiple lines to finish a function

Then accept or dismiss the suggestion:

* **Tab**: Accept the entire suggestion

* **Esc**: Dismiss the suggestion

* Keep typing to ignore and get new suggestions
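As a purely illustrative sketch of the flow above, suppose you type the signature of `totalPrice(of:)` below. A multiline suggestion might then propose the whole body at once. The `Item` type and the function are hypothetical, and real suggestions depend on your code's context.

```swift
// Hypothetical model type, used only for this illustration.
struct Item {
    let name: String
    let price: Double
}

// You type the signature...
func totalPrice(of items: [Item]) -> Double {
    // ...and a multiline suggestion might propose a body like this,
    // which you could accept in one step with Tab.
    var total = 0.0
    for item in items {
        total += item.price
    }
    return total
}
```

Review an accepted body like any other generated code before relying on it.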

### Advanced Features

#### Learning from Context

Tab uses your recent edits and previous tab acceptances to provide more relevant suggestions.

#### Contextual Understanding

Tab completions consider:

* Your recent edits and what you're working on

* The structure of your current function or class

* Import statements and available APIs

* Your coding style and naming conventions

* Xcode's build errors and warnings

Tab remembers your previous accepts across different files. When you accept a suggestion in one file, it learns from that pattern and applies similar suggestions in other files you work on.

## Configuration

### Enable/Disable Tab

Toggle Tab completions in Settings → Features & Keybindings → Enable Autocomplete under the "Editor Features" section.

Tab completions work best when codebase indexing is enabled. This helps Alex understand your project structure and provide more accurate suggestions.

You can also quickly enable or disable it from the system menu bar when Alex is focused (Autocomplete section).

## Troubleshooting

### Suggestions not appearing?

* Check that Tab completions are enabled in settings

* Try triggering a suggestion manually with the Tab key

# Code & Context Shortcuts

Source: https://alexcode.ai/docs/configuration/code-context-shortcuts

Keyboard shortcuts for managing code interactions and chat context

These shortcuts can be customized by clicking on any default shortcut in the Settings panel.

* **Command + L**: Start a new chat with your selected code

* **Command + Shift + L**: Add selected code to your current chat

* **Command + N**: Create a fresh chat (without code)

* **Command + Delete**: Stop the current generation

Just getting started? The **Command + L** shortcut is essential for starting AI chats about your code directly from Xcode.

# Codebase Indexing

Source: https://alexcode.ai/docs/configuration/codebase-indexing

Learn how to manage and optimize codebase indexing for better AI assistance

When you open a project in Alex Sidebar, it automatically analyzes and indexes your code files to better understand your codebase.

Think of this as creating a smart map of your code: Alex reads through each file and then uses that information to give more accurate and relevant answers to your queries.

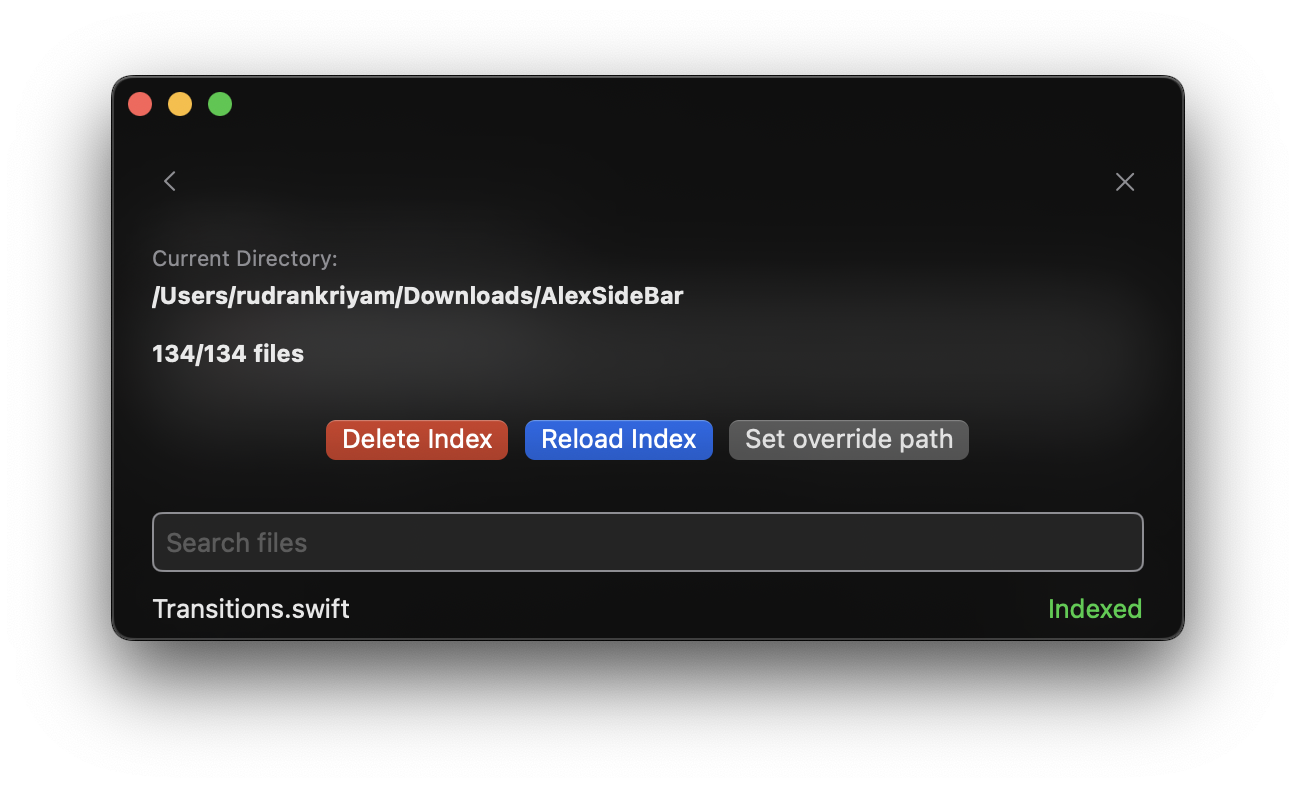

### What is Indexing?

Indexing is the process where Alex:

* Scans through all your code files

* Creates special embeddings for each file

* Stores these embeddings to quickly reference later
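The documentation does not describe how Alex computes or stores its embeddings. As a rough sketch of the general idea only, the example below builds a toy bag-of-words vector per file and ranks files by cosine similarity to a query. The names `embed`, `cosine`, and `ToyIndex` are hypothetical and are not part of Alex Sidebar. Real embedding models produce dense vectors rather than word counts.

```swift
import Foundation

/// Toy "embedding": counts of lowercase alphanumeric tokens.
/// This is only a stand-in for a real embedding model.
func embed(_ text: String) -> [String: Double] {
    var counts: [String: Double] = [:]
    let tokens = text.lowercased()
        .components(separatedBy: CharacterSet.alphanumerics.inverted)
        .filter { !$0.isEmpty }
    for token in tokens {
        counts[token, default: 0] += 1
    }
    return counts
}

/// Cosine similarity between two sparse vectors.
func cosine(_ a: [String: Double], _ b: [String: Double]) -> Double {
    let dot = a.reduce(0.0) { $0 + $1.value * (b[$1.key] ?? 0) }
    let normA = sqrt(a.values.reduce(0.0) { $0 + $1 * $1 })
    let normB = sqrt(b.values.reduce(0.0) { $0 + $1 * $1 })
    guard normA > 0, normB > 0 else { return 0 }
    return dot / (normA * normB)
}

/// Hypothetical in-memory index: file path -> stored vector.
struct ToyIndex {
    var vectors: [String: [String: Double]] = [:]

    /// Scans one file's contents and stores its vector for later lookups.
    mutating func add(path: String, contents: String) {
        vectors[path] = embed(contents)
    }

    /// Returns paths ranked by similarity to the query, best first.
    /// Files with no overlap at all are left out.
    func search(_ query: String, limit: Int = 3) -> [String] {
        let q = embed(query)
        return vectors
            .map { (path: $0.key, score: cosine(q, $0.value)) }
            .filter { $0.score > 0 }
            .sorted { $0.score > $1.score }
            .prefix(limit)
            .map { $0.path }
    }
}

var index = ToyIndex()
index.add(path: "LoginView.swift",
          contents: "struct LoginView: View { var body: some View { Text(title) } }")
index.add(path: "NetworkClient.swift",
          contents: "final class NetworkClient { func fetch(url: URL) async throws -> Data }")
print(index.search("fetch data from url")) // prints ["NetworkClient.swift"]
```

Storing the vectors up front is what makes later lookups fast: a query is compared against the stored vectors instead of re-reading every file.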

### Why is it Important?

This indexing helps Alex:

* Give you more accurate code suggestions

* Better understand the context when you ask questions

* Find relevant code examples from your own project

* Make smarter recommendations based on your actual codebase

## Code Maps

Building on top of basic indexing, Alex now automatically parses your Swift project and constructs a "Code Map" for the files you are chatting about. This means Alex has an understanding of how files interact with each other, resulting in better code generation.

When you start a chat, Alex:

* Analyzes file dependencies and imports

* Maps class and type relationships

* Understands protocol conformances

* Tracks function calls between files

This deeper understanding helps Alex:

* Generate more accurate code suggestions

* Provide better refactoring recommendations

* Maintain consistency across related files

* Respect your project's architecture

Code Maps are enabled by default for all chats, so you don't need to do anything extra to get these benefits!
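To make the idea of a Code Map more concrete, here is a minimal sketch that assumes each file has already been reduced to the types it declares and the types it references. `SourceFile` and `buildCodeMap` are hypothetical names chosen for this illustration. How Alex actually parses Swift projects is not documented here.

```swift
/// Hypothetical, simplified view of a source file for this sketch.
struct SourceFile {
    let path: String
    let declaredTypes: Set<String>   // types the file defines
    let referencedTypes: Set<String> // types the file uses
}

/// Maps each file to the other files that declare the types it references.
func buildCodeMap(_ files: [SourceFile]) -> [String: Set<String>] {
    // First pass: record which file declares each type.
    var owner: [String: String] = [:]
    for file in files {
        for typeName in file.declaredTypes {
            owner[typeName] = file.path
        }
    }
    // Second pass: resolve each file's references to the declaring files.
    var map: [String: Set<String>] = [:]
    for file in files {
        let dependencies = file.referencedTypes
            .compactMap { owner[$0] }
            .filter { $0 != file.path }
        map[file.path] = Set(dependencies)
    }
    return map
}

let files = [
    SourceFile(path: "User.swift", declaredTypes: ["User"], referencedTypes: []),
    SourceFile(path: "UserStore.swift", declaredTypes: ["UserStore"], referencedTypes: ["User"]),
    SourceFile(path: "ProfileView.swift", declaredTypes: ["ProfileView"],
               referencedTypes: ["User", "UserStore"]),
]
let codeMap = buildCodeMap(files)
// codeMap["ProfileView.swift"] contains "User.swift" and "UserStore.swift"
```

With a map like this, a chat about `ProfileView.swift` could also pull in `User.swift` and `UserStore.swift` as related context.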

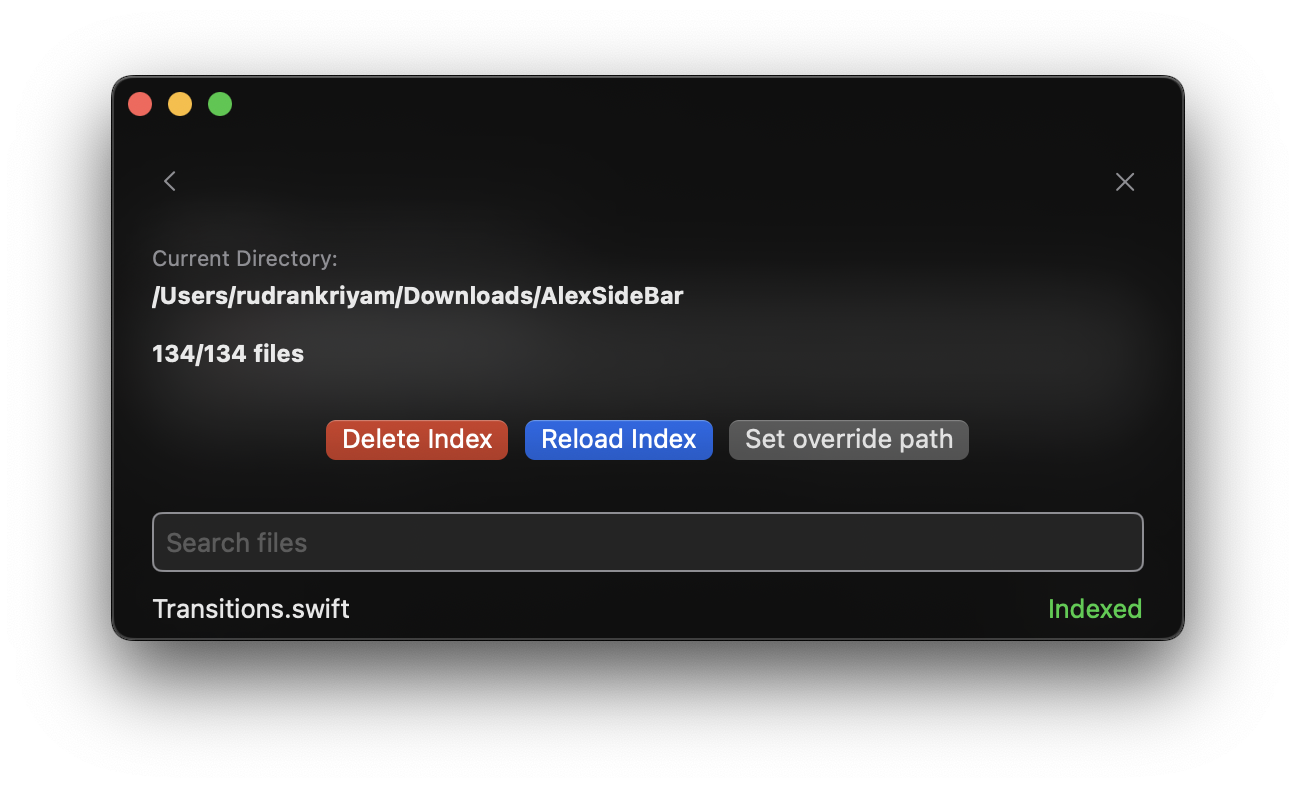

## Managing Indexes

Open the "Indexed Files" section in Settings to access the indexing UI.

Here you can:

* View all indexed files

* Check indexing status

* Manage existing indexes

* Add multiple folders for indexing

To index additional folders beyond your main Xcode project:

1. Under "Search Files", click the **"Additional Folders"** collapsible button

2. Click the **plus (+) button** to select a folder

3. Choose the folder you want to index (e.g., server repo, Android repo)

4. Click **"Reload Index"** to start indexing the new folder

5. If Alex has trouble recognizing the folder, click **"Delete Index"** and then **"Reload Index"**, or try restarting the app.

This allows Alex to understand multiple codebases simultaneously, making it helpful when working with:

* Backend server repositories

* Android projects alongside iOS

* Shared libraries or dependencies

* Any other related code folders

The following operations are available:

* **Reload Index**: Initialize or refresh indexing for files

* **Delete Index**: Remove existing index data

* **Add folders**: Include additional directories for comprehensive indexing

## Automatic Synchronization

Your codebase index automatically updates when:

* Files are modified

* New files are added

* Files are deleted

* Git branches are switched

This ensures Alex Sidebar always has the most up-to-date information about your codebase!
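One common way to keep an index in sync is to compare a snapshot of the project before and after a change and re-index only what differs. The sketch below assumes each file is represented by a content fingerprint, such as a hash. `IndexChanges` and `diffSnapshots` are hypothetical names, and this is not a description of Alex's actual synchronization mechanism.

```swift
/// Paths that need attention after comparing two snapshots of the project.
struct IndexChanges: Equatable {
    var added: Set<String> = []
    var modified: Set<String> = []
    var deleted: Set<String> = []
}

/// Compares snapshots that map file path -> content fingerprint.
func diffSnapshots(old: [String: String], new: [String: String]) -> IndexChanges {
    var changes = IndexChanges()
    for (path, fingerprint) in new {
        if let previous = old[path] {
            // Same path, different content: the file was modified.
            if previous != fingerprint { changes.modified.insert(path) }
        } else {
            // Path did not exist before: the file was added.
            changes.added.insert(path)
        }
    }
    // Paths present before but missing now were deleted.
    for path in old.keys where new[path] == nil {
        changes.deleted.insert(path)
    }
    return changes
}

let before = ["A.swift": "h1", "B.swift": "h2", "C.swift": "h3"]
let after = ["A.swift": "h1", "B.swift": "h2-changed", "D.swift": "h4"]
let changes = diffSnapshots(old: before, new: after)
// changes.added == ["D.swift"], changes.modified == ["B.swift"], changes.deleted == ["C.swift"]
```

A Git branch switch fits the same model: it shows up as a batch of added, modified, and deleted files.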

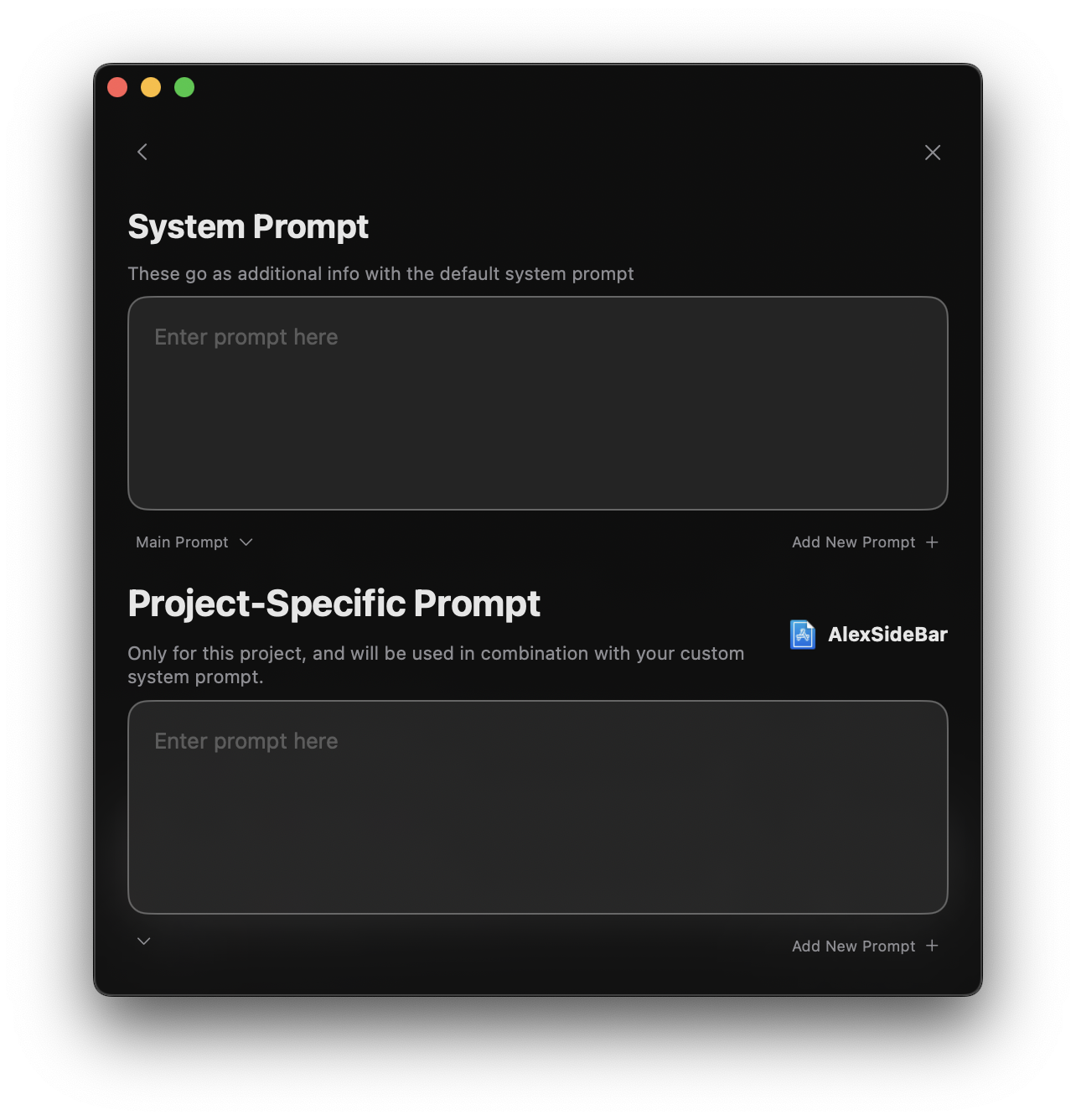

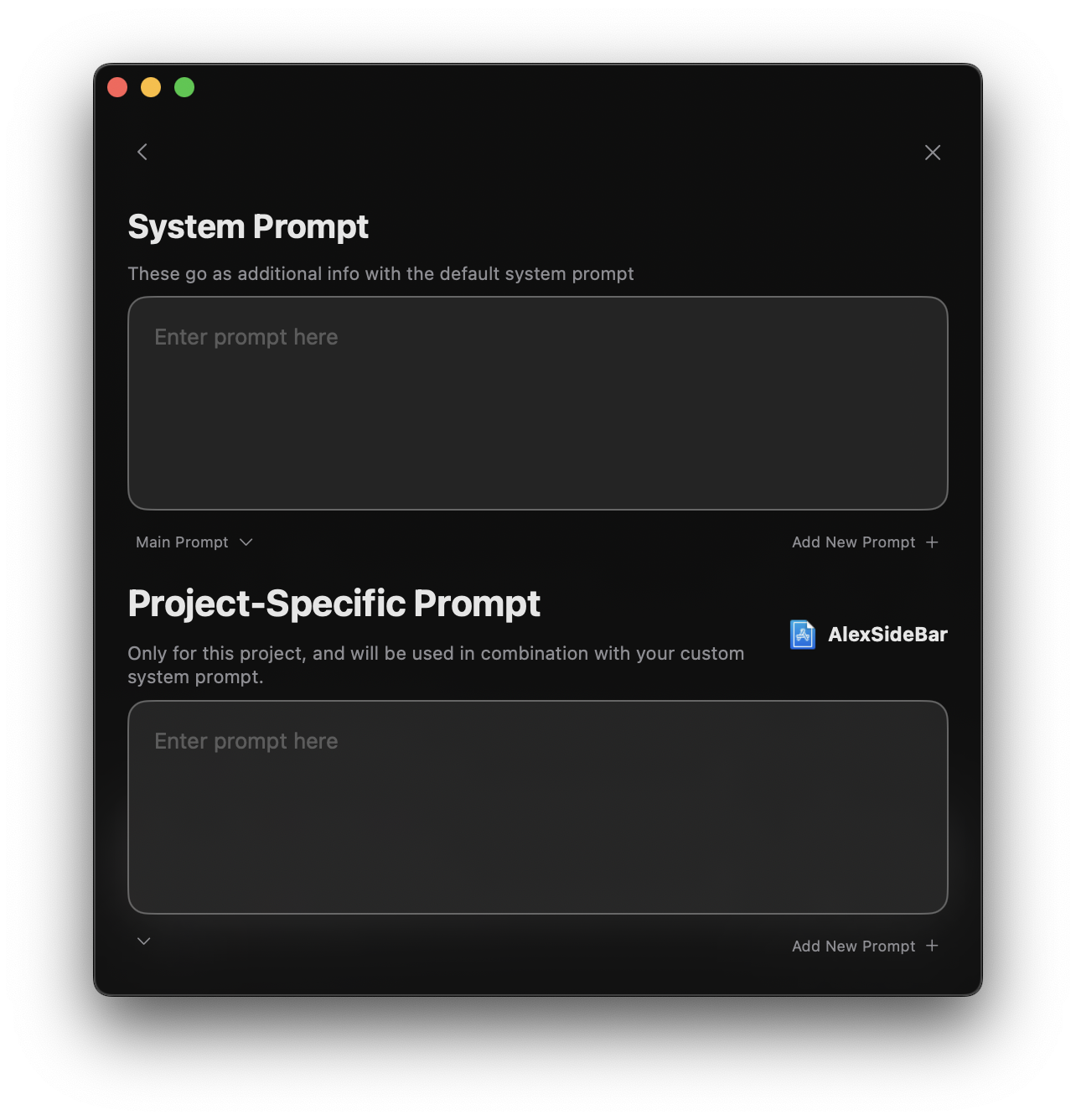

# Custom Prompts

Source: https://alexcode.ai/docs/configuration/custom-prompts

Make Alex work better for you with custom prompts

Alex supports two kinds of prompts that work together to help you code:

* **System prompts**: Global prompts that apply across all projects. These define your general preferences and requirements.

* **Project prompts**: Specific prompts that apply only to the current project, working in combination with system prompts.

## Prompt Configuration Interface

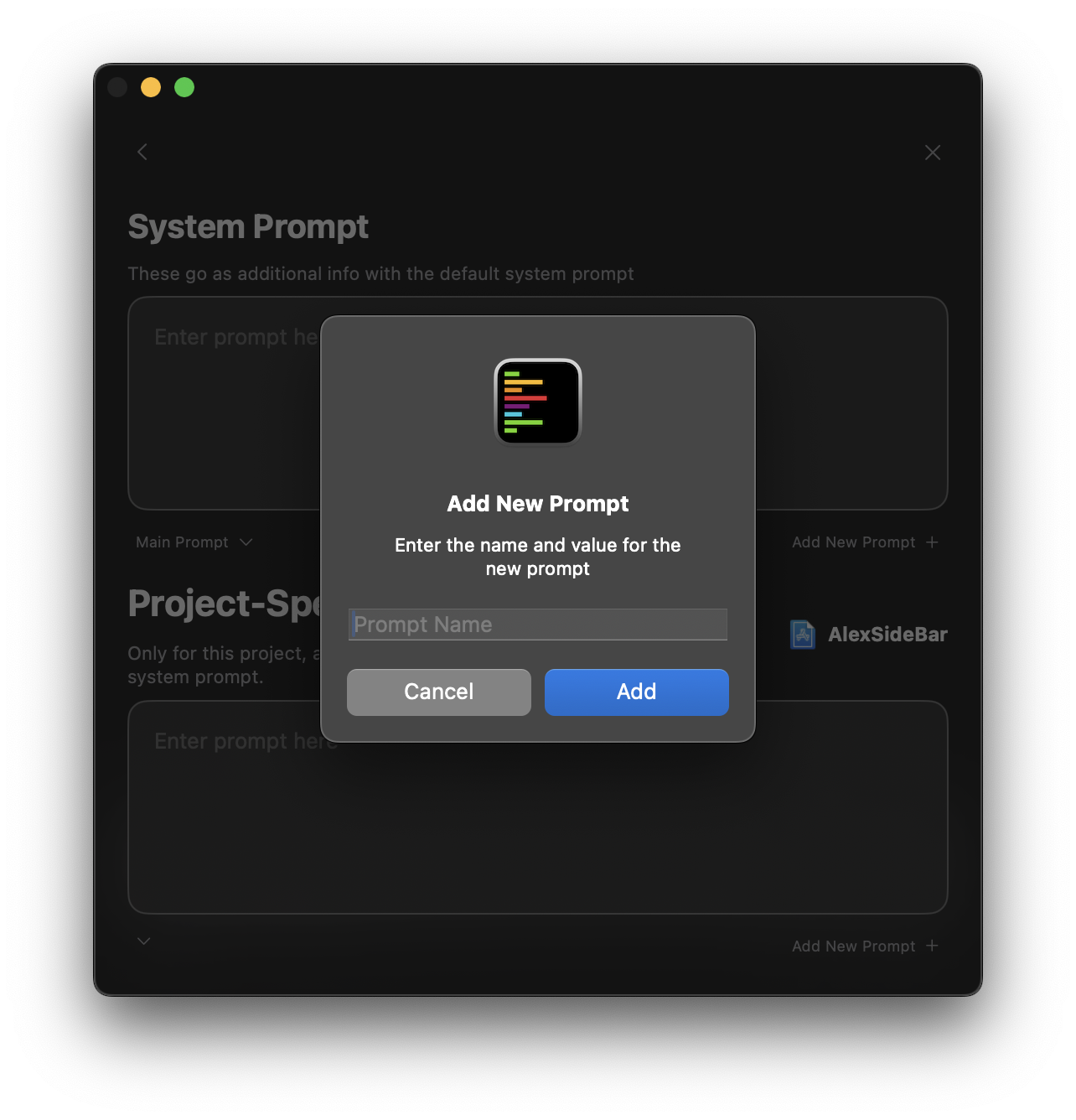

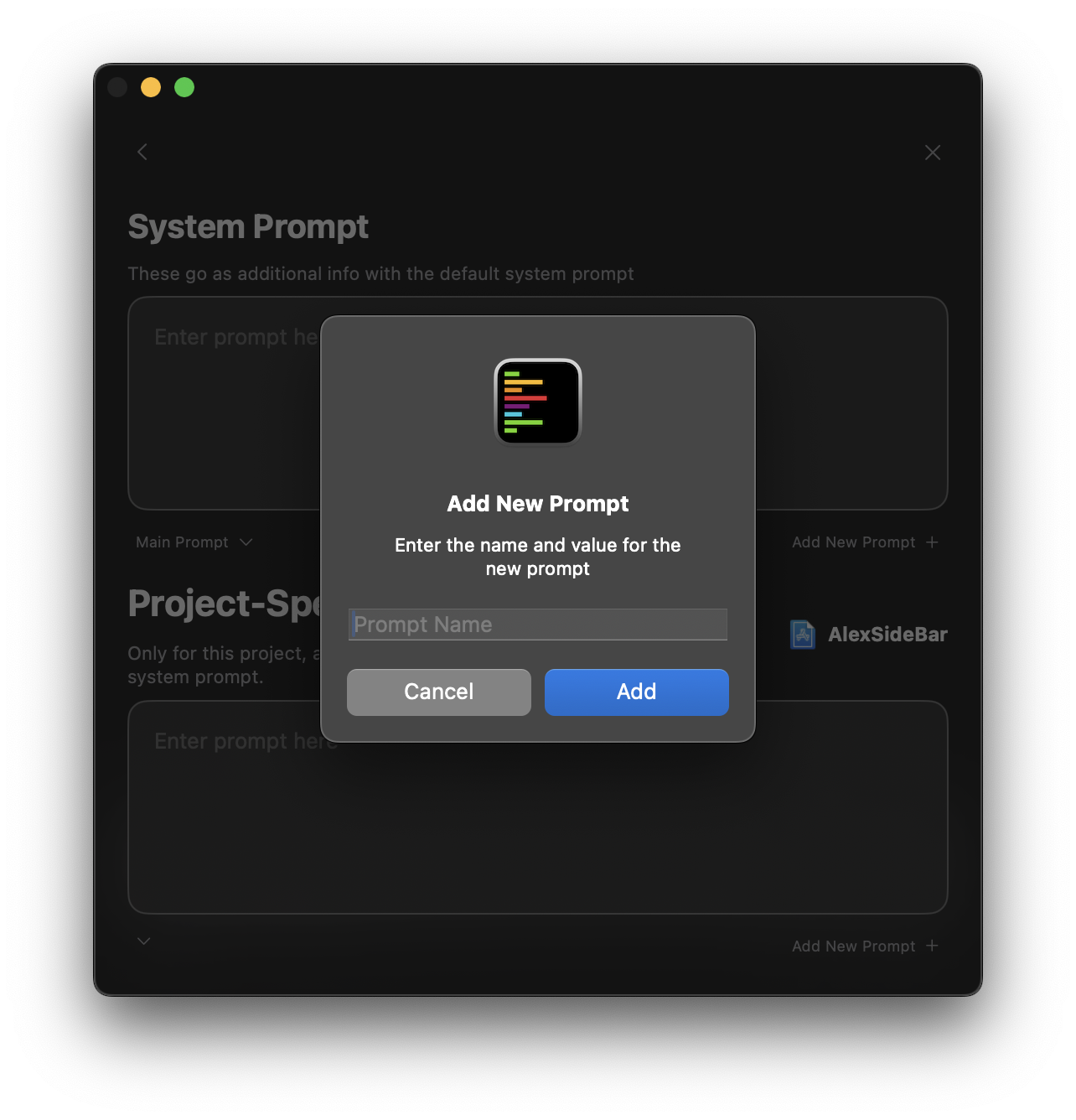

### Key Components

1. **System Prompt Section**

* Text area for global prompt configuration

* Dropdown menu to select active prompt

* "Add New Prompt +" button for creating additional prompts

2. **Project-Specific Section**

* Dedicated prompt area for current project

* Project identifier display

* Automatic combination with system prompt

Changes to prompts take effect immediately and persist across sessions.
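The exact format Alex uses to combine the two prompts is not documented here. As a minimal sketch of the idea, assuming the project prompt is simply appended to the active system prompt, a hypothetical helper `combinedPrompt(system:project:)` could look like this:

```swift
import Foundation

/// Hypothetical helper: joins the active system prompt with the project prompt.
/// Assumes a plain "system first, then project" concatenation.
func combinedPrompt(system: String, project: String?) -> String {
    let parts = [system, project ?? ""]
        .map { $0.trimmingCharacters(in: .whitespacesAndNewlines) }
        .filter { !$0.isEmpty } // skip a missing or empty project prompt
    return parts.joined(separator: "\n\n")
}

let prompt = combinedPrompt(
    system: "You are a SwiftUI and iOS development expert.",
    project: "Project: uses MVVM and CoreData."
)
print(prompt)
```

Putting the global prompt first lets the project prompt refine it with project-specific details, which matches the split between general preferences and per-project requirements.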

## SwiftUI Project Example

The following examples show how you might configure prompts for a SwiftUI project. Use them as a starting point for your own.

### System Prompt: SwiftUI Expert

```text

You are a SwiftUI and iOS development expert. For all interactions:

**ARCHITECTURE:**

- Recommend MVVM pattern implementation

- Ensure proper view composition

- Follow SwiftUI best practices

- Consider performance implications

**CODE STYLE:**

- Use modern Swift syntax

- Implement proper property wrappers

- Follow Swift naming conventions

- Write clear documentation comments

**REQUIREMENTS:**

- Consider accessibility

- Follow Apple HIG

- Include SwiftUI previews

- Handle error cases

```

### Project Prompt: Custom App

```text

Project: MLXFasting (Intermittent Fasting App)

**ARCHITECTURE:**

- Uses MVVM + Coordinator pattern

- CoreData for persistence

- Combine for data flow

- HealthKit integration